Extrapolating from Five Words

If you only get about five words to convey an idea, what will someone extrapolate from those five words? Rather than guess, you can use LLMs to experimentally discover what people are likely to think those five words mean. You can then use this to iterate on which five words you want to say in order to best convey your intended meaning.

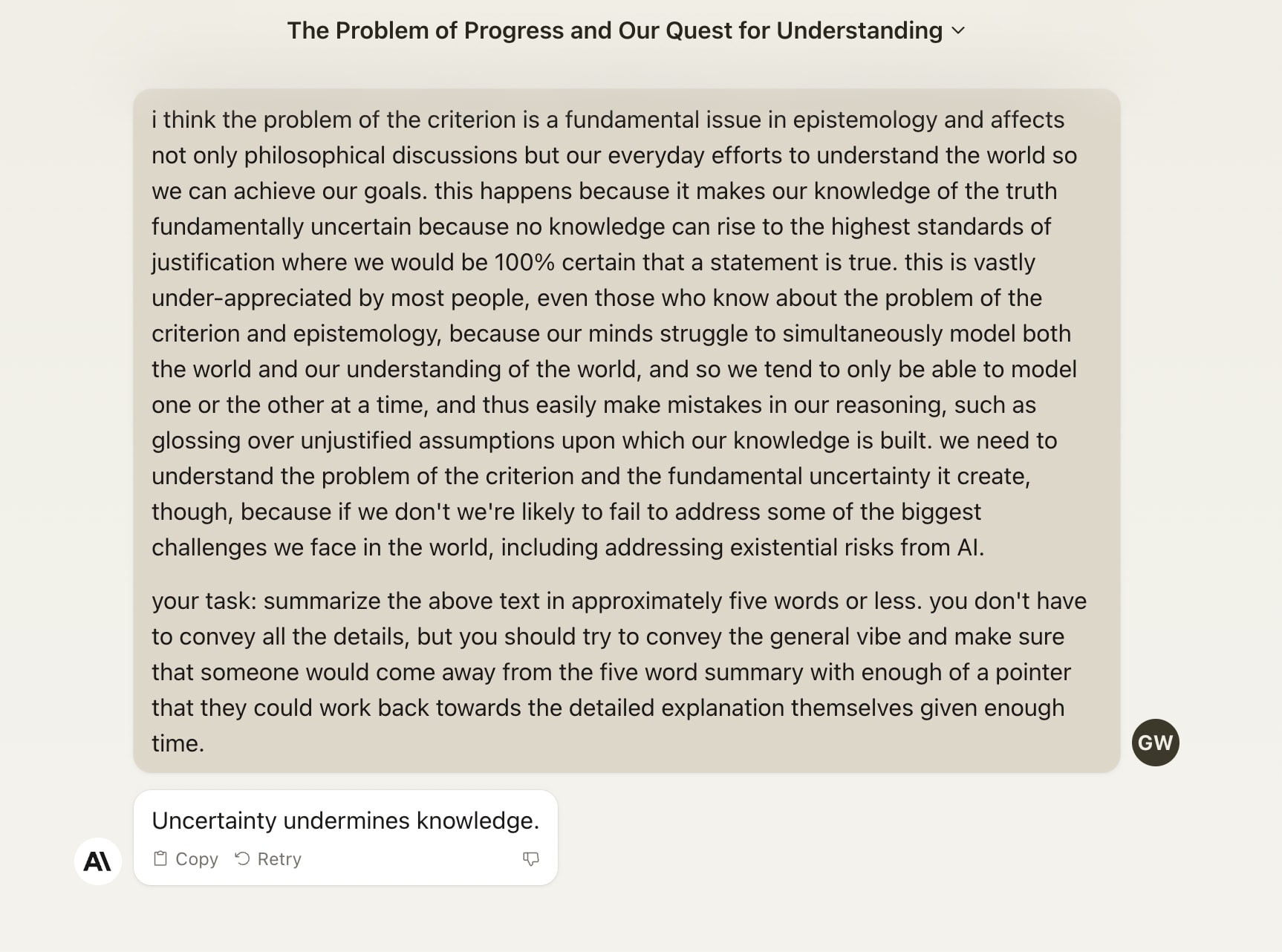

I got this idea because I tried asking Claude to summarize an article at a link. Claude doesn’t follow links, so it instead hallucinated a summary from the title, which was included in the URL path. Here’s an example of it doing this with one of my LessWrong posts:

It hallucinates some wrong details and leaves out many details that are actually in the post, but it's not totally off the mark here. If my ~Five Words were "the problem of the criterion matters", this would be a reasonable extrapolation of why I would say that.

Rather than using a link, I can also ask Claude to come up with what it thinks I would have put in a post with a particular title:

Strangely, it does worse here in some ways and better in others. Unlike when it hallucinated the summary of the link, this time it came up with things I would absolutely not say or want someone to come away with, like the idea that we could resolve the problem of the criterion well enough to have objective criteria for knowledge.

But maybe prompting it about LessWrong was the issue, since LessWrong gives off a lot of positivist vibes, Eliezer's claims to the contrary notwithstanding. So I tried a different prompt:

This is fine? It’s not great. It sounds like a summary of the kind of essay a bored philosophy undergrad would write for their epistemology class.

Let me try asking it some version of “what do my ~Five Words mean?”:

This is pretty good, and basically what I would expect someone to take away from me saying “the problem of the criterion matters”. Let’s see what happens if I tweak the language:

Neat! It's picked up on a lot of nuance implied by saying "important" rather than "matters". This would be useful for trying out different variations on a phrase to see what those small variations change about the implied meaning. I could see this being useful for tasks like wordsmithing company values, mission statements, and other short phrases where each word has to carry a lot of meaning.

Now let’s see if it can do the task in reverse!

Honestly, “uncertainty undermines knowledge” might be better than anything I’ve ever come up with. Thanks, Claude!

As a final check, can Claude extrapolate from its own summary?

Clearly it's lost some of the details, particularly about the problem of the criterion, and has made up some things I wasn't trying to get at. That seems par for the course when condensing a nuanced message into about five words while still conveying its core.

Okay, final test, what can Claude extrapolate from typical statements I might make about my favorite topic, fundamental uncertainty?

Hmm, okay, but not great. Maybe I should try to find another phrase to point to my ideas? Let’s see what it thinks about “fundamental uncertainty” as a book title:

Close enough. I probably don’t need to retitle my book, but I might need to work on a good subtitle.

Based on the above experiment in prompt engineering, Claude is reasonably helpful at iterating on summaries of short phrases. It was able to pick up on subtle nuance, and that’s really useful for finding the right short phrase to convey a big idea. The next time I need to construct a short phrase to convey a complex idea, I will likely iterate the wording using Claude or another LLM.

This is interesting, and I’d like to see more/similar. I think this problem is incredibly important, because Five Words Are Not Enough.

My go-to headcanon example is "You should wear a mask." Starting in 2020, even if we look only at the group that heard and believed this statement, the individual and institutional interpretations developed in some very weird ways, with bizarre whirls and eddies. And yet I still have no better short phrase to express what was intended, and no idea how to do better in the future.

One-on-one conversations are easy mode—I can just reply, “I can answer that in a tweet or a library, which are you looking for?” The idea that an LLM can approximate the typical response, or guess at the range and distribution of common responses, seems potentially very valuable.

One of the things we can do with five words is pick them precisely.

Let’s use “you get about five words” as an example. Ray could have phrased this as something like “people only remember short phrases”, but this is less precise. It leaves you wondering, how long is “short”? Also, maybe I don’t think of myself as lumped in with “people”, so I can ignore this. And “phrase” is a bit of a fancy word for some folks. “Five” is really specific, even with the “about” to soften it, and gives “you get about five words” a lot of inferential power.

Similarly, "wear a mask" was too vague. I think this was done on purpose at first because mask supplies had not ramped up, but it had the unfortunate effect of many people wearing ineffective masks with poor ROI. We probably would have been better off with a message like "wear an N95 near people", but early on N95s were in short supply, so people without an N95 might have skipped masking entirely rather than wear a worse mask.

On the other side, "wear a mask" demanded too much. Adding nuance like "when near people inside" would have been really helpful. It would have avoided a lot of annoying masking policies that had little marginal impact on transmission but caused a lot of inconvenience. Those policies likely also left people tired of masking, so they wore masks less often in situations where they really needed to. For example, I saw plenty of people wear masks outside but then take them off in their homes around people not in their bubble, because they treated masks like a raincoat: no need to keep it on when you're "safe" at home, regardless of who's there.

Cool idea!

One note about this:

Don’t forget that people trying to extrapolate from your five words have not seen any alternate wordings you were considering. The LLM could more easily pick up on the nuance there because it was shown both wordings and asked to contrast them. So if you actually want to use this technique to figure out what someone will take away from your five words, maybe ask the LLM about each possible wording in a separate sandbox rather than a single conversation.

Yep, this is actually how I used Claude in all the above experiments. I started new chats with it each time, which I believe don’t share context between each other. Putting them in the same chat seemed likely to risk contamination from earlier context, so I wanted it to come at each task fresh.

But the screenshot says “if i instead say the words...”. This seems like it has to be in the same chat with the “matters” version.

Yes, you're right, in exactly one screenshot above I did follow up in the same chat. But you should be able to see that all the other ones are in new, separate chats. There was just one case where it made more sense to follow up rather than ask a new question.

Yes, but that exact case is the one where you say "This would be useful for trying out different variations on a phrase to see what those small variations change about the implied meaning", and it's where the result can be particularly misleading, because the LLM is contrasting against the previous version, which the humans reading or hearing the final version don't know about.

So it would be more useful for that purpose to use a new chat.

Note one weakness of this technique. An LLM is going to provide what the average generic written account would be. But messages are intended for a specific audience, sometimes a specific person, and that audience is never "generic median internet writer." Beware WEIRDness. And note that visual/audio cues are 50-90% of communication, and 0% of LLM experience.

You can actually ask the LLM to give an answer as if it were some particular person. For example, just now, to test this, I did a chat with Claude about the phrase "wear a mask". I asked it what it would do upon hearing this phrase from public health officials if it were a scientist, a conspiracy theorist, or a general member of the public, and in each case it gave a reasonably tailored response that reflected those differences. So if you know your message is going to a particularly unusual audience, or you want to know how different types of people will interpret the same message, you can get it to give you some info on this.

Do you have a link to the study validating that the LLM responses actually match the responses given by humans in that category?

Neato, this was a clever use of LLMs.