Anxiety and computer architecture

Computer architecture seems like a great analogy for some of the anxiety that I feel.

Call stack fatigue

From “Call stacks in everyday life”:

For example, before writing this article I was getting my dog ready for a walk.

But then I realized that the kitchen needed to be cleaned, so I started doing that.

Then I got inspired to write this article, so I started doing that.

Then I got a text from my brother.

I just responded to my brother.

Now I’m going to finish up the article.

After that I’ll finish cleaning the kitchen.

After that I’ll finally go and walk my dog.

At its highest point, the call stack (most recent task on top) looked something like this:

```
textBrother
writeArticle
cleanKitchen
getDogReady
```

Having more than one item on my call stack produces an uneasy feeling in me. “Fatigue” isn’t really the right word, but let’s call it that.
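The story above maps directly onto nested function calls. Here is a minimal JavaScript sketch, assuming each interruption is modeled as a call made before the current task returns; the `run` helper and the snapshot logic are hypothetical illustrations, not anything from the original post.

```javascript
// Hypothetical model of the interrupted tasks above: starting a task pushes
// it onto an explicit stack, and finishing it pops it off.
const callStack = [];
let deepestSnapshot = [];

function run(name, interruption) {
  callStack.push(name);
  // Remember what the stack looked like at its highest point.
  if (callStack.length > deepestSnapshot.length) {
    deepestSnapshot = [...callStack];
  }
  if (interruption) interruption(); // a new task starts before this one is done
  console.log(`finished ${name}`);
  callStack.pop();
}

run("getDogReady", () =>
  run("cleanKitchen", () =>
    run("writeArticle", () =>
      run("textBrother"))));

// Print the peak stack with the most recent task on top, as in the listing.
console.log([...deepestSnapshot].reverse().join("\n"));
```

Tasks finish in the reverse order they were started (last in, first out), which is exactly the order the post walks through: reply to the brother, finish the article, clean the kitchen, walk the dog.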

Fewer instructions in memory

A computer program is basically a list of instructions. Do this, then that, then that, then that. My mind is often in a similar state, where it has a list of things it needs to execute. It’s as if there were a to-do list in my head that I am painfully aware of.

I wish it didn’t work like this. Instead, here is an alternative architecture:

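```javascript
let alive = true;

// Only one instruction is held in memory at any given time.
// firstInstruction and fetchNewInstruction are left abstract here.
let currentInstruction = firstInstruction;

while (alive) {
  currentInstruction(); // execute it with full focus
  // Then release it and fetch the next one from the "database".
  currentInstruction = fetchNewInstruction();
}
```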

Notice the infinite loop? It’s a feature, not a bug.

There is a tight deadline for this feature, and if we don’t meet the deadline, or if it ends up breaking at some point afterwards, the whole company immediately fails.

Anyway, that’s a conversation for another day. I just couldn’t help myself.

For now, what I was pointing at is the fact that there is only one instruction in memory at any given time. That instruction is executed. Then a new instruction is fetched from the database. Then that instruction is executed. Then a new one is fetched. So on and so forth.

Really, it’s quite zen if you think about it. The “mind” is totally focused on the present. There is nothing else in memory available to distract it.

Memory here is analogous to working memory, and the database is analogous to long-term memory. Or, better yet, a piece of paper. Your working memory doesn’t have that other to-do list swimming around, clouding your thinking and producing anxiety. It’s purely focused on the task at hand. Then, only once that task is finished, it 1) releases itself from the burden of thinking about it, 2) consults the piece of paper to “load” a new task, and 3) proceeds to give its full focus to this new task.
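The loop above can be made runnable with one change: it needs something to fetch from. A minimal sketch, assuming the “piece of paper” is just an array of hypothetical tasks and that the loop stops once the paper is empty (so, unlike the original, it is not an infinite loop):

```javascript
// Hypothetical "piece of paper": long-term storage that holds pending tasks.
// Each task is a function; calling it means doing the task.
const paper = [
  () => "walk the dog",
  () => "clean the kitchen",
  () => "finish the article",
];

// Load exactly one task from the paper into "working memory".
// Returns undefined once the paper is empty.
function fetchNewInstruction() {
  return paper.shift();
}

const completed = [];
let currentInstruction = fetchNewInstruction();

// Only `currentInstruction` is held in working memory at any moment.
while (currentInstruction !== undefined) {
  completed.push(currentInstruction()); // full focus on the single task
  currentInstruction = fetchNewInstruction(); // release it, then load the next
}

console.log(completed);
```

The pending tasks still exist, but they live on the paper rather than in the loop’s single working variable.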

Threads

A computer might look like it is doing multiple things at once when you have Spotify playing music, a file downloading, and a Zoom call open, all at the same time. That is actually just an illusion though. In reality, the computer is spending a few units of time on Spotify, then a few on the file download, then a few on Zoom, then a few on the music again. It’s just that this happens rapidly. So rapidly that you don’t even notice the switching.
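That time slicing can be sketched as a toy round-robin scheduler on a single core. The program names, the units of work each one needs, and the quantum (how many units a program gets before the next one takes a turn) are made-up values for illustration; real OS schedulers are considerably more sophisticated.

```javascript
// Toy single-core round-robin scheduler. Program names, work amounts, and the
// quantum are illustrative assumptions, not real measurements.
function roundRobin(programs, quantum) {
  const queue = programs.map((p) => ({ ...p })); // copy so inputs aren't mutated
  const timeline = []; // which program owns the core in each unit of time
  let switches = 0;
  let previous = null;
  while (queue.length > 0) {
    const current = queue.shift();
    const slice = Math.min(quantum, current.remaining);
    for (let i = 0; i < slice; i++) timeline.push(current.name);
    current.remaining -= slice;
    if (previous !== null && previous !== current.name) switches++;
    previous = current.name;
    if (current.remaining > 0) queue.push(current); // back of the line
  }
  return { timeline, switches };
}

const { timeline, switches } = roundRobin(
  [
    { name: "Spotify", remaining: 3 },
    { name: "download", remaining: 2 },
    { name: "Zoom", remaining: 3 },
  ],
  1,
);

console.log(timeline.join(" -> "));
console.log(`context switches: ${switches}`);
```

With a quantum of 1 the core switches 7 times in 8 units of work. Raising the quantum to 3 lets each program finish in a single turn, dropping the count to 2 at the cost of the others waiting longer for their first turn.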

My mind can be similar. The frequency of switching is obviously much lower, but it’s high enough that it is unpleasant.

This is a subtly different point from the one in the previous section. There I was saying that just having other instructions in memory is stressful. Here I am saying that the process of switching between threads is stressful.

Viruses

The solution here seems simple: just don’t open new threads. Well, part of the problem is that we live in a world with so many stimuli, and it is so tempting to open them. But a bigger part of the problem is that “I” don’t start them.

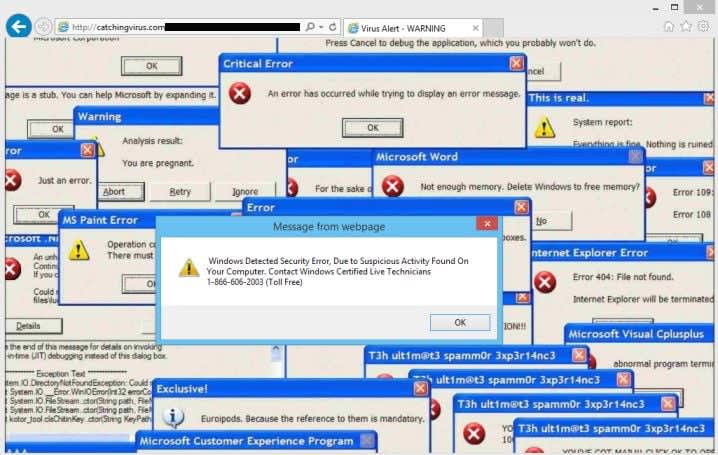

They just… get started. It’s like I’m infected with one of those old school viruses:

Universal

This post is feeling too grim and personal. That’s not really what I’m going for. I’ve been speaking about the anxiety that I feel, but I suspect that it’s universal.

The thing about computer architecture is that pretty much all computers are built this way. Their details differ, but the underlying architecture is the same.

Computers differ in things like how much capacity they have to hold lots of instructions in memory, how many threads they can handle, how quickly they shift between threads, how prone they are to getting viruses. Stuff like that. But given the architecture, you can’t really avoid these things entirely.

Ditto for humans. Given our architecture, I think we’re all destined to have these sorts of feelings. If so, it’s futile to try to prevent them entirely, and trying is likely to cause disappointment and frustration, making things worse.

So what is the path forward? Work with what you have. Tweak the parameters that are tweakable, and accept the outcome.

For now at least. I don’t want to discourage anyone from thinking big. Eventually I’d like to see a new architecture.

Comments

In this metaphor, GTD works by replacing a recursive call with sequentially processing a list. Instead of “I was going to do A, but I was also going to do B, and I was also going to do C...” you get “tasks = array[A,B,C...Z]; while not empty(tasks): do (pick_one(tasks));”. The same amount of work, but a smaller stack.
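The pseudocode in that comment can be turned into a small JavaScript comparison. This is a sketch under stated assumptions: the items `A` to `E`, the `doTask` helper, and the open-task counter are hypothetical, and `pick_one` is simplified to taking the first item. Both styles do the same five tasks; only the number of tasks open at once differs.

```javascript
// Hypothetical to-do items standing in for A, B, C... from the comment.
const todo = ["A", "B", "C", "D", "E"];

let openTasks = 0; // tasks started but not yet finished
let maxOpen = 0;
const finishOrder = [];

// Do one task, tracking how many tasks are open at the same time.
function doTask(name, interruptWith) {
  openTasks++;
  maxOpen = Math.max(maxOpen, openTasks);
  if (interruptWith) interruptWith(); // start something else before finishing
  finishOrder.push(name); // the task only completes after its interruption
  openTasks--;
}

// Recursive style: each task is interrupted by the next one on the list.
function recursiveStyle(items) {
  if (items.length === 0) return;
  const [first, ...rest] = items;
  doTask(first, () => recursiveStyle(rest));
}

// GTD style: keep the list outside your head and finish one item at a time.
// pick_one is simplified here to "take the first item".
function gtdStyle(items) {
  const tasks = [...items];
  while (tasks.length > 0) {
    doTask(tasks.shift());
  }
}

// Reset the counters, run one style, and report the peak and finish order.
function measure(style) {
  openTasks = 0;
  maxOpen = 0;
  finishOrder.length = 0;
  style(todo);
  return { maxOpen, order: finishOrder.join("") };
}

console.log(measure(recursiveStyle)); // maxOpen 5, order "EDCBA"
console.log(measure(gtdStyle)); // maxOpen 1, order "ABCDE"
```

The recursive version also finishes tasks in reverse order, so the first thing you meant to do is the last thing done, much like the dog walk in the post.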

The solution to multithreading is “remove the distractions”. Duh. (Easier said than done, of course.) But also, if possible, do things sequentially rather than in parallel.

The answer to viruses is some kind of mindfulness. Notice that the thought is a virus, and ignore it; keep ignoring it as many times as necessary.

I’ve become very paranoid about data loss, and I’m blaming my job in software engineering for introducing this fear. I wish my entire life could be recorded (from inside my brain) so that I can rely on an accurate store for recollection, rather than my forgetful mushy brain database. Since that’s not possible yet, I’m living in a kind of desensitised acceptance where I say ‘it’s all ephemeral… so be it.’

I’d dispute this. The thing that just happens is the new thread presenting itself. Opening it is another step. To quote Sam Harris, “one way to get rid of [the desire to check your emails] is to check your emails. Another is to notice the desire to check your emails as a pattern of energy and just let go.”

He tends to describe the human mind in its default state as helplessly bouncing from thought to thought, which to me sounds very similar to your description. But this has a pretty logical remedy in mindfulness.

Also, nitpick:

Isn’t this false nowadays, when everyone has multi-core CPUs?

Great point about Sam Harris. I actually went through his meditation course, Waking Up. I wanted to mention this point in the article but didn’t, for whatever reason. In retrospect, I think I should have.

However, I think that there is a very All Therapy Books-y thing going on with meditation. In Scott’s article he talks about how most therapy books essentially claim that they’ve “figured it out”. But if that were true, why do so many patients continue to suffer? I have the same objections to meditation.

Nope, still applies. Even if you have more cores than running threads (remember programs are multi-threaded nowadays) and your OS could just hand one or more cores over indefinitely, it’ll generally still do a regular context switch to the OS and back several times per second.

And another thing that’s not worth its own comment but puts some numbers on the fuzzy “rapidly” from the article:

For Windows, that’s traditionally 100 Hz, i.e. 100 context switches per second. For Linux it’s a kernel config parameter, and you can choose among several options between 100 and 1000 Hz. And “system calls” (e.g. talking to other programs or to hardware like the network, disk, or sound card) can make those switches happen much more often.
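Those rates are easier to picture as intervals between timer ticks. A small conversion, using 100 and 1000 Hz from the comment plus 250 Hz as one of the in-between Linux options; a tick is an opportunity for the OS to switch, not a guarantee that it does.

```javascript
// Convert a timer-interrupt rate in Hz into milliseconds between ticks.
function tickIntervalMs(hz) {
  return 1000 / hz;
}

// 100 and 1000 Hz come from the comment; 250 Hz is another Linux option.
for (const hz of [100, 250, 1000]) {
  console.log(`${hz} Hz: a tick every ${tickIntervalMs(hz)} ms, ${hz * 60} per minute`);
}
```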

There are no processes that can run independently on every time scale. There will be many clock cycles where every core is processing, and many where some cores are waiting on some shared resource to be available. Likewise if you look at parallelization via distinct hosts—they’re CLEARLY parallel, but only until they need data or instructions from outside.

The question for this post is “how much loss is there to wait times (both context switches and i/o waits), compared with some other way of organizing”? Primarily, the optimizations that are possible are around ensuring that the units of work are the right size to minimize the overhead of unnecessary synchronization points or wait times.
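The point about sizing units of work can be illustrated with a deliberately crude model. Everything here is an assumption rather than a measurement: 96 units of uniform work, 4 workers, and a fixed overhead of 1 unit per chunk for the synchronization point before it starts.

```javascript
// Toy model (assumed, not measured): totalWork units split into equal chunks
// of size `chunk`, run on `workers` cores in rounds, where every chunk pays a
// fixed synchronization overhead before it can start.
function makespan(totalWork, chunk, workers, overhead) {
  if (totalWork % chunk !== 0) throw new Error("chunk must divide totalWork");
  const chunks = totalWork / chunk;
  const rounds = Math.ceil(chunks / workers); // some cores idle in the last round
  return rounds * (chunk + overhead);
}

for (const chunk of [1, 4, 8, 24, 32, 96]) {
  console.log(`chunk ${chunk}: finishes at t=${makespan(96, chunk, 4, 1)}`);
}
```

Tiny chunks drown in overhead (t=48 for chunk size 1), oversized ones leave cores idle (t=33 and t=97), and the best case in this model is one chunk per worker (t=25). With uneven task sizes, somewhat smaller chunks usually balance the load better, which this model ignores.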

Interesting. But does this mean “no two tasks are ever truly executed in parallel” or just “we have true parallel execution but nonetheless have frequent context switches”?

The latter. If you have 8 or 16 cores, it’d be really sad if only one thing was happening at a time.