Moral Mountains

Suppose that someone asks you to help them move into their new apartment. Should you help them?

It depends, right?

Depends on what?

My answer: moral weights.

Suppose it’s your brother who asked you. And suppose you really love your brother. You guys are really close. You care about him just as much as you care about yourself. You assign a “moral weight” of 1-to-1. Suppose that helping him move will bring him 10 utilons. If so, you should help him move as long as it costs you less than 10 utilons.[1]

Now let’s suppose that it’s your cousin instead of your brother. You like your cousin, but not as much as your brother. You care about yourself four times as much as you care about your cousin. Let’s call this a “moral weight” of 4-to-1, or just “4”. In this situation, you should help him move as long as it costs you less than 2.5 utilons (his 10 utilons divided by your weight of 4).

Suppose it’s a medium-good friend of yours. You care about yourself 10x as much as you care about this friend. Here, the breakeven point would be when it costs you 1 utilon to help your friend move.[2]

What if it’s a coworker who is cool but isn’t your favorite person in the world? You assign them a moral weight of 100. The breakeven point is 0.1 utilons.
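The four breakeven points above all come from the same rule: help when the benefit to the other person, divided by the moral weight, is more than what it costs you. Here is a minimal Python sketch of that arithmetic. The function names are made up for illustration, and it assumes the move is worth 10 utilons to whoever asks (the text only states that number for the brother).

```python
def breakeven_cost(benefit_to_them: float, moral_weight: float) -> float:
    """Largest cost to yourself (in utilons) at which helping still pays off.

    moral_weight is how many times more you care about yourself than about
    the other person, so 1 means you care about them as much as yourself.
    """
    if moral_weight <= 0:
        raise ValueError("moral_weight must be positive")
    return benefit_to_them / moral_weight


def should_help(benefit_to_them: float, moral_weight: float, cost_to_you: float) -> bool:
    """True when the cost to you is below the breakeven point."""
    return cost_to_you < breakeven_cost(benefit_to_them, moral_weight)


# Assumption: the move is worth 10 utilons to each person in the examples.
weights = {"brother": 1, "cousin": 4, "friend": 10, "coworker": 100}
breakevens = {who: breakeven_cost(10, w) for who, w in weights.items()}
```

With these inputs, `breakevens` reproduces the numbers in the text: 10, 2.5, 1, and 0.1 utilons.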

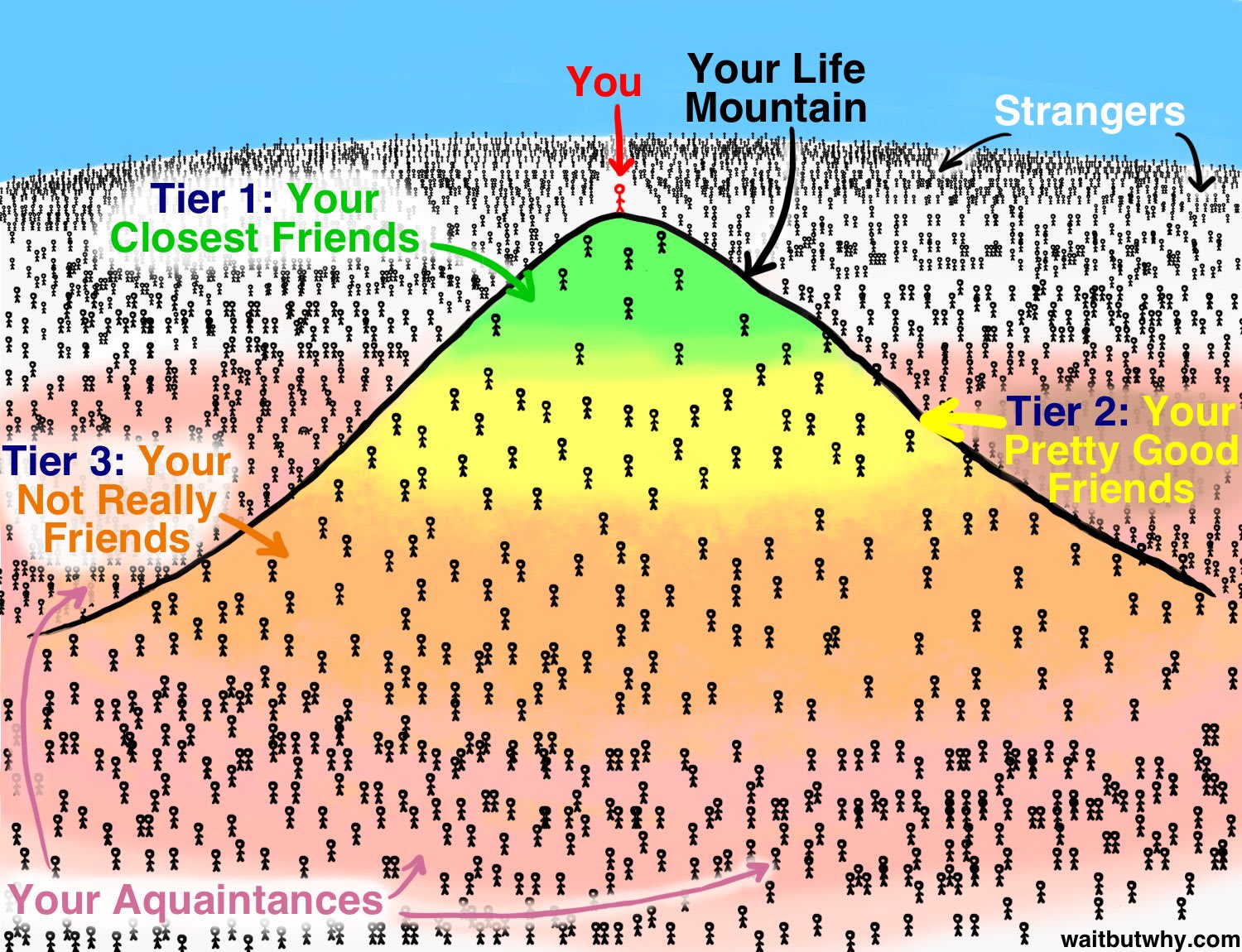

Framing things this way makes me think back to the following diagram from 10 Types of Odd Friendships You’re Probably Part Of:

In that blog post, Tim uses the diagrams to represent how close you are to various people. I think it can also be used to represent how much moral weight you assign to various people.[3] For example, maybe Tier 1 includes people for whom you’d assign moral weights up to 5, Tier 2 up to 30, and Tier 3 up to 1,000.
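To make the tier idea concrete, here is a small sketch that maps a moral weight to a tier. The ceilings of 5, 30, and 1,000 are just the example numbers from the paragraph above, and the name `tier_for` is hypothetical.

```python
# Example tier ceilings from the text; these are illustrative, not canonical.
TIER_CEILINGS = [(1, 5), (2, 30), (3, 1000)]


def tier_for(moral_weight: float):
    """Return the tier number for a moral weight, or None if it is past Tier 3."""
    for tier, ceiling in TIER_CEILINGS:
        if moral_weight <= ceiling:
            return tier
    return None


# The cousin (4) lands in Tier 1, the friend (10) in Tier 2, the coworker (100) in Tier 3.
example_tiers = [tier_for(w) for w in (4, 10, 100)]
```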

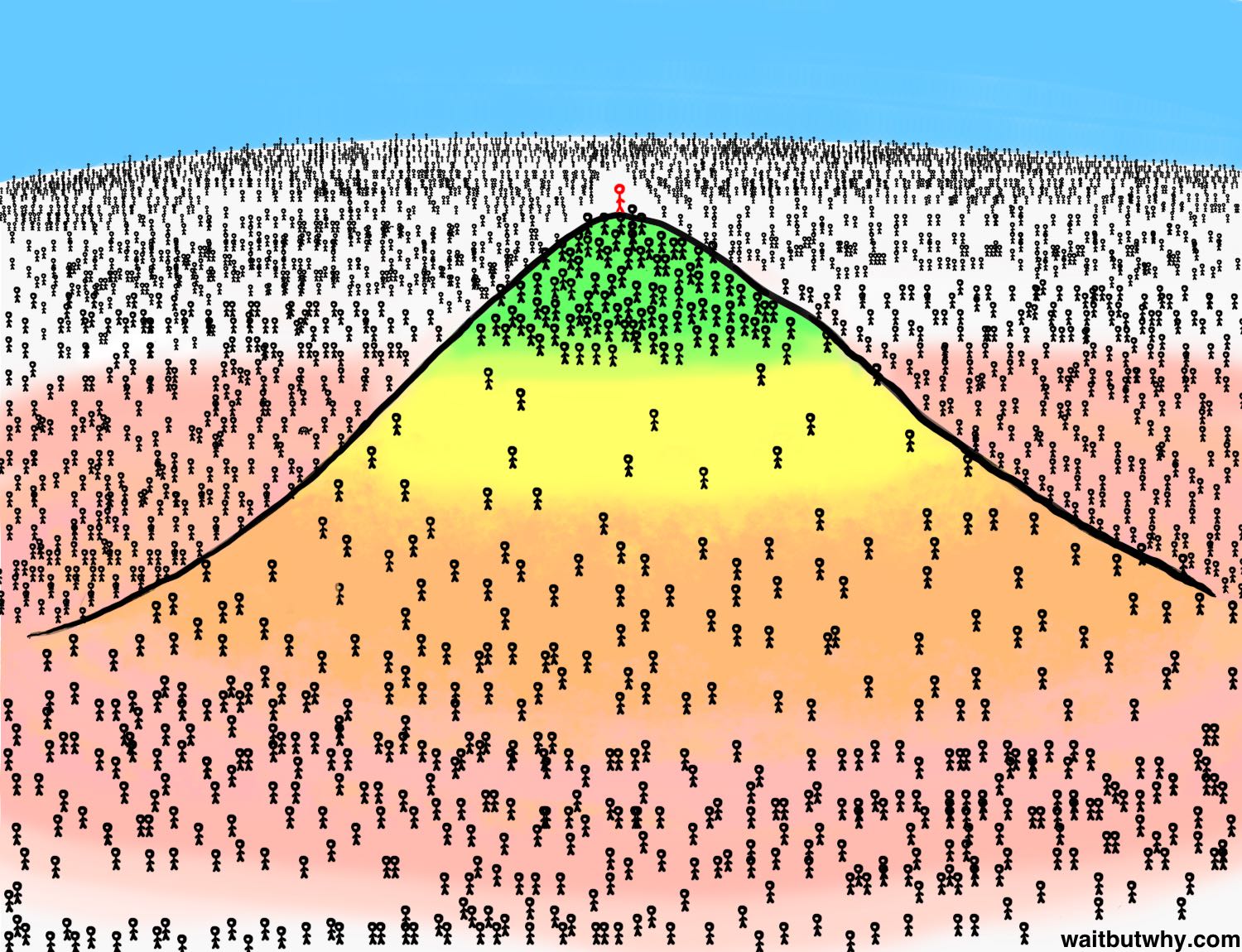

Different people have different “moral mountains”. People like Near-and-Dear Nick care a lot about the people who are close to them, but below that things get a little sparse.

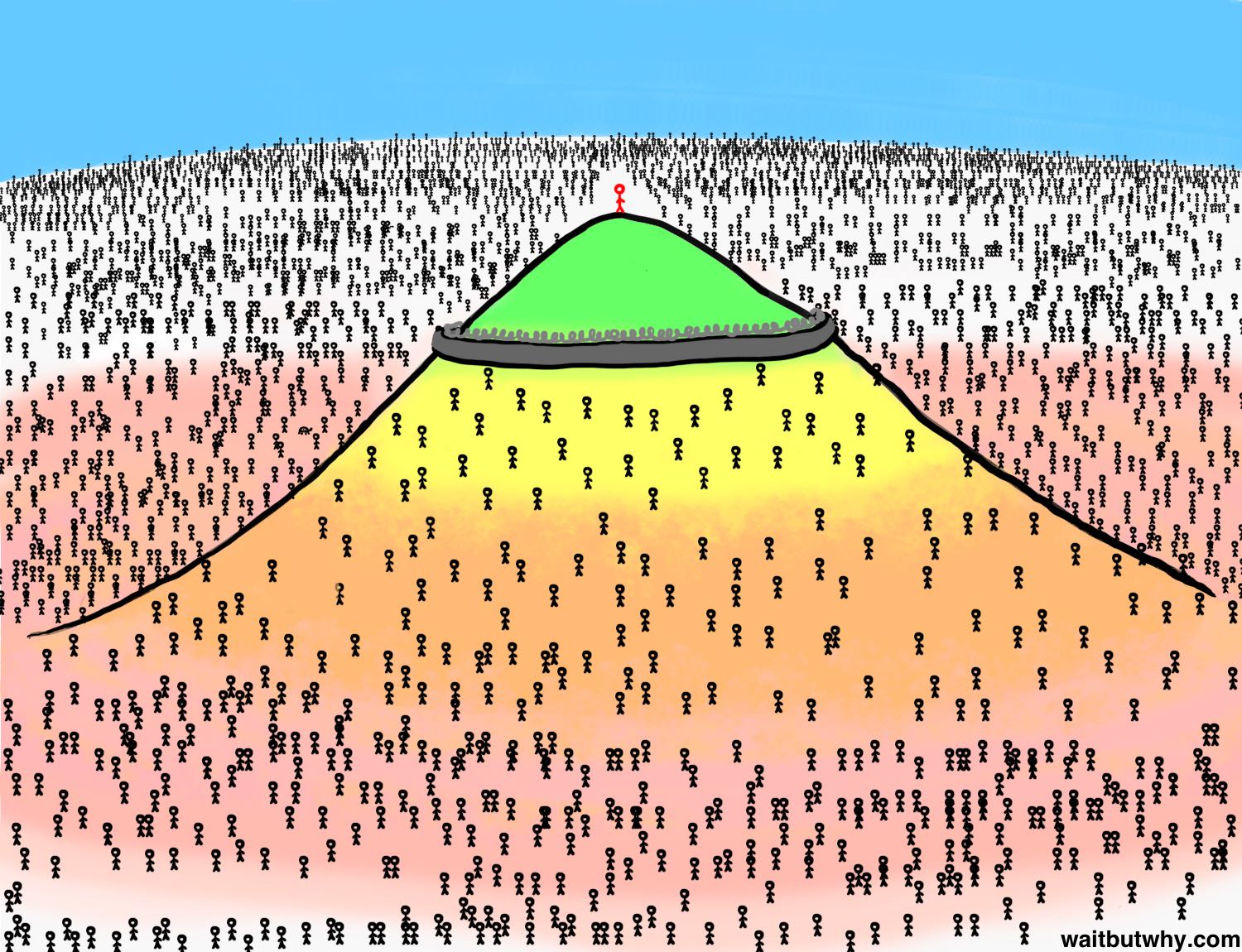

For others like Individualistic Ian, there isn’t anyone who they care about nearly as much as they care about themself, but there are still a decent number of people in Tier 2 and Tier 3.

Then you’ve got your Selfish Shelbys who pretty much just think about themselves.

If I were able to draw like Tim Urban I would include more examples, but unfortunately I don’t have that skill. But for fun, let’s just briefly think about some other examples, and ask some other questions.

What would Peter Singer’s mountain look like? Is everyone in Tier 1?

What about vegans? Where do they place animals on their mountains?

Longtermists? Where do they place that guy Tron Landale who is potentially living in the year 31,402?

What about nationalism in politics? Consider a nationalistic American. Would they bump someone down a tier for moving from Vermont to Montreal? Two tiers? And what if they moved to China? Or what if they were born in China?

I suppose it’s not only sentient beings that can be placed on the mountain. Some people value abstract things like art and knowledge. So then, how high up on the mountain do those things get placed?

I’ll end with this thought: I think you can probably use these ideas of moral weights and moral mountains to quantify how altruistic someone is. Maybe just add up all of the moral weights you assign? I dunno.
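One wrinkle with adding things up: under the convention used earlier, a bigger weight means you care less, so summing the raw numbers would reward indifference. One possible reading, and this is an assumption rather than a settled definition, is to sum the reciprocals instead, so that a weight of 1 counts fully and a weight of 100 counts for 0.01. The name `care_score` and the example weights below are made up.

```python
def care_score(moral_weights):
    """Sum of 1/weight over everyone on the mountain (a hypothetical metric)."""
    return sum(1 / w for w in moral_weights if w > 0)


# Made-up mountains: Nick has a crowded summit, Shelby's is nearly empty.
near_and_dear_nick = [1, 1, 2, 2, 5]
selfish_shelby = [100, 1000]

nick_score = care_score(near_and_dear_nick)
shelby_score = care_score(selfish_shelby)
```

On these made-up numbers Nick scores far higher than Shelby, which matches the visual intuition that his mountain is more crowded.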

I prefer to think about it visually. Someone like Selfish Shelby isn’t very altruistic because her mountain is pretty empty. On the other hand, someone like Near-and-Dear Nick looks like his mountain is reasonably crowded.

It gets interesting though when you compare people with similarly crowded, but differently shaped mountains. For example, how does Near-and-Dear Nick’s mountain compare to Longtermist Lauren’s mountain? Nick’s mountain looks pretty cozy with all of those people joining him at the summit.

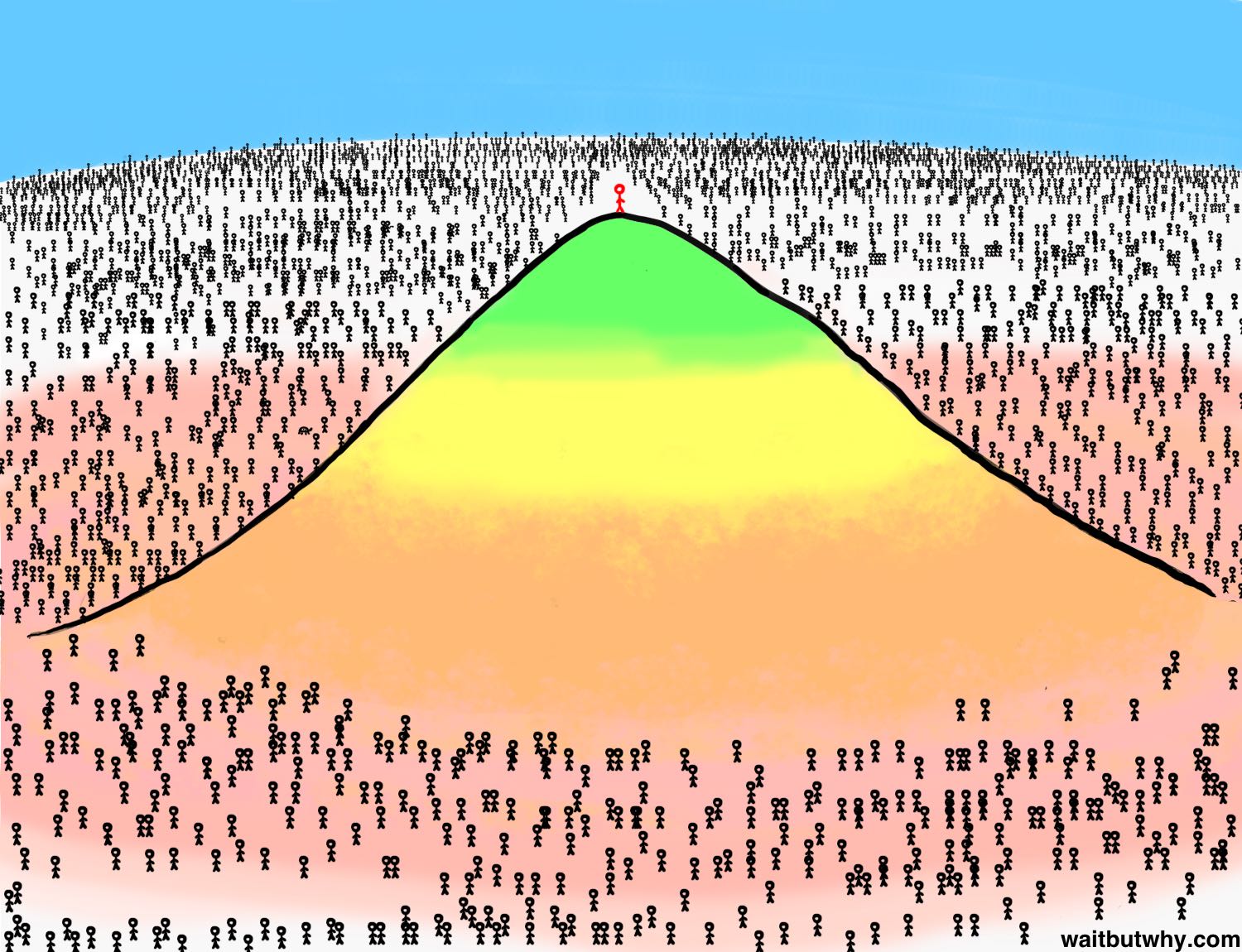

But he’s also got a ton of people on the white parts of land who he basically couldn’t give a shit about. On the other hand, Longtermist Lauren’s mountain looks a little sparse at first glance. There’s definitely no parties going on up at the summit.

But upon closer inspection, that red-tinted fourth tier spans out seemingly forever. And the white section labeled “Strangers” is miles and miles away.

[1] This is an overly simplified toy example. Let’s assume for the sake of this discussion that there are no other consequences at play here. It’s a clean, one-time, simple trade-off.

[2] In practice, helping your friend move would often be a win-win situation. Instead of costing you utilons, it’s likely that you generate utilons for yourself. I.e., it’s likely that it’s a pleasant experience. Warm fuzzies and whatnot.

[3] “Closeness” and “moral weight” are probably pretty related. But still, they are two distinct concepts.

Maybe “altruistic” isn’t the right word. Someone who spends every weekend volunteering at the local homeless shelter out of a duty to help the needy in their community but doesn’t feel any specific obligation towards the poor in other areas is certainly very altruistic. The amount that one does to help those in their circle of consideration seems to be a better fit for most uses of the word altruism.

How about “morally inclusive”?

I dunno. I feel a little uncertain here about whether “altruistic” is the right word. Good point.

My current thinking: there are two distinct concepts.

1. The moral weights you assign. What your “moral mountain” looks like.

2. The actions you take. Do you donate 10% of your income? Volunteer at homeless shelters?

To illustrate the difference, consider someone who has a crowded moral mountain, but doesn’t actually take altruistic actions. At first glance this might seem inconsistent or contradictory. But I think there could be various legitimate reasons for this.

One possibility is simply akrasia.

Another is if they don’t have a lot of “moral luck”. For example, I personally am pretty privileged and stuff so it wouldn’t be very costly for me to volunteer at a homeless shelter. But for someone who works two jobs and has kids, it’d be much more costly. And so we could each assign the exact same moral weights but take very different actions.

The shape of the mountain. For example, Longtermist Lauren might not donate any money or help her friends move, but she takes part in various “swing for the fences” types of projects that will almost certainly fail, but that would have an enormous impact on the world and its future if they succeeded.

All of that said, (1) feels like a better fit for the term “altruistic” than (2). And really, (2) doesn’t feel to me like it should be incorporated at all. But I’m not really sure.

I think this is oversimplified in a number of dimensions, to the point that I don’t think the framing makes particularly useful predictions or recommendations.

Particularly missing are types of caring and scalability of assistance provided. I simply can’t help very many people move, and there are almost zero individuals who I’d give cash to in the amounts that I donate to larger orgs. Also, and incredibly important, is the idea of reciprocity: a lot of support for known, close individuals has actual return support (not legibly accountable, but still very real).

This makes it hard for me to put much belief behind the model when you use terms like “altruistic” or “selfish”. I suspect those terms CAN have useful meaning, but I don’t think this captures it.

I’m not sure what you mean. Would you mind elaborating?

I’m not understanding what the implications of these things are.

I think that would be factored in to expected utility. Like by helping a friend move, it probably leads to the friend wanting to scratch your back in the future, which increases the expected utility for you of helping them move.

I think I’m mostly responding to

I disagree that this conception of moral weights is directly related to “how altruistic” someone is. And perhaps even that “altruistic” is sufficiently small-dimensioned to be meaningfully compared across humans or actions.

Sure, but so does everything else (including your expectations of stranger-wellness improvement).

Hm. I’m trying to think about what this means exactly and where our cruxes are, but I’m not sure about either. Let me give it an initial stab.

This feels like an argument over definitions. I’m saying “here is a way of defining altruism that seems promising”. You’re saying “I don’t think that’s a good way to define altruism”. Furthermore, you’re saying that you think a good definition of altruism should be at least medium-dimensioned, whereas I’m proposing one that is single-dimensioned.

Context: I don’t think I have a great understanding of the A Human’s Guide To Words sequence. I’ve spent time reading and re-reading it over the years, but for some reason I don’t feel like I have a deep understanding of it. So please point out anything you think I might be missing.

My thoughts here are similar to what I said in this comment. That it’s useful to distinguish between 1) moral weights and 2) actions that one takes. It’s hard to say why exactly, but it feels right to me to call (1) “altruism”.

It’s also not immediately clear to me what other dimensions would be part of altruism, and so I’m having trouble thinking about whether or not a useful definition of altruism would have (many) other dimensions to it.

Good point. Maybe it’d make sense then to, when thinking about altruism, kinda factor out the warm fuzzies you get from doing good. Because if you include those warm fuzzies (and whatever other desirable things), then maybe everything is selfish.

On second thought, maybe you can say that this actually hits on what altruism really is: the amount of warm fuzzies you get from doing good to others.

I think we’re disagreeing over the concepts and models that have words like “altruism” as handles, rather than over the words themselves. But they’re obviously related, so maybe it’s “just” the words, and we have no way to identify whether they’re similar concepts. There’s something about the cluster of concepts that the label “altruistic” invokes that gives me (and I believe others, since it was chosen as a big part of a large movement) some warm fuzzies—I’m not sure if I’m trying to analyze those fuzzies or the concepts themselves.

I (weakly) don’t think the utility model works very well in conjunction with altruism-is-good / (some)warm-fuzzies-are-suspect models. They’re talking about different levels of abstraction in human motivation. Neither is true, both are useful, but for different things.

Gotcha. If so, I’m not seeing it. Do you have any thoughts on where specifically we disagree?

My own thoughts and reactions are somewhat illegible to me, so I’m not certain this is my true objection. But I think our disagreement is what I mentioned above: Utility functions and cost-benefit calculations are tools for decisions and predictions, where “altruism” and moral judgements are orthogonal and not really measurable using the same tools.

I do consider myself somewhat altruistic, in that I’ll sacrifice a bit of my own comfort to (I hope and imagine) help near and distant strangers. And I want to encourage others to be that way as well. I don’t think framing it as “because my utility function includes terms for strangers” is more helpful nor more true than “because virtuous people help strangers”. And in the back of my mind I suspect there’s a fair bit of self-deception in that I mostly prefer it because that belief-agreement (or at least apparent agreement) makes my life easier and maintains my status in my main communities.

I do agree with your (and Tim Urban’s) observation that “emotional distance” is a thing, and it varies in import among people. I’ve often modeled it (for myself) as an inverse-square relationship about how much emotional investment I have based on informational distance (how often I interact with them), but that’s not quite right. I don’t agree with using this observation to measure altruism or moral judgement.

That makes sense. I feel like that happens to me sometimes as well.

I see. That sounds correct. (And also probably isn’t worth diving into here.)

Gotcha. After posting and discussing in the comments a bit, this is something that I wish I had hit on in the post. That even if “altruism” isn’t quite the right concept, there’s probably some related concept (like “emotional distance”) that maps to what I discussed in the post.