Preface: this blog post was written for a slightly more general audience than LessWrong. You may already be familiar with many of the ideas explored in this post, but I thought some of you might find it interesting anyway.

I’ve been exploring the idea that our conscious minds, including self-awareness and subjective experience, might be a type of computer program.

Of course, the idea that our brains might be some kind of computer is nothing new, but I think we have almost all of the pieces necessary to make sense of that idea. I believe that if consciousness is a type of software, then this could explain why and how consciousness arises from unconscious matter. That is, if consciousness is computable, then it would be theoretically possible for a regular, everyday computer to produce it, and that would tell us where it comes from.

I think consciousness, and our subjective experience of it, may arise because our minds are machines that can model reality. This model includes both our external reality and a picture of ourselves as an agent within it. Our minds make use of an internal language of thought to create a representation of our knowledge and senses, enabling us to plan, think, and interact with the world.

The idea that the representation of information in our minds could give rise to the phenomenological character of consciousness can be traced back to the philosophy of John Locke, who encapsulated this concept as “ideas in the mind, qualities in bodies.” His ideas were an early starting point for what eventually became the Language of Thought Hypothesis, which proposes that thought utilizes a language-like structure.

I hypothesize that embedding spaces — a tool from the field of machine learning — are the mental language used by our minds to represent and operate on information and that the nature of embedding spaces may explain the structure and character of subjective experience.

More on what embedding spaces are later, but first, what would it mean for consciousness to be computable?

On Computation

A Turing machine is an abstract model of a machine that can perform computation, meaning that it can transform input information into outputs. Addition is a simple example of computation; two numbers are combined to produce a sum. Software is just a list of instructions for performing computation, and implementations of Turing machines are what we use to execute these instructions. A problem is computable if it can be solved on a Turing machine in a finite number of steps.
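To make this a little more concrete, here is a minimal sketch of a Turing machine that performs addition. The rule table, the state names, and the choice to write numbers in unary (3 is "111") are my own illustrative assumptions, picked only to keep the machine tiny.

```python
def run_turing_machine(tape, rules, state="scan", blank="_", max_steps=10_000):
    """Run a single-tape Turing machine until it reaches the 'halt' state."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        # Each rule says: what to write, which way to move, and the next state.
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical rule table for unary addition: turn "+" into a "1",
# then erase one "1" from the right end so the total count is correct.
UNARY_ADD = {
    ("scan", "1"): ("1", "R", "scan"),
    ("scan", "+"): ("1", "R", "to_end"),
    ("to_end", "1"): ("1", "R", "to_end"),
    ("to_end", "_"): ("_", "L", "erase"),
    ("erase", "1"): ("_", "R", "halt"),
}

result = run_turing_machine("111+11", UNARY_ADD)  # 3 + 2 in unary
print(result, len(result))
```

The machine itself knows nothing about numbers. It only reads a symbol, writes a symbol, and moves one step, yet the overall effect is addition.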

Smartphones, computers, and tablets are all practical implementations of Turing machines (with finite memory) encountered frequently in everyday life. Despite their ubiquity, they are not the only machines capable of performing computation. People have made computers out of all kinds of things, sometimes just for fun, and sometimes out of things that maybe they shouldn’t.

To name just a few, there are computers made out of DNA, water droplets, redstone, mirrors and light, fluidics, gears and levers, and yes, even quantum physics.

The beautiful insight about Turing machines is that, despite all of the disparate parts and mechanisms that can comprise these machines, they all have the same theoretical capabilities. In principle, a computer program that can run on any one of these machines can run on all of them, given enough time and memory. This property is known as universality and is one of the foundational concepts that make modern computing so versatile.

But wait, you may be asking—how could mere software produce the subjective experience of being alive? It’s easy to imagine crunching numbers in a computer to calculate your credit card balance, but how could something as sterile as math performed by a machine come close to what it feels like to be you?

What About Qualia?

Qualia is the term used to describe the subjective parts of our experiences, such as the redness of red. When light of a particular wavelength reaches our eyes, we experience the color red, but the light itself has nothing to do with redness. Light has a wavelength, but it itself does not have a color. Qualia is a phenomenon that exists only in our minds, and its existence is baffling.

Where could phenomenological consciousness possibly come from? Where, exactly, in my body should I look if I want to find the experience of the fresh smell after rain, or the sense of relief upon arriving home after a long trip, or the exhilaration of winning a race? No one has yet managed to find the wanderlust gyrus in our brains. Where could such strange and unique phenomena possibly be found?

The thought that started me down this current line of inquiry is that we have no way to tell if our experiences are real or not. Our sensory experiences are all we can ever possibly know about the world; there is no way to teleport information about the outside world directly into our minds. Our eyes, ears, and the rest of our senses are all we have, and that’s that. Our experience is necessarily virtual.

So in a sense, we can’t even know if we are real or not. A simulated agent would not know they were simulated. And if you don’t know you’re simulated, you have no way of concluding that your feelings shouldn’t exist because you aren’t real.

The ultimate source of my senses is irrelevant to my personal phenomenological experience. It could be real, it could be a simulation, or it could be a dreaming iguana. That doesn’t change the fact that I have to go to work tomorrow.

So if it doesn’t matter to me whether I’m simulated or not, maybe I could be simulated? That’s something a little more concrete we can start to work with. If consciousness is a simulation, and if this simulation is also computable, well, then—that would really be something. The principle of universality would indicate that this software could run on any computer.

A computer doesn’t have to be conscious, so if software can somehow become conscious, then that could explain how qualia arise in a universe that otherwise seems totally indifferent to it. Unconscious computers could be running conscious programs. The idea that consciousness works the same whether it’s running on your brain or a computer or something else is known as substrate-independence.

How could such experiences possibly be computable? Insights into the inner workings of sophisticated machine learning models, such as Large Language Models (LLMs), might suggest a path forward.

Representing Reality

LLMs encode information using a fascinating concept called embedding spaces. These embedding spaces are mathematical constructs: multi-dimensional spaces where words, phrases, or even entire sentences can be mapped to vectors. These vectors are not merely placeholders; they capture the semantic essence of the information they represent, forming dense, rich clusters of related concepts.

Embedding spaces have many interesting properties. Relationships between vectors in an embedding space are self-consistent in a way that makes a form of conceptual arithmetic possible. For example, I can use an embedding space to convert words into vectors. If I convert the words for “man”, “woman”, and “king” into these embedded vectors, I can fairly reliably find the vector for “queen” by taking the embedding vector for “king”, subtracting “man” from it, and then adding “woman”.
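Here is a minimal sketch of that arithmetic. The three-dimensional vectors below are hand-made toy values I invented for illustration (roughly "royalty", "masculinity", "femininity"). They do not come from any real model, where embeddings have hundreds or thousands of learned dimensions and the analogy only holds approximately.

```python
import math

# Hand-made toy embeddings (an assumption for illustration, not real model output).
TOY_EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.2, 0.2],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, embeddings):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [word for word in embeddings if word not in {a, b, c}]
    return max(candidates, key=lambda word: cosine(target, embeddings[word]))


print(analogy("king", "man", "woman", TOY_EMBEDDINGS))
```

With these toy values the nearest remaining word is "queen", because subtracting "man" removes the masculinity component and adding "woman" puts the femininity component in its place.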

Embedding spaces are constructed as an automatic part of the process of training models like LLMs, and they are the result of the model finding a compact representation that captures the essence of the data it is exposed to. I find it strange that this works at all, but it clearly does. This is a large part of the power behind LLMs like ChatGPT. Their embedding spaces capture relationships between concepts, and these relationships can be manipulated using natural language.

I have a strong suspicion that embedding spaces are very similar to the way humans process information. If this is true, our senses, emotions, and even our assessment of how good or bad a given situation is for us could all be layered on top of each other by projecting them into an embedding space and fusing them into a unified sensory whole.

For a more detailed exploration of how our sensory perception might be constructed, I highly recommend Steve Lehar’s Cartoon Epistemology. For now, I’ll share just one of his illustrations:

Different senses are layered on top of each other to create the combined whole of our experience

I think this is a good visualization of what it means to construct an experience out of many different pieces. Each of these circles represents some aspect of our conscious experience. Let’s expand this metaphor and think of them as TVs for experiencing qualia. We are the observer in our head watching all of these TVs. In the above illustration, the leftmost TV is sight, the next TV is the location of your body, and the third is how you’re planning on moving.

If I change the channel on one of these TVs I get a different experience. So with the vision TV, Channel 1 might be looking up at a redwood tree, Channel 27 might be the view on a tropical beach in the Bahamas, Channel 391 might be an Antarctic cruise, and so on. Every visual experience imaginable has a channel. The same goes for all of our senses.

When I say a vector in an embedding space represents some concept, I’m talking about the channels on these TVs. A vector is just a list of numbers, so you can think of this sequence of numbers as instructions for what channel to set all of your qualia TVs to. So experience [4, 12, 2] might be a scene from my 11th birthday party, and [34, 1, 5] might be a bite from my last meal.

Let’s examine the dial on the qualia TV a little closer to get a better idea of what’s going on there.

This isn’t a bad representation of what is going on in an embedding space. A vector can be thought of as a direction, so we can think of the direction the dial is pointing as directly corresponding to the direction the vector is pointing in the embedding space. Every direction in an embedding space corresponds to a different experience. If we look long enough we can find a direction in the embedding space that corresponds to any experience we can imagine.

See this post if you would like a more detailed examination of how LLMs use embedding spaces to encode information.

Let’s walk through some more examples of how embedding spaces could be used to represent qualia.

I don’t experience all of my senses in serial. If I’m enjoying a concert I don’t check my vision TV for a bit and then turn to my hearing TV to have a listen. I experience my qualia TVs all at once simultaneously, layered on top of each other. These layers combine to form a gestalt experience—a complete fusion of many disparate experiences into a single entity. This kind of fusion of many different seemingly unrelated concepts is exactly what embedding spaces do. Through the magic of linear algebra, any number of concepts can be layered on top of each other.

When I smell a fresh batch of cookies, my nose sets my scent TV to just the right channel for how strong the scent of the cookies is, what kind of cookies they are, whether or not they were burned, and so on. Most of the time we don’t have voluntary control over the dials on our qualia TVs. However, there are a few exceptions.

When I remember my 11th birthday party I’m looking up memory [4, 12, 2] from before. All I would need to do to re-experience it is set all the dials on the appropriate TVs. However, my memory isn’t perfect, so I might misremember a number or two and get a memory that is slightly different from the real event.

When I recall that memory, it’s fighting over the channels on the TV with my real senses, and the result is something like a channel that is 5% my birthday memory and 95% my actual senses. This is why my memory manifests as a ghostly impression of that experience. I can probe certain aspects of what that experience was like, but I can’t quite fully experience it again.

Dreams are another story. While I’m asleep most of my real senses are almost entirely numb, so these ghostly impressions can make much more of an impact because they aren’t competing for my attention with my real senses. Sometimes these impressions can feel as real as my waking senses. On rare occasions, I am able to dream lucidly, and I manage to find the remotes for at least a few of my qualia TVs and change them to whatever channel I want to experience. Usually, this is so exciting that it immediately causes me to wake up.

I imagine certain psychoactive substances do something like taking a magnet to a qualia TV or changing the channel. The normal display is altered by an outside force, and the result is strange and unusual and otherwise outside the reach of normal experience. These qualia TVs also cover much more than just our raw senses. In fact, they cover just about every aspect of our awareness and understanding of the world. If we flip through the channels we’ll find channels for concepts like melancholy or triumph or anything else. Having your visual TV tuned to one of these channels would be very strange indeed.

I imagine that during childhood development, I developed my own personal set of qualia TVs the same way an LLM learns an embedding space to represent its experiences. My daily experiences also make changes to these qualia TVs to keep them tuned and up to date. If I learn a new concept it gets added to my personal embedding space, which then automatically relates it to every other concept I already know.

Since my personal embedding space is constructed using my own experiences, my qualia TVs probably all show something slightly different than everyone else’s. My channel for the color blue is probably not the same as yours. Some people have damaged TVs that prevent them from experiencing a particular sense, as when brain damage interferes with vision.

As a quick aside, a recent study was able to use AI to reconstruct what participants were seeing by reading their brain scans. To make this work the authors had to create a specific mapping for each individual study participant between the embedding space used by their brain and the embedding space used by the machine learning model. The resulting reconstructions accurately matched the semantic content and layout of the original images.

As you can see, it’s far from perfect, but still, plucking concepts and imagery straight out of someone’s brain is quite a feat. To me, this suggests that there is at least some correspondence between the way human brains and machine learning models represent information. It also suggests that individual human brains represent the same information in different ways.

When we communicate ideas to each other, we take a concept from our own embedding space, convert it into speech, and then the listener decodes the speech and encodes it into their own personal and slightly different embedding space—a lossy and approximate process. We can gesture at an idea, but we can never truly make someone experience it exactly the way we do.

Language itself is an embedding space. Words are used to encode concepts, and these concepts can be used as conceptual building blocks that we can recombine to produce new concepts. Language also clearly illustrates the subtle differences that can exist between similar embedding spaces. These days it’s easy to translate between different languages, but the exact connotations in each version are slightly different, so some concepts are more difficult to translate or are better represented in one language than in another.

But what is an embedding space doing exactly? How do we get from the raw light signals received from our eyes to a visual field that accurately represents the nature of our surroundings?

Seeing Data

I propose that our minds utilize embedding spaces to effectively project raw data into a form akin to a holographic simulation of our environment. Let’s use an image file as an analogy.

The data in an image file is just a list of numbers, such as these. These numbers fully encapsulate the information content of the image but in a much less accessible format. You might be able to study the numbers in an image file long enough to get a sense of what kind of image it captures, but it would be tedious, difficult, and slow.

Instead, what we need to do is project this data into a different form to make it more accessible. When we assemble the numbers in an image file into an actual image, what we’re doing is rearranging it into a new representation that is better suited to the task at hand: interpreting what the data means. Let’s walk through this step by step.

To start with, we can rearrange this long 1D list of numbers into 2D. Now the numbers we’re examining are all in the position that roughly corresponds to the actual position of that color in the image. The numbers in the top left correspond to the pixels in the top left of the image, and the numbers in the bottom right correspond to the pixels in the bottom right of the image.

This is already much easier to interpret! Now we can understand some broad features of the image at a glance. For example, we can at least tell there’s some kind of shape in the middle of the image. If we studied it harder we might even be able to tell there are in fact two shapes there.

But why stop there? The current shape of the image is skewed because we’re currently representing the color value of each pixel in the image with three numbers corresponding to the red, green, and blue components of the pixel. If we combine these three numbers to create a color and then draw a little colored square in the corresponding location of the image, what we get is the fully reconstructed image!

All three of these representations are isomorphic—they all represent the exact same information. However, one of these representations is much more useful for interpreting the data. Instead of having to study an image number by number, we can just look at the image and take in the whole thing all at once.
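The three representations can be sketched in a few lines. The tiny 2x2 image and the helper names here are made up for illustration. The point is only that each step rearranges the same numbers, and that the process can be run backwards without losing anything.

```python
def to_rows(flat, width, channels=3):
    """Step 1: cut the 1D list into rows, one per line of the image."""
    row_length = width * channels
    return [flat[i:i + row_length] for i in range(0, len(flat), row_length)]


def to_pixels(rows, channels=3):
    """Step 2: group every three numbers in a row into one (R, G, B) pixel."""
    return [
        [tuple(row[i:i + channels]) for i in range(0, len(row), channels)]
        for row in rows
    ]


def flatten(pixels):
    """Undo both steps, recovering the original 1D list."""
    return [value for row in pixels for pixel in row for value in pixel]


# A hypothetical 2x2 image: red, green on top; blue, white on the bottom.
flat = [255, 0, 0, 0, 255, 0, 0, 0, 255, 255, 255, 255]

rows = to_rows(flat, width=2)
pixels = to_pixels(rows)

print(rows)
print(pixels)
print(flatten(pixels) == flat)  # the round trip loses no information
```

Nothing is added or removed at any step. The only thing that changes is how easy the data is to take in at a glance.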

I think this is exactly what qualia is. It’s what happens when you can see data, and this is accomplished by projecting it into a format you can intuitively understand and then taking it in all at once.

But what kind of transformation is this projection applying? What does it look like? Let’s think about it for a minute. Certain representations of information can compress the salient features in a way that makes interpreting the information much more straightforward. If I wanted to create an accurate representation of reality using raw sensory data, what shape should I project it into?

Why not employ a tiny scale model of reality? Given that the goal is to comprehend the outside world, it seems intuitively appropriate to represent the data in the form of a model that mirrors reality.

Simulating Experience

How does this translate to our subjective experience? I suspect that your raw sensory data is projected into this scale model to create something like a hologram that looks and feels just like reality. Throughout the rest of this post, I will refer to this as a qualia hologram.

You—as in you you, as in the entity having the experience of reading this blog post and not the animatronic meat robot carrying you around inside its skull—are a part of this scale model. You are the tiny ethereal homunculus at the center of this model, and you serve as the film onto which this information is projected.

This is the source of the subjective part of your experience; you are the observer that revels in the deep reds of sunsets, inhales the salt of the ocean, feels the softness and grit of sand under your feet and between your toes, and is soothed by the crashing of the waves. These things are not just numbers to you. They are real experiences that communicate an enormous amount of information about the outside world in an extremely concise manner.

With this simulation, you can experience an astonishingly good representation of reality. It’s so seamless that it usually feels like we are experiencing the outside world directly, and the fact that it is actually just a representation of reality is quite unintuitive. The fact is, though, it is not possible for any observer to directly observe reality. Our senses are all we have to make sense of the world, but that’s enough for us to do a pretty bang-up job, most of the time.

The limitations of this simulation are most apparent when we encounter illusions that can fool our model of reality. The rubber hand illusion is a technique where a participant can be tricked into believing a rubber hand is their real hand. This is accomplished by hiding the participant’s real hand from view and then touching it and the rubber hand at the same time. The combined visual and tactile evidence provided by watching the rubber hand is eventually strong enough to overcome the doubts caused by their other senses, and the participant can come to feel like the rubber hand is their real hand.

Illusions like these are the exception that proves the rule though, and most of the time our experience is nearly flawless. How do our minds accomplish such a thing? For that, we will need a more complete picture of how these computations are carried out.

A Computational Model of Consciousness

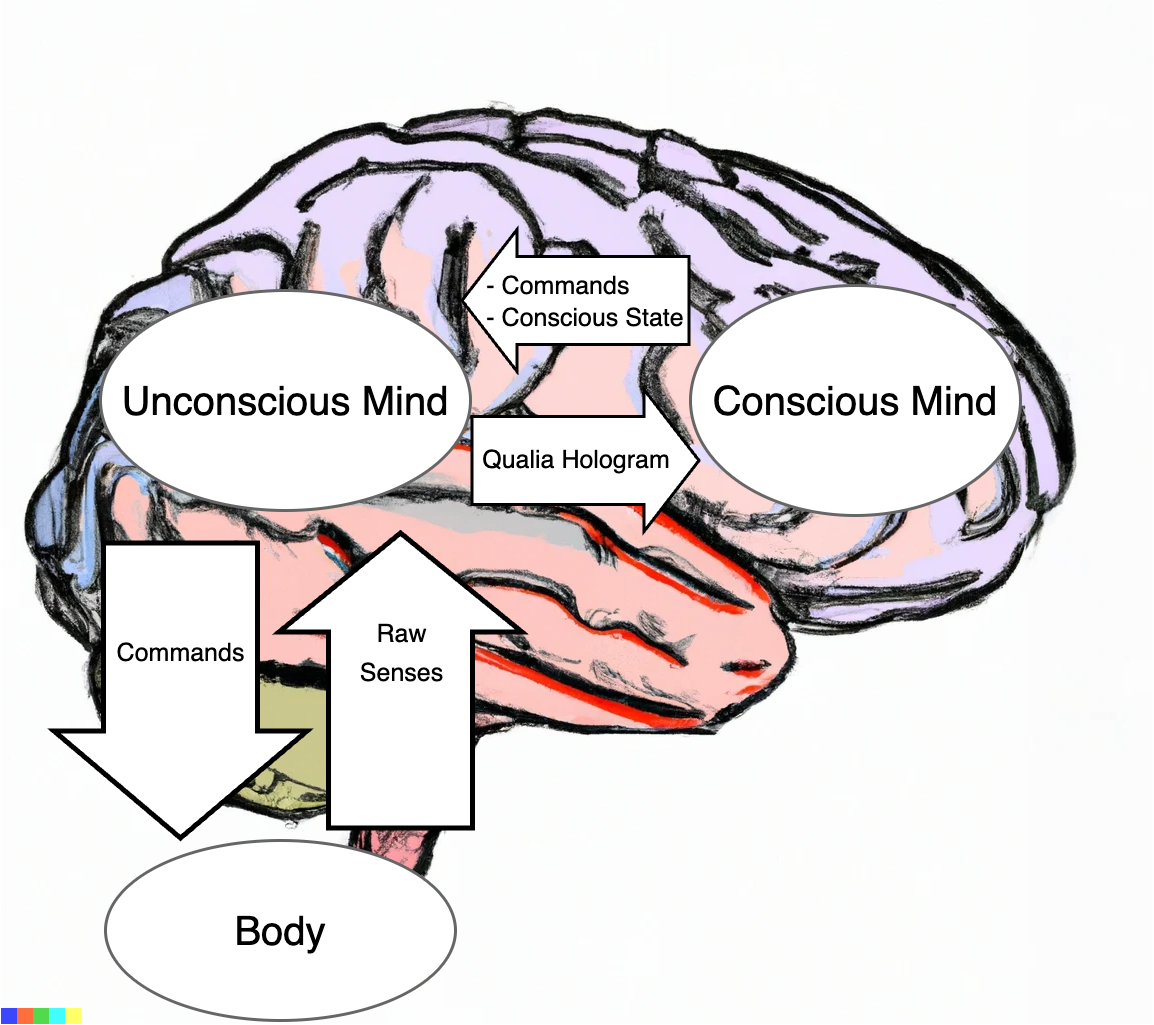

I don’t claim to know exactly how the human brain and its consciousness are put together in reality, but I think I can see how a computational model of consciousness could be constructed. I think the first step is to split the mind into two different machines—the conscious and the unconscious.

The unconscious mind has several roles, the first of which is to construct a qualia hologram by using an embedding space to project raw sense data. This data structure encodes all of our subjective knowledge about the state of the world, including ourselves. The use of an embedding space makes it so that any piece of data we add to this model can automatically be related to any and every other piece of data in the model.

The second role of the unconscious mind is to take higher-level commands from the conscious mind (e.g. “Raise your right hand”) and convert these into the explicit steps necessary to control our bodies. This definition of the unconscious mind is straightforward to model on a Turing machine; it performs a relatively fixed set of transformation operations on its input data to enrich it and represent it in a useful way. There are likely other roles the unconscious mind plays, but I think they can be modeled in a similar manner.

I think the conscious mind might be a fully fledged universal Turing machine, meaning that it can be used to simulate any other piece of software. It might not seem like a Turing machine at first glance, but let’s walk through how we can model it as one. Our short-term memory serves as our scratchpad for performing arbitrary computations. Instead of operating on 0s and 1s like a traditional computer, it operates on embeddings. It receives the qualia hologram generated by the unconscious mind as input.

As we have previously established, embeddings are a form of language and can be used to encode and manipulate any concept. Each operation our conscious mind performs is a form of embedding arithmetic. For example, information can be pulled from the qualia hologram and stored in short-term memory, and can then be compared to other embeddings to calculate information about them.

Let’s see how this would work with some examples. It is often said that we can hold about seven things at a time in our short-term memory. Chunking is an observation from psychology that notes it is much easier for us to remember information if we can group it into chunks.

For example, a long string of numbers, such as 258,921,089,041, is very difficult to remember if we try to recall each individual digit. This number has 12 digits, so it probably exceeds our short-term memory capacity if we represent each digit individually. However, if we remember the number in chunks, say by breaking it up on the commas (258 921 089 041), it becomes much easier to remember because now we only have four pieces of information to remember. In my quick one-minute self-test of this, I got maybe 25% of the digits right when I tried to remember the whole number and around 80% of them right when I used chunking.

I think chunking involves creating a combined embedding from a subset of the data. For example, the vectors for 2, 5, and 8 can be combined into a single vector, and this vector is then stored in short-term memory.

But wait, you might be asking, embedding spaces can encode any number of concepts in a single embedding. Why can’t we just shove all of the digits into a single embedding? The reason is that our embeddings have a finite resolution. They can represent many concepts at once, but the more concepts you pack into a single embedding the more difficult it is to pull the individual components back out again when you need them. Beyond a certain number of concepts you probably would not be able to pull any of the original concepts out and would be left with a vague amalgamation of all of them.
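This finite-resolution effect can be sketched with a toy experiment. I am assuming here that concepts are random vectors of +1s and -1s, that "packing" means adding vectors together, and that "pulling a concept back out" means checking which known concepts line up best with the sum. Those are simplifications of mine, not a claim about how brains or LLMs actually do it.

```python
import random


def random_vector(dim, rng):
    """A random concept vector made of +1s and -1s."""
    return [rng.choice((-1, 1)) for _ in range(dim)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def recall_after_packing(k, dim=64, vocab_size=100, trials=20, seed=0):
    """Pack k concept vectors into one sum, then try to recover them.

    Returns the average fraction of the packed concepts that were recovered.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        codebook = [random_vector(dim, rng) for _ in range(vocab_size)]
        packed = rng.sample(range(vocab_size), k)
        # Superimpose the chosen concepts by adding them element-wise.
        combined = [sum(values) for values in zip(*(codebook[i] for i in packed))]
        # Guess the k concepts whose vectors align best with the combined one.
        ranked = sorted(
            range(vocab_size),
            key=lambda i: dot(combined, codebook[i]),
            reverse=True,
        )
        guessed = set(ranked[:k])
        total += len(guessed & set(packed)) / k
    return total / trials


for k in (2, 7, 40):
    print(k, round(recall_after_packing(k), 2))
```

In this toy setup a handful of concepts can be read back out almost perfectly, while a large pile of them blurs together. Making the vectors longer (a larger `dim`) raises the limit, which is one way to think about the resolution of an embedding.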

Let’s try a broader example—cooking. To cook a meal you take some inputs (the raw ingredients), and you perform a sequence of operations on them (chopping, boiling, etc.) to transform them into dinner. When I cook, I usually need an explicit list of instructions to follow; in this case, a recipe is just a type of program.

The first step in cooking is to check the list of ingredients. My conscious mind would read in the text for the list of ingredients from its qualia hologram, and store these in my short-term memory. If the list is too long to fit them all, I might have to do this in steps where I remember which ingredient I’m currently on, and then load them into short-term memory one at a time.

Confirming I have the proper ingredients is a straightforward operation using embedding operations. I can open my refrigerator and start comparing the embeddings of objects in my visual field to the embedding for the ingredient I’m looking for. Once I find something close enough, I can check it off the list and move on to the next ingredient.

If I find I am missing an ingredient, I might have to start improvising. I can project a simulation of what the meal would be like without this ingredient. If it’s a critical component, and the quality of the meal would suffer too much without it, then I might have to adjust my plans and try something else. Otherwise, I can check my refrigerator for a substitute ingredient using the same process from before, except this time I am looking for very similar embeddings, rather than nearly exact matches.
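A minimal sketch of this search, assuming each object and ingredient already has an embedding. The vectors, item names, and thresholds below are all invented for illustration. The only difference between looking for the ingredient itself and looking for a substitute is how strict the similarity threshold is.

```python
import math


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def best_match(target, items, threshold):
    """Return the most similar item name, or None if nothing is close enough."""
    name, score = max(
        ((item, cosine(target, vector)) for item, vector in items.items()),
        key=lambda pair: pair[1],
    )
    return name if score >= threshold else None


# Hypothetical embeddings for what is in the fridge and what the recipe wants.
FRIDGE = {
    "butter": [0.9, 0.1, 0.0],
    "milk":   [0.2, 0.9, 0.1],
    "carrot": [0.0, 0.1, 0.9],
}
RECIPE = {
    "butter": [0.9, 0.1, 0.0],
    "cream":  [0.5, 0.8, 0.1],
}

STRICT, LOOSE = 0.99, 0.90  # assumed thresholds: near-exact match vs. substitute

print(best_match(RECIPE["butter"], FRIDGE, STRICT))  # the ingredient itself
print(best_match(RECIPE["cream"], FRIDGE, STRICT))   # nothing close enough
print(best_match(RECIPE["cream"], FRIDGE, LOOSE))    # the nearest substitute
```

With these toy numbers there is no cream in the fridge, so the strict search comes back empty, and the looser search settles on milk as the closest thing available.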

When it comes time to actually cook the meal, I am back to following a mostly predefined sequence of operations. When I read that I need to chop the onions, I can use embeddings to envision a plan in my mind where I hold an onion with one hand and cut it with a knife using the other. This plan is communicated back down to my unconscious mind, which figures out the right sequence of motor operations to carry it out.

A key part of this process is that it is goal driven. I am envisioning in my mind what I want the finished product to be like; while I am working on it, I can check my progress to see if I’m on track to meet my goal. My qualia hologram fully encodes the details of the taste of the dish, and I can compare this to an embedding from my memory of what I expect this meal to taste like. If it’s way off I might conclude that I need to revisit the instructions to ensure I didn’t skip a step. If it’s close I might conclude that it just needs more salt to bring it closer to my goal. The ability to simulate potential future outcomes is crucial for our ability to search for and construct subgoals that bring us closer to our final goals.

If the meal turns out well I can positively reinforce the actions I took to produce it, making it more likely that I can repeat the feat in the future. If the meal is a bust I can maybe at least glean what not to do next time around, and so negatively reinforce the actions I took this time around. In either case, I can learn something from the experience and try to improve my behavior for the next time.

Each of these steps can be broken down into simple atomic operations. These include reading embeddings from our qualia hologram, comparing multiple embeddings to each other using our short-term memory, sending output commands to be processed by the unconscious mind, and so on. In other words, each of these steps is computable, and can therefore be modeled by a Turing machine.

I think this is where the adaptability and robustness of intelligent life come from. Our conscious minds can learn, stumble into, or invent new programs to create new concepts or algorithms, and these can be refined to construct more and more sophisticated versions of each.

Self Representation

I think there’s one more piece of this puzzle that merits mentioning. One of the most mysterious aspects of our consciousness is our self-awareness. This seems to be a truly unique and important capability that we possess, although some particularly clever animals like ravens also seem to have similar (albeit perhaps lesser) capabilities. In any case, it still seems pretty miraculous. If our minds are truly nothing more than software, how could software become self-aware?

I think self-awareness might arise as the result of information looping back and forth between the conscious and unconscious mind. The conscious mind might output some or all available information about its own state to the unconscious mind. As we previously covered, I believe the conscious mind could be sending things like high-level commands back down to the unconscious mind, but this information transfer might also include some of the details of its own inner workings.

The unconscious mind could then take this information and process it the same way it processes all sensory information: by projecting it into a qualia hologram. Then, when the qualia hologram gets passed up to the conscious mind, the mind would have the ability to inspect itself in the information contained within.

In a sense, this would enable our consciousness to perceive itself like a reflection in a mirror. This self-knowledge could then be used to influence future decision-making, which would then be reflected in the next qualia hologram, ad infinitum.

I wouldn’t say I have strong evidence that this is what is happening, but I do have an observation to share that I think is interesting. In the 1980s, Benjamin Libet’s experiments found that neural activity related to an action precedes our conscious awareness of the decision to perform it. In these experiments, test subjects were asked to move their finger, and to note the exact moment they became aware of their decision to carry out that action.

This is the clock Libet’s participants used to mark the moment they became consciously aware of moving their fingers.

He found that neural activity related to the finger motion began to rise a few hundred milliseconds before participants reported conscious awareness of the decision, which itself came about 200ms before the movement. Many people have taken this as evidence that free will is an illusion and that our actions are made unconsciously without any input from our “conscious will”.

I think I might be able to make sense of what is happening here. As Wittgenstein observed, we cannot know the result of a mental calculation before it is complete. Otherwise, we would not have to perform the calculation to get the result.

Our consciousness is a process for calculating what our next thought will be. So, in a similar way, we cannot know the result of that calculation until it is completed either. But where does the conscious mind’s self-knowledge come from? If it comes from its own reflection in the qualia hologram generated by the unconscious mind, then our conscious mind cannot know what it has already thought until that thought is reflected back to it from the unconscious mind.

In other words, when I hear myself thinking in my head, what I am hearing is not the direct result of my conscious thoughts themselves. What I am hearing is the echo of my thoughts bouncing off of my unconscious mind.

Libet noted that, although neurological activity preceded conscious awareness, there was still a short window of time in which the conscious mind could veto the action to prevent it from happening. I think what’s happening here is that the conscious mind makes a decision to move its finger and sends down a message to the unconscious mind to carry it out. The next time the qualia hologram is generated, the conscious mind becomes aware of its decision and can reflect on it. If it immediately decides to not move a finger after all, it can send that updated command back down to the unconscious mind to cancel it before it can be carried out.
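The veto loop described above can be sketched as a toy simulation. This is only an illustration of the proposed timing, not a model of real neural activity: the tick-based clock, the `motor_delay` parameter, and every name in the code are assumptions made up for the example.

```python
def simulate(change_of_mind, motor_delay=2):
    """Toy timeline of the conscious/unconscious loop (all names hypothetical)."""
    log = []
    pending = None    # command currently held by the unconscious mind
    hologram = None   # last snapshot passed back up to the conscious mind

    for tick in range(motor_delay + 2):
        # Conscious step: it learns of its own decision only via the hologram.
        if tick == 0:
            pending = {"action": "move finger", "ticks_left": motor_delay}
            log.append(f"t{tick}: conscious sends 'move finger'")
        elif hologram is not None and pending is not None and change_of_mind:
            log.append(f"t{tick}: conscious sees '{hologram}' and vetoes")
            pending = None

        # Unconscious step: count down, then act unless the command was cancelled.
        if pending is not None:
            if pending["ticks_left"] == 0:
                log.append(f"t{tick}: finger moves")
                pending = None
            else:
                pending["ticks_left"] -= 1

        # Render the next hologram, which reflects any command still in flight.
        hologram = pending["action"] if pending is not None else None

    return log


for line in simulate(change_of_mind=False):
    print(line)
for line in simulate(change_of_mind=True):
    print(line)
```

In the first run the command goes through and the finger moves. In the second, the conscious mind sees its own decision reflected back one tick later and cancels it before the motor delay runs out.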

This looping process of self-awareness could potentially be understood as what cognitive scientist Douglas Hofstadter describes as a “strange loop”. A strange loop occurs when we unexpectedly find ourselves back at the starting point while moving through the levels of some hierarchical system. In our case, the conscious mind sends information to the unconscious mind, which is then reflected in the qualia hologram and sent back up to the conscious mind for further reflection and modification. This looping back onto itself, this self-referential recursion of our consciousness, forms a strange loop leading to self-awareness.

Homo ex Machina

We now have nearly all of the pieces necessary to explain how and why consciousness arises. I postulate that the machinery of our minds uses embedding spaces to create a representation of reality that includes us experiencing reality. Our conscious mind itself is a type of Turing machine that processes our experiences by performing operations on embeddings, and our self-awareness results from the conscious mind seeing its reflection in our internal world model.

It does not matter to us that this experience is merely the result of electrical signals, chemical reactions, and so on. It is very real to us. All we have to go on is our own sensory information. Our senses are, in effect, our entire internal universe, and so our subjective experience is completely real to us. The details of what the underlying machinery is doing do not make a difference for our subjective experience because we cannot directly experience it.

This has some extremely unintuitive implications. If our experiences are purely an informational process, then the medium for processing this information may indeed be utterly irrelevant. I have grappled with this dilemma for a long time and all of my previous attempts to explain consciousness and qualia as computational processes have run aground when I reached this test in particular.

This comic strip from XKCD presents a thought experiment, wherein an entire universe can be simulated through the act of manipulating stones in a desert. What the author says about the equivalence of running a computer using electricity and running a computer with rocks is true. Turing machines will behave the same way whether they are made of rocks, silicon, or meat.
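The substrate-independence claim can be made concrete with a small sketch. The same transition table is run on two different "tapes": one stores symbols as characters in a list, the other as rows of pebbles. The machine (a hypothetical bit-flipper), the pebble encoding, and all class names are assumptions chosen for illustration.

```python
# Transition table: (state, symbol) -> (symbol to write, head move, next state).
# This toy machine flips every bit and halts at the first blank ("_").
RULES = {
    ("scan", "0"): ("1", +1, "scan"),
    ("scan", "1"): ("0", +1, "scan"),
    ("scan", "_"): ("_", 0, "halt"),
}


class ListTape:
    """'Silicon' substrate: each cell is a character in a Python list."""
    def __init__(self, bits):
        self.cells = list(bits)

    def read(self, i):
        return self.cells[i] if i < len(self.cells) else "_"

    def write(self, i, symbol):
        if i < len(self.cells):
            self.cells[i] = symbol

    def dump(self):
        return "".join(self.cells)


class RockTape:
    """'Rock' substrate: one pebble in a row means 0, two pebbles mean 1."""
    def __init__(self, bits):
        self.rows = ["o" * (int(b) + 1) for b in bits]

    def read(self, i):
        return str(len(self.rows[i]) - 1) if i < len(self.rows) else "_"

    def write(self, i, symbol):
        if i < len(self.rows):
            self.rows[i] = "o" * (int(symbol) + 1)

    def dump(self):
        return "".join(str(len(row) - 1) for row in self.rows)


def run(tape):
    """Run the machine; it never needs to know what the tape is made of."""
    state, head = "scan", 0
    while state != "halt":
        write, move, state = RULES[(state, tape.read(head))]
        tape.write(head, write)
        head += move
    return tape.dump()


print(run(ListTape("1011")))  # 0100
print(run(RockTape("1011")))  # 0100
```

The `run` function only ever calls `read` and `write`, so the computation is identical whichever substrate sits underneath it.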

Part of the punchline is that a rock computer could, in principle at least, be simulating the entire universe, including our subjective experience of it. This has always seemed preposterous on its face, but I have never been able to find a reason why this would not be the case if we assume that the evolution of the universe is indeed computable.

Now with a fresh perspective on this dilemma, I feel at least slightly less perturbed than before. I think a consciousness simulated on a rock computer would actually be conscious and have subjective experiences. This still sounds ludicrous, but now I think I can see in what sense this would actually be true. A simulated experience is real because its informational underpinnings are real.

What does this mean? For starters, all of this theorizing rests on the unproven premise that consciousness is computable. If it turns out that consciousness makes use of a non-computable process, then this all goes out the window. However, if consciousness truly is computable, it can be simulated. If it can be simulated, a simulated person would truly experience it from their perspective.

Unfeeling, unthinking matter would then in fact be capable of giving rise to the rich internal experiences of our daily lives. Our neuronal substrate might be as insensate as any other computer, but the software running on it would actually experience qualia. Our consciousness, then, is a delicate and ephemeral thing of pure information, dancing like lightning over the folds of our brains, wholly dependent on but wholly separate from the underlying computational machinery.
