Parameter Scaling Comes for RL, Maybe

TLDR

Unlike language models or image classifiers, past reinforcement learning models did not reliably get better as they got bigger. Two DeepMind RL papers published in January 2023 nevertheless show that with the right techniques, scaling up RL model parameters can increase both total reward and sample-efficiency of RL agents—and by a lot. Return-to-scale has been key for rendering language models powerful and economically valuable; it might also be key for RL, although many important questions remain unanswered.

Intro

Reinforcement learning models often have very few parameters compared to language and image models.

The Vision Transformer has 2 billion parameters. GPT-3 has 175 billion. The slimmer Chinchilla, trained in accord with scaling laws emphasizing bigger datasets, has 70 billion.

By contrast, until a month ago, the largest mostly-RL models I knew of were the agents for Starcraft and Dota2, AlphaStar and OpenAI5, which had 139 million and 158 million parameters. And most RL models are far smaller, coming in well under 50 million parameters.

The reason RL hasn’t scaled up the size of its models is simple—doing so generally hasn’t made them better.

Increasing model size in RL can even hurt performance. MuZero Reanalyze gets worse on some tasks as you scale network size. So does a vanilla SAC agent.

There has been good evidence for scaling model size in somewhat… non-central examples of RL. For instance, offline RL agents trained from expert examples, such as DeepMind’s 1.2-billion parameter Gato or Multi-Game Decision Transformers, clearly get better with scale. Similarly, RL from human feedback on language models generally shows that larger LM’s are better. Hybrid systems such as PaLM SayCan benefit from larger language models. But all these cases sidestep problems central to RL—they have no need to balance exploration and exploitation in seeking reward.

In the typical RL setting—there has generally been little scaling and little evidence for the efficacy of scaling. (Although there has not been no evidence.)

None of the above means that the compute spent on RL models is small or that compute scaling does nothing for them. AlphaStar used only a little less compute than GPT-3, and AlphaGo Zero used more, because both of them trained on an enormous number of games. Additional compute predictably improves performance of RL agents. But, rather than getting a bigger brain, almost all RL algorithms spend this compute by (1) training on an enormous number of games (2) or (if concerned with sample-efficiency) by revisiting the games that they’ve played an enormous number of times.

So for a while RL has lacked:

(1) The ability to scale up model size to reliably improve performance.

(2) (Even supposing the above were around) Any theory like the language-model scaling laws which would let you figure out how to allocate compute between model size / longer training.

My intuition is that the lack of (1), and to a lesser degree the lack of (2), is evidence that no one has stumbled on the “right way” to do RL or RL-like problems. It’s like language modeling when it only had LSTMS and no Transformers, before the frighteningly straight lines in log-log charts appeared.

In the last month, though, two RL papers came out with interesting scaling charts, each showing strong gains to parameter scaling. Both were (somewhat unsurprisingly) from DeepMind. This is the kind of thing that leads me to think “Huh, this might be an important link in the chain that brings about AGI.”

The first paper is “Mastering Diverse Domains Through World Models”, which names its agent DreamerV3. The second is “Human-Timescale Adaptation in an Open-Ended Task Space”, which names its agent Adaptive Agent, or AdA.

Note that both papers are the product of relatively long-running research projects.

The first is more cursory in its treatment of scaling. Which is not a criticism—the first has 4 authors and the latter more than 20; it looks like the latter also used much more compute; and certainly neither are pure scaling papers, although both mention scaling in the abstract. Even so, the first paper was enough that I was already thinking about its implications for scaling when the second came out, which is why I’m mashing them both together into a single big summary.

So: I’m going to give a short and lossily-compressed explanation of each paper and of general non-scaling results; these explanations will be very brief, and unavoidably ignore cool, intriguing, and significant things. And then I’m going to turn to what they tell us about RL scaling.

DreamerV3: “Mastering Diverse Domains through World Models”

As the name indicates, DreamerV3 is the 3rd version of the Dreamer model-based reinforcement learning algorithm, which started with V1 in 2019 and continued with V2 in 2020. A stated central goal of the paper is to push RL towards working on a wide range of tasks without task-specific tuning—that is, to make it work robustly.

This matters because RL is notoriously finicky and hard to get working, such that it can take weeks for even an experienced ML practitioner to get a simple RL algo working. A RL algorithm which “just works” in the way a transformer (mostly) “just works” would be a big deal.

How does Dreamer V3 Work?

DreamerV3, like V2 and V1, consists of three neural networks: the world model, the critic / state evaluator, and the actor / policy. Each of these networks stands in pretty much the same relation to each other across all three papers.

1. The World Model: The world model lets Dreamer think of the current state, as abstracted from current and past observations; and also lets it imagine future states contingent upon different actions. It’s learned via reconstructive loss from the observations and reward—the state at time t must hold information which helps inform you of the observations and rewards at time t, and so on through t + 1.

The above paragraph simplifies the story excessively. The model has both stochastic and deterministic elements to it; v2 added a discrete element to the continous representations. V3 adds several things on top of that. For instance, at different points it maps rewards and observations into a synthetic log-space, symmetric between negative and positive values, so it can handle extreme values better.

Note that the world model has ~ 90% of the total parameters in the agent.

2. The Critic: The critic returns how good a particular state is relative the agent’s current policy. That is, it returns an estimate of how much total time-discounted reward the model expects from that state.

It’s learned via consistency with itself and the world model through standard Bellman equations. (It is learned in a manner at least somewhat similar to how EfficientZero learns to estimate value.)

3. The Actor: The actor is what actually maps from states to a distribution of actions aimed at maximizing reward—it’s the part that actually does things. The actor is trained simply by propagating gradients of the value estimates back through the dynamics of the world model to maximize future value.

That last point is key to all of the Dreamer papers, so let me contrast it with something entirely different. MuZero-like agents train the actor by conducting a tree search over different actions leading to different possible future states. In such a search, the world model lets you imagine different future states, while the value function / critic lets you evaluate how good they are; you can then adjust the actor to agree with the tree search about which action is the best.

By contrast, Dreamer conducts no tree search. Every future imagined state is a result of the world model, and the value of that future imagined state is a result of the critic, and which future imagined state occurs is a result of the actor—and importantly every one of these elements is differentiable. So you can use analytically calculated gradients through the world model to update the actor in the direction of maximum future value. See the google blogpost on the original Dreamer for more introductory information.

Results

The DreamerV3 paper (p19) says that its hyperparameters were tuned on 2 benchmarks, and then deployed the other benchmarks without modification to verify their generality. The algorithm gets a state-of-the-art score on 3 of these new benchmarks out-of-the-box, as well as good scores on the others. This is quite robust for an RL agent.

Note that these environments include:

-

The Atari 100k testbed, where EfficientZero still holds the state of the art. Nevertheless, DreamerV3 beats every other paper but E0 at this benchmark—while, the paper points out, using < 50% as many GPU-days as EfficientZero.

-

The Crafter benchmark, an 2d tile-based open world survival game that involves foraging for food, avoiding monsters, and general long term planning. DreamerV3 gets state-of-the-art on this. (Video not of DreamerV3.)

-

Behaviour Suite for Reinforcement Learning, a collection of small environments designed to test generalization, exploration, and long-term credit assignment in a variety of contexts. DreamerV3 gets state-of-the-art on this.

-

DMLab, a first-person game of 3d navigation and planning.

Minecraft, where DreamerV3 is the first agent to make a diamond from scratch, without using the literal years of pretraining that OpenAI’s VPT (2022) used to accomplish the same task. (Although, notably, they did increase the speed at which blocks break.)

Overall, this seems like an extremely robust agent, per what the paper promises.

Scaling

As noted in the introduction, RL has generally scaled in the past through training on more data or through revisiting old episodes.

DreamerV3 also can scale through revisiting episodes. The following chart shows agent reward against number of episode steps; the “training ratio” is the ratio of the number of times the algorithm revisits each step in memory over each actual step in the environment. Note the logarithmic x-axis:

Algorithms need to be robust to off-policy data in order to benefit from training on old data from when the algorithm was stupider. This is excellent behavior, but not particularly novel; you can find similar ratios of training in other works.

But DreamerV3 can also scale by increasing model size. In the following, the smallest model has 8 million parameters—the largest has 200 million. Note the non-logarithmic and differently-sized x-axis, relative to the other image:

You can see that larger models both learn faster and asymptote at higher levels of reward, which is significant.

Sadly, this is the only parameter scaling chart in the paper. This makes sense; the paper alludes to lack of compute, and is quite focused on developing an algorithm that researchers can use easily, which means throwing moon-sized supercomputers at ablation studies is rather out-of-scope.

But it leaves you with so many questions! Are Breakout and MsPacman typical, or do other Atari environments scale better or worse? It looks like parameter scaling is more important / works better on MsPacman than in Breakout; I’d guess MsPacman is more complex than Breakout, so is parameter scaling more important for complex environments in general?

It looks (?!?) like parameter scaling lets MsPacman reach absolute levels of performance that are impossible through training ratio scaling alone, but the y-axes in the charts are scaled differently and it’s hard for me to say. And, granted that both revisiting episodes many times and scaling model parameters improves performance, which is the most efficient way to spend compute?

You can know that parameter scaling in DreamerV3 works, and works pretty well, but not much past that.

Adaptive Agent: “Human-Timescale Adaptation in an Open-Ended Task Space”

This research project trains agents that can accomplish previously-unseen tasks after adapting to them through a handful of trials—thus, learning in the same timescale as a human. The explicit goal is to work towards an “RL foundation model”, which can quickly adapt to a previously unseen RL task just like GPT-3 can quickly adapt to an previously unseen text task without changing its weights. So, like GPT-3, a transformer forms the backbone of the model.

How Does Adaptive Agent Work?

The environment / world of tasks here is just as much a part of the project as the agent, unlike for DreamerV3, so we should start there.

The Environment

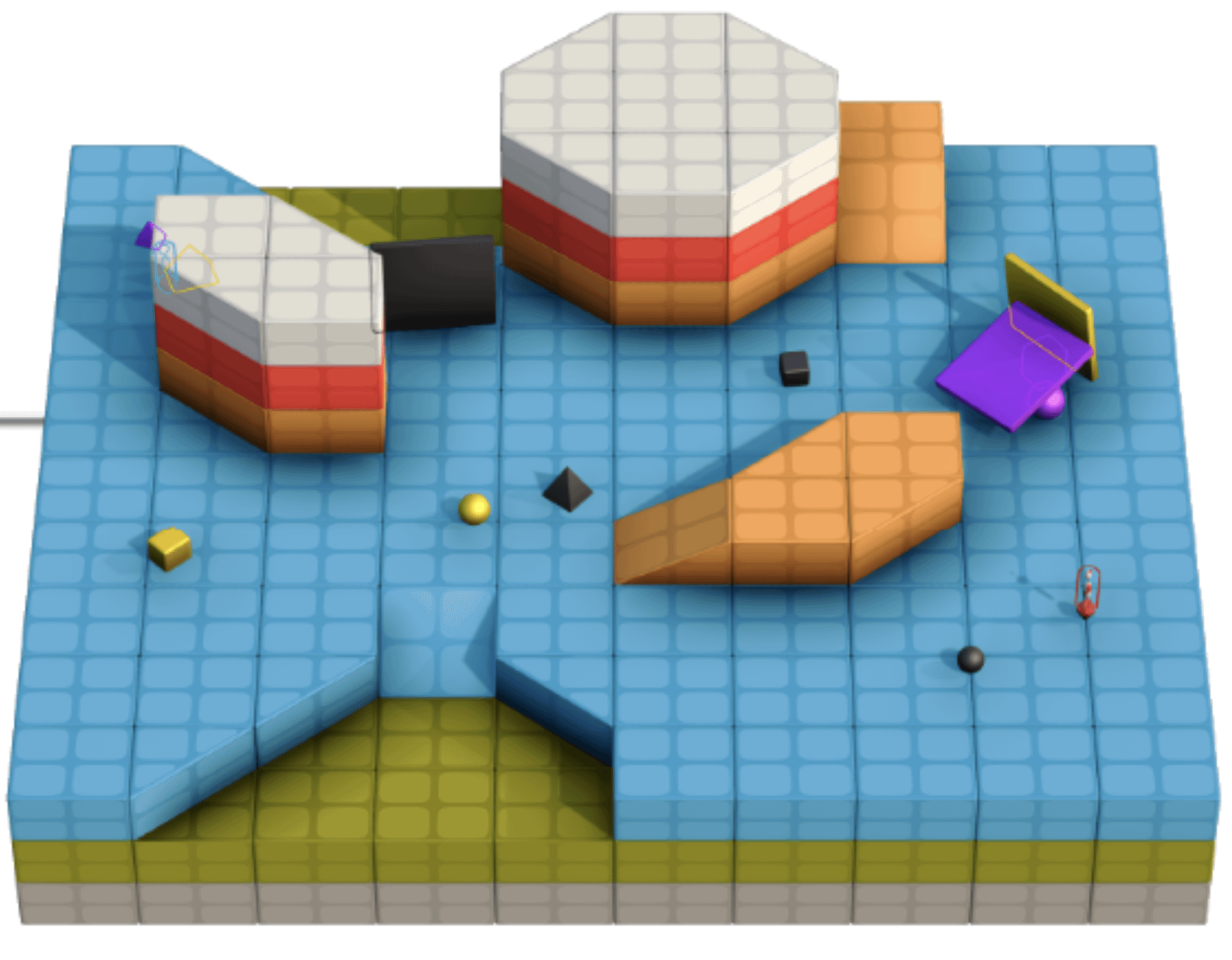

Like DeepMind’s previous work on open-ended learning, this paper uses a Unity-based 3d environment called XLand, modified and dubbed XLand 2.0 for this project.

Like XLand 1.0, the environment’s topography can range from flat to many-layered. Rigid, moveable bodies—spheres, cubes, pyramids, flat surfaces—of different sizes and colors appear across the environment. Agents placed in the environment can hold or push these objects. Agents can have goals in the environment that range from simple (“hold the black cube”) to more complex (“make no yellow cubes be near black spheres”).

New to XLand 2.0 are the “production rules”, a kind of basic virtual chemistry. Each production rule defines a condition and a list of spawns. The condition is a predicate like black sphere near purple cube; if the condition becomes satisfied, then the items dictated by spawns appear in the place of the original objects. Each game can have multiple production rules. Using some production rules can be necessary for obtaining the game-specific goal; using other rules might make obtaining the goal impossible by destroying necessary objects. Although these production rules may be visible to the agent, they also may be be partially or entirely hidden, requiring the agent to experiment to determine what they are.

To get a good idea of what the environments and the agent are like, I’d recommend taking a look at the fully-trained agent playing in several different environments. Here is handful I found interesting—note that all of the following are human-designed examples being played by the fully-trained agent, which has never seen any of them before.

-

Object Permanence—The agent has to remember the right path to an object although the path to the object takes it out of sight.

-

Antimatter—That goal of the agent is to make it so no black spheres are in line-of-sight of yellow spheres; a hidden rule destroys yellow and black spheres if they ever touch.

-

Push, Don’t Lift—Two objects disappear if the agent lifts them, so it has to try to push them together.

With all the variety of topographies, objects, rules, and goals, there is an enormous variety of possible games the agent can play. With such an infinite variety of tasks, you can create tasks of essentially arbitrary levels of difficulty. While training the agent, by testing prospective training tasks against the current capabilities of the agent, the system can selects training tasks which are hard, but not impossibly hard.

So the agent is trained against an ascending curriculum of ever-more-difficult tasks—the open-ended learning paradigm.

Meta RL

If you’ve watched the above videos, you’ll have noticed that while training / evaluating, the agent goes through a series of episodes each consisting of several trials.

Each episode takes place in the same environment, with the same goals. Each trial lasts a fixed amount of time (per trial); afterwards the environment state is entirely reset, but the agent’s transformer-based memory is not reset. This allows the agent at test time to adapt its policy from what it has learned, without any further updates to its weights.

The specific algorithm the agent uses is Muesli, a MuZero-inspired variant of Maximum a Posteriori Policy optimization (MPO). Muesli’s paper says that it was engineered to handle partial observability, stochastic policies, and many different environments.

As mentioned, the agent has a transformer-based memory over previous timesteps, on top of which are a policy head (which predicts the best action) and a value head (which predicts how good the current state is).

Finally, note that agent training involves teacher-student distillation.

That is, they first train a small agent on a variety of tasks for a short time. This agent will be the teacher. After training the teacher, they start to train a student agent in the environment—but in addition to learning from the environment, the student agent is trained to imitate what the teacher agent would do in the situations it encounters. Thus, the process distills knowledge from the first agent into the second. This process dependably increases the speed at which the second agent learns and, more to the point, also increases the asymptotic score of the second agent.

Results

Some testing is performed on a held-out set of 1000 tasks from the training distribution which the model never saw during training. On this set, the model gets > 0.8 of the possible score on 40% of the tasks zero shot. But after 13 trials it gets 0.8 of the possible score on 72% of the tasks, confirming that it can learn about tasks quickly.

They also train on 30 held-out manually constructed test environments. I’ve already linked to several above but you can see more. Generally, AdA learns at least as fast as humans, although humans can succeed at a small number of tasks that AdA does not learn, such as a task which requires you to move one object with a different object.

All of the above is about the single-agent setting; they also train models to adapt on the fly in the multi-agent setting, with similar good results. Agents often learn to cooperate and divide labor.

Scaling

This paper has more and more informative RL scaling charts than any prior RL paper I’ve read.

First, to get the basics out of the way, increasing the number of parameters in the model improves the behavior of the agent. In the following they scale up to a model with 533 million parameters total—with 265 million in the Transformer part of the model, which is what they put on their charts, which is somewhat confusing. The intro says that this is the largest RL model trained from scratch of which the authors are aware, and yeah, I’m not gonna disagree with them.

The following chart shows the number of transformer parameters in the model against the median test score (on the left) and the 20th percentile from the bottom test score (on the right). (For all of the following, the authors show score on the median task and the 20th-percentile-from-the-bottom-task because one of their goals is to make agents which are robust; broad competence on a variety of tasks is desired, which means doing ok on many things is generally more important than doing really well on a handful.)

Note that x and y axis are log-scaled. The colors indicate the number of trials, with red as 1 and purple as 13.

You can see that model size increases performance, but mostly in the context of multiple trials. For just one trial, scaling from 6m to 265m parameters bumps the median score from 0.49 to something like 0.65. But for all 13 trials, scaling the same amount bumps median score from 0.77 to 0.95. Larger models also help particularly at the very difficult tasks, as indicated by the 20th percentile lines.

Granted that performance increases as model size increases, what is the most efficient way to spend compute? The above chart is for agents trained for the same amount of time—which means that the larger agents have used far more compute. What if instead, we train agents for the same number of floating-point-operations?

They have charts for that, but the results are a little ambiguous.

Put briefly; for total FLOPs expended a mid-size model seems to be the best. Very small models do poorly, and very large models do poorly, but in the middle at around 57 million transformer-parameters you seem to find a happy medium.

On the other hand “due to poor optimization” (p52), they say that part of the agent increases in compute expense with size at an excessively high rate. If you exclude this apparently mis-optimized part of the model, and instead of looking at total flops look at total FLOPs per learner, you find that even the largest model size is still improving over those smaller than it in compute efficiency, and there would be benefit to scaling up the model yet further past half a billion parameters.

So it is unclear what the ideal model size / training length ratio looks like, even just on this environment.

Stepping away from ideal model sizes—the paper also looks into the conditions required for large models to be beneficial at all. Specifically, at least with this kind of agent in this kind of environment, they show that large models are not nearly as beneficial—or do nothing—without:

-

Complex Environments: The authors find evidence that scaling only works well in sufficiently complex environments. They test for this by training many differently-sized agents on games that only occurred in a flat room without topographic complexity—in a really simple environment. Those which are trained in the flat room start to get worse as they scale up, while agents trained in the full spectrum of complex environments continue to simply get better as they have more parameters.

-

Distillation: If you train a 23 million-parameter model from scratch without teacher-student distillation, it still works, although not as well as with distillation. On the other hand, if you train a 265 million-parameter model from scratch without distillation, it learns essentially nothing (p17). (With distillation, the larger model works better, as I mentioned.) So distillation can also be a prerequisite for using a very large model.

-

A Large Number of Games: The authors trained 28 million / 75 million parameter models while sampling from a pool of either 200 million or 25 billion tasks. In all cases here, the larger model does better, and the models trained on a larger pool of tasks do better—but the larger model does better by a greater amount than the smaller in the case of the 25 billion tasks. (The effect doesn’t seem as pronounced as the other two cases, though.)

Conclusion

Other papers have also tried scaling up RL recently.

For instance, one very recent paper claims that RL agents like AlphaZero are vastly underparameterized. Other recent papers have tried to scale up RL models with other techniques.

I think these two DeepMind papers provide the strongest evidence yet for the applicability of parameter-scaling RL, because they train with > 150 million parameters, and because of the number of environments (DreamerV3) or breadth of the environment (AdA) in which they train.

This might be the distant rumble from a potential shift. Transformer-based LLMs let you turn capital into language model performance, via engineering payroll and purchases from NVIDIA. If RL parameter scaling works generally, and works notably better than training longer, then you could turn capital into RL model performance via the same route that LLMs take. If RL performance can become economically useful, this makes RL scaling very likely.

Many things are still uncertain, of course:

-

What kind of laws govern ideal RL model size? How would you even go about determining environment-independent rules?

-

AdA indicates that you need sufficient environmental complexity for scaling to work—but parameter scaling with DreamerV3 may (?) work regardless of environmental complexity. This is likely because the world model in DreamerV3 takes up the majority of its parameters. But is this true?

-

We already knew that DreamerV2 could train a robot to walk in an hour, but RL still seems to little application in industrial robots. Would scaling unlock further RL sample-efficiency such that new robotic applications become economically viable?

-

The AdA paper is quite complex, rests heavily on prior DeepMind work, and likely rests on internal-to-DeepMind expertise. How many teams outside of DeepMind would even be able to reproduce something like it to take advantage of its scaling laws?

-

From GPT-2 (proof of scaling) to GPT-3 (scale) took a bit over a year. Is any of the above a proof of scaling of RL or not?

My tentative guess is that growth in RL will still be “slow” compared to LLMs growth and RLHF with LLMs. Even so—for me, reading these papers is definitely an update in the direction of more useful RL earlier, regardless of the absolute values attached.

My impression from the DreamerV3 paper was actually that I expect it to be less easy to generalize than EfficentZero in many ways, because it has clever hacks like symlog on rewards that are actually encoding programmer knowledge about the statistics of the environment. (The biggest such thing in EfficientZero is maybe the learning rate schedule? Or its value prediction scheme is pretty complicated, but didn’t seem fine-tuned. But in the DreamerV3 paper there’s quite a few pieces that seemed carefully engineered to me.)

On the other hand, there are convincing arguments for why eventually, scaling and generalization should be better for a system that learns its own rules for prediction / amplification, rather than being locked into MCTS. But I feel like we’re not quite there yet—transformers are sort of like learning rules for convolution, but we might imagine that for RL we want to be able to learn even more complicated generalization processes. So currently I expect benefits of scale, but we can’t really take off to the moon (fortunately).

I’m quite unsure as well.

On one hand, I have the same feeling that it has a lot of weirdly specific, surely-not-universalizing optimizations when I look at it.

But on the other—it does seem to do quite well on different envs, and if this wasn’t hyper-parameter-tuned then that performance seems like the ultimate arbiter. And I don’t trust my intuitions about what qualifies as robust engineering v. non-robust tweaks in this domain. (Supervised learning is easier than RL in many ways, but LR warm-up still seems like a weird hack to me, even though it’s vital for a whole bunch of standard Transformer architectures and I know there are explanations for why it works.)

Similarly—I dunno, human perceptions generally map to something like log-space, so maybe symlog on rewards (and on observations (?!)) makes deep sense? And maybe you need something like the gradient clipping and KL balancing to handle the not-iid data of RL? I might just stare at the paper for longer.

See section 5.3 “Reinforcement Learning” of https://arxiv.org/abs/2210.14891 for more RL scaling laws with number of model parameters on the x-axis (and also RL scaling laws with the amount of compute used for training on the x-axis and RL scaling laws with training dataset size on the x-axis).