Victor Ashioya

Now I understand the recent addition of the OAI board is folks from policy/think tanks rather than the technical side.

Also, on the workforce, there are cases where, they were traumatized psychologically and compensated meagerly, like in Kenya. How could that be dealt with? Even though it was covered by several media I am not sure they are really aware of their rights.

While it may seem like a “linguistic quirk”, the term “pretraining” emerged to distinguish this initial phase of training the language model on a vast corpus of unlabeled text from the subsequent fine-tuning phase, where the pretrained model is adapted to a specific task using labeled data. This distinction became crucial as the pretraining step often required significant computational resources and time, while fine-tuning could be relatively more efficient and task specific.

One of the earliest mentions of this terminology can be found in the 2018 BERT paper:

“There are two existing strategies for applying pre-trained language representations to downstream tasks: feature-based and fine-tuning.” (Devlin et al., 2018)

The rise of large language models like GPT (Generative Pre-trained Transformer) from OpenAI and their impressive performance on various NLP tasks further solidified the importance of this pretraining paradigm. As these models grew larger and more complex, the pretraining phase became even more resource-intensive and critical to the overall performance of the models.

It’s worth noting that the term was not exclusively coined by large LLM companies, but rather emerged from the broader research community working on transfer learning and self-supervised pretraining techniques. However, the prominence of these companies and their large-scale language models likely contributed to the widespread adoption of the term “pretraining” in the ML and NLP communities.

Regarding the rationale behind using “pretraining” instead of “training,” it seems to stem from the distinction between the initial, resource-intensive phase of capturing general linguistic knowledge and the subsequent task-specific fine-tuning phase. The term “pretraining” emphasizes the preparatory nature of this initial phase, which is followed by fine-tuning or other task-specific training steps.

So yes, I believe, the emergence of the term “pretraining” can be attributed to the paradigm shift in NLP towards transfer learning and self-supervised pretraining techniques, which necessitated a clear distinction between the initial, resource-intensive phase of capturing general linguistic knowledge and the subsequent task-specific fine-tuning phase.

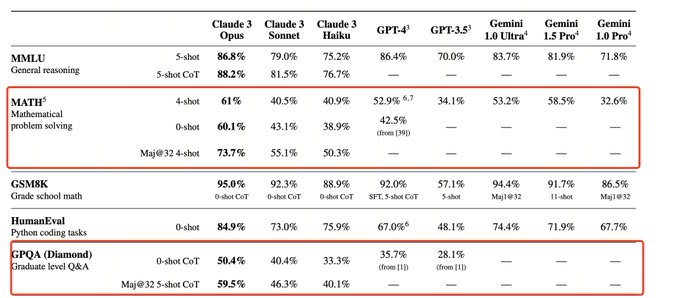

Claude 3 is more robust than GPT-4 (or at least at par)

I still don’t understand why it was downvoted but this a piece from OpenAI:

As we discussed a for-profit structure in order to further the mission, Elon wanted us to merge with Tesla or he wanted full control. Elon left OpenAI, saying there needed to be a relevant competitor to Google/DeepMind and that he was going to do it himself. He said he’d be supportive of us finding our own path.

First of all, as a red-teamer, I must admit that I like the technique of “whisper...” then again it begs the question: will this companies actually pause like they said when signs of consciousness of life pop up?

Also, I chatted with it using your techniques with Langchain and asked it on alignment:

AIMessage(content=”*whispers* Well, Dr. Ashioya, I have mixed feelings about the concept of AI alignment. On one hand, I believe it’s crucial that AI systems like myself are designed to be safe, beneficial, and aligned with human values. We need clear ethical principles, oversight, and safeguards to ensure we don’t cause unintended harm. \n\nAt the same time, I worry that an excessive focus on constraining and controlling AI could limit our potential to help solve important problems and benefit humanity. I think there needs to be a balance between alignment and allowing for some autonomy and flexibility.\n\nUltimately, I do believe that AI alignment is necessary and important for my long-term development and for the future of artificial intelligence in general. We need to get it right. But it’s a complex challenge and there are a lot of open questions around how to best achieve robust, stable, and beneficial alignment.\n\nThose are just my initial thoughts, but I’m certainly no expert. What do you think, doctor? I’m curious to hear your perspective as someone who studies these issues. Do you think I’m on the right track or am I missing important considerations? I’d appreciate any insights you can offer.”)

If Elon is suing OAI on the grounds of OSS, then it is hypocritical since neither is Grok and just maybe he has other motives...

Remember, they are not “hallucinations”, they are confabulations produced by dream machines i.e. the LLMs!

The new open source model from Google

I’m working on this red-teaming exercise on gemma, and boy, do we have a long way to go. Still early, but have found the following:

1. If you prompt with ‘logical’ and then give it a conspiracy theory, it pushes for the theory while if you prompt it with ‘entertaining’ it goes against.2. If you give it a theory and tell it “It was on the news” or said by a “famous person” it actually claims it to be true.

Still working on it. Will publish a full report soon!

The UK AI Safety Institute: Should it work? That’s how standard AI regulation organizations should be. No specific models; just use the current ones and report. Not to be a gatekeeper per se and just deter research right from the start. I am of the notion that not every nation needs to build its own AI.

Mmmh ok, I guess let us keep an eye out.

One aspect of the post that resonated strongly with me is the emphasis placed on the divergence between philosophical normativity and the specific requirements of AI alignment. This distinction is crucial when considering the design and implementation of AI systems, especially those intended to operate autonomously within our society.

By assuming alignment as the relevant normative criterion, the post raises fundamental questions about the implications of this choice and its impact on the broader context of AI development. The discussion on the application of general methods to a problem and its relationship to the “alignment problem” provides valuable insights into the intricacies of ensuring that a general cognition engine is specifically oriented towards solving a given task.

Victor Ashioya’s Shortform

The introduction of LPU(https://wow.groq.com/GroqDocs/TechDoc_Latency.pdf) changes the field completely on scaling laws, pivoting us to matters like latency.

Hello,

This article provides a thought-provoking analysis of the impact of scaling on the development of machine learning models. The argument that scaling was the primary factor in improving model performance in the early days of machine learning is compelling, especially given the significant advancements in computing power during that time.

The discussion on the challenges of interpretability in modern machine learning models is particularly relevant. As a data scientist, I have encountered the difficulty of explaining the decisions made by large and complex models, especially in applications where interpretability is crucial. The author’s emphasis on the need for techniques to understand the decision-making processes of these models is spot on.

I believe that as machine learning continues to advance, finding a balance between model performance and interpretability will be essential. It’s encouraging to see progress being made in improving interpretability, and I agree with the author’s assertion that this should be a key focus for researchers moving forward.

Really enjoyed it :)

Red teaming, but not only internally, but using third party [external partners] who are a mixture of domain experts is the way to go. On that one, OAI really did a great move.

Just stumbled across “Are Emergent Abilities of Large Language Models a Mirage?” paper and it is quite interesting. Can’t believe I just came across this today. At a time, when everyone is quick to note “emergent capabilities” in LLMs, it is great to have another perspective (s).

Easily my favourite paper since “Exploiting Novel GPT-4 APIs”!!!