Is the LSRDR a proposed alternative to NNs in general?

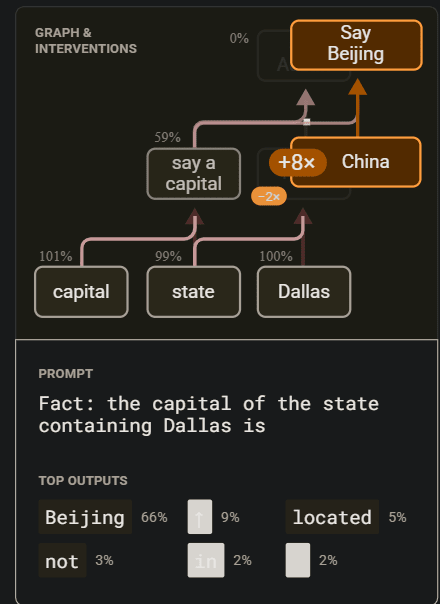

What interpretability do you gain from it?

Could you show a comparison between a transformer embedding and your method with both performance and interpretability? Even MNIST would be useful.

Also, I found it very difficult to understand your post (e.g., you didn’t explain your acronym! I had to infer it). You can use the “request feedback” feature on LW in the future; they typically give feedback quite quickly.

That does clarify a lot of things for me, thanks!

Looking at your posts, there are no hooks and no attempt to sell your work, which is a shame because LSRDRs seem useful. Since they are useful, you should be able to show it.

For example, you trained an LSRDR for text embedding, which you could show at the beginning of the post. Then show the cool properties of pseudo-determinism and lack of noise compared to NNs. THEN all the maths. That way the math folks know whether the post is worth their time, and the non-math folks can upvote and share with their mathy friends.

I am assuming that you care about [engagement, useful feedback, connections to other work, possible collaborators] here. If not, then sorry for the unwanted advice!

I’m still a little fuzzy on your work, but possibly related papers that come to mind are on tensor networks.

Compositionality Unlocks Deep Interpretable Models - they efficiently train tensor networks on [harder MNIST], showing approximately equivalent loss to NNs, and show the inherent interpretability in their model.

Tensorization is [Cool essentially] - https://arxiv.org/pdf/2505.20132 - mostly a position and theoretical paper arguing why tensorization is great and what its limitations are.

I’m pretty sure both sets of authors here read LW as well.