It seems easier to fit an analogy to an image than to generate an image starting with a precise analogy (meaning a detailed prompt).

Maybe because an image is in a higher-dimensional space and you’re projecting onto the lower-dimensional space of words.

(take this analogy loosely, idk any linear algebra)

Claude: "It's similar to how it's easier to recognize a face than to draw one from memory!"

(what led to this:)

I've been trying to create visual analogies of concepts/ideas in order to compress them, and I noticed how hard it is. It's hard to describe images, and image generators have trouble sticking to descriptions (unless you pay a lot per image).

Then I started instead to just caption existing images to create sensible analogies. I found this way easier, and it gives good-enough representations of the ideas.

(some people’s solution to this is “just train a bigger model” and I’d say “lol”)

(Note: images are high-dimensional not in terms of pixels but in concept space.

You have color, relationships of shared/contrasting colors between the objects (and replace "color" with any other trait that implies relationships among objects), the way things are positioned, lighting, textures, etc. etc.

Sure, you can treat them all as configurations in pixel space, but I just don't think that's the right framing (too low an abstraction).)

(specific example that triggered this idea and this post:)

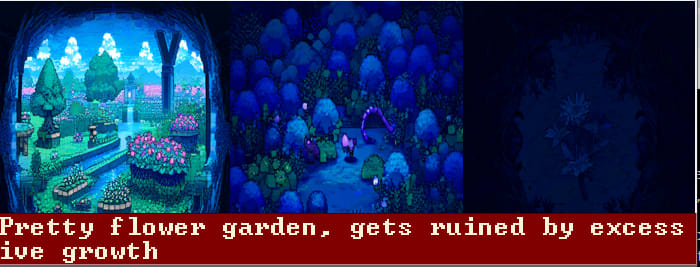

pictured: the "algorithmic carcinogenesis" idea, discussed in this post: https://www.conjecture.dev/research/conjecture-a-roadmap-for-cognitive-software-and-a-humanist-future-of-ai

(generated with NovelAI)

I was initially trying to do more precise and detailed analogies, like sloppy computers/digital things spreading too fast like cancer, killing and crowding out existing beauty, where the existing beauty is of the same "type" (also computers/digital things) but just more organized or less "cancerous" (somehow). It wasn't working.

Editability and findability --> higher quality over time

Editability

Code being easier to find and easier to edit, for example, makes it more likely to be edited, and so more subject to feedback-loop dynamics.

The same applies to writing, or to anything where you have connected objects that influence each other and the "influencer node" is editable and visible.

(not sure if the last one is a good example)

Imagine if, when writing this Quick Take™, I had a side panel that, on every keystroke, pulled up related paragraphs from all my existing writings!

I'd see past writings, which is cool, but I could also edit them way more easily (assuming a "jump to" feature). In the long term this yields many more edits, and a more polished and readable body of work overall.
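To make the side-panel idea a bit more concrete, here's a minimal sketch of a "related paragraphs" lookup, assuming plain bag-of-words cosine similarity. That's my swapped-in choice, not a description of any existing tool; a real version would more likely use embeddings and a prebuilt index instead of rescanning the whole archive on every keystroke. `related_paragraphs` and the sample archive are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters/apostrophes as words.
    return re.findall(r"[a-z']+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two word-count vectors (0.0 if either is empty).
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def related_paragraphs(draft: str, archive: list[str], k: int = 3) -> list[tuple[float, str]]:
    # Hypothetical side-panel lookup: score every archived paragraph against
    # the current draft and return the top k that share at least one word.
    draft_vec = Counter(tokenize(draft))
    scored = [(cosine(draft_vec, Counter(tokenize(p))), p) for p in archive]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [pair for pair in scored[:k] if pair[0] > 0]


if __name__ == "__main__":
    # Hypothetical archive of past writing.
    archive = [
        "Code that is easy to find gets edited more often.",
        "Image generators struggle to follow detailed prompts.",
        "My notes on gardening and soil.",
    ]
    for score, paragraph in related_paragraphs(
        "why is code easier to edit when it is easy to find", archive, k=2
    ):
        print(f"{score:.2f}  {paragraph}")
```

Calling this on each keystroke (or after a short pause in typing) is what would keep old paragraphs "close" enough to load into your head and notice what's wrong with them.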

Findability

If you can easily see the contents of something and go "wait, this is dumb," then even if it's "far away" (like, you have to find it in the browser, do mouse clicks and scrolls), you'll still edit it.

What actually determined whether you edited it is that the threshold for loading its contents into your mind had been lowered. Once you load it, the opinion is triggered instantly.