QAPR 4: Inductive biases

Introduction

This is week 4 of Quintin’s Alignment Papers Roundup. The current focus is the inductive biases of stochastic gradient descent.

For most datasets and labels, there are many possible models that reach good performance. “Inductive biases” refers to the various factors that incline a particular training process to find some types of models over others. When the data under-specify the learned model, a training process’s inductive biases determine what sort of decision making process the model implements, and how the model generalizes beyond its training data.

I’d intended to publish this last week, but it turns out that there’s a lot of work on SGD’s inductive biases, and it’s very technical. I kept finding new papers that seemed relevant. That’s why this roundup has 16 papers, rather than the usual nine or so.

Papers

Eigenspace Restructuring: a Principle of Space and Frequency in Neural Networks

Understanding the fundamental principles behind the massive success of neural networks is one of the most important open questions in deep learning. However, due to the highly complex nature of the problem, progress has been relatively slow. In this note, through the lens of infinite-width networks, a.k.a. neural kernels, we present one such principle resulting from hierarchical localities. It is well-known that the eigenstructure of infinite-width multilayer perceptrons (MLPs) depends solely on the concept frequency, which measures the order of interactions. We show that the topologies from deep convolutional networks (CNNs) restructure the associated eigenspaces into finer subspaces. In addition to frequency, the new structure also depends on the concept space, which measures the spatial distance among nonlinear interaction terms. The resulting fine-grained eigenstructure dramatically improves the network’s learnability, empowering them to simultaneously model a much richer class of interactions, including Long-Range-Low-Frequency interactions, Short-Range-High-Frequency interactions, and various interpolations and extrapolations in-between. Additionally, model scaling can improve the resolutions of interpolations and extrapolations and, therefore, the network’s learnability. Finally, we prove a sharp characterization of the generalization error for infinite-width CNNs of any depth in the high-dimensional setting. Two corollaries follow: (1) infinite-width deep CNNs can break the curse of dimensionality without losing their expressivity, and (2) scaling improves performance in both the finite and infinite data regimes.

My opinion:

The neural tangent kernel (NTK) lets us directly compute the inductive biases of a neural network near a particular point in parameter space. The NTK’s eigenfunctions give us possible behaviors, and its spectrum (its eigenvalues) tells us how easily the network can learn each eigenfunction.
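Here is a heavily simplified illustration of the eigenfunction/spectrum picture. It is a sketch of linearized gradient descent on squared error, f ← f − lr·K(f − y). K is a made-up 2×2 kernel on two training inputs, not any real network's NTK. Each step shrinks the residual's component along each eigenvector of K by a factor of (1 − lr·λ). Directions with larger eigenvalues are therefore learned faster.

```python
import math

# Hypothetical 2x2 kernel standing in for an empirical NTK on two training inputs.
# Eigenvectors: (1, 1)/sqrt(2) with eigenvalue 3, and (1, -1)/sqrt(2) with eigenvalue 1.
K = [[2.0, 1.0],
     [1.0, 2.0]]
y = [1.0, 0.0]  # hypothetical training targets
lr = 0.1        # learning rate


def matvec(m, v):
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


def project(r, v):
    """Component of residual r along the unit vector v."""
    return sum(a * b for a, b in zip(r, v))


v_fast = [1 / math.sqrt(2), 1 / math.sqrt(2)]   # eigenvalue 3
v_slow = [1 / math.sqrt(2), -1 / math.sqrt(2)]  # eigenvalue 1

f = [0.0, 0.0]  # outputs on the training inputs at initialization
r0 = [fi - yi for fi, yi in zip(f, y)]
for _ in range(10):
    # Linearized training on squared error: f <- f - lr * K (f - y)
    residual = [fi - yi for fi, yi in zip(f, y)]
    f = [fi - lr * gi for fi, gi in zip(f, matvec(K, residual))]

r = [fi - yi for fi, yi in zip(f, y)]
fast_ratio = project(r, v_fast) / project(r0, v_fast)  # (1 - 3 * lr) ** 10, up to rounding
slow_ratio = project(r, v_slow) / project(r0, v_slow)  # (1 - 1 * lr) ** 10, up to rounding
print(fast_ratio, slow_ratio)
```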

This paper uses the NTK to compare the architectural inductive biases of convolutional networks to those of multilayer perceptrons. It seems like a promising approach for better understanding what sorts of behaviors different architectures are inclined to learn. However, this paper makes two major simplifying assumptions:

1. It assumes the infinite-width limit, thereby ensuring the NTK remains constant through training (and also preventing any feature learning).

2. It assumes that input data are uniformly distributed across a manifold formed as a product of hyperspheres.

This paper’s discussion of inductive biases focuses a lot on the frequency biases of neural networks, rather than on things more related to alignment. It is very hard to use the NTK (or any mathematical formalism) to talk about inductive biases towards or away from “intentional” / high-level concepts, such as values, deception, corrigibility, etc. However, it is much easier to evaluate how different NTKs bias the network towards learning functions of different frequencies, and so much of the discussion of NTK inductive biases focuses on frequency.

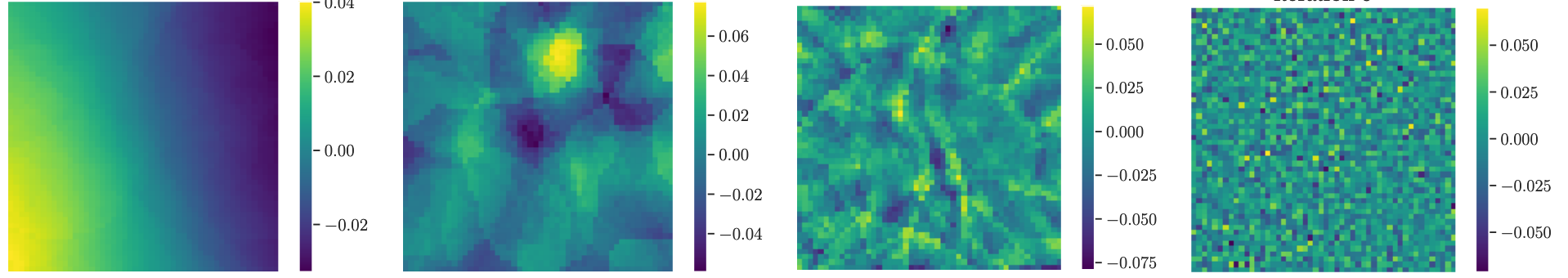

For a freshly initialized network, the NTK is going to give you a list of functions such as these:

and tell you how easily the network can learn each of them. It’s easy to rank these functions by frequency, but not so easy to rank them by how much learning them inclines the network towards liking geese.
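Why is frequency the natural axis here? Take inputs evenly spaced on a circle and a kernel that depends only on the angle between two inputs. The kernel matrix is then circulant, so Fourier modes are exactly its eigenvectors, and each frequency's eigenvalue is a simple sum. The sketch below assumes a hypothetical smooth kernel, k(Δ) = exp(cos Δ), not a real network's NTK, whose spectrum need not decay this cleanly. It shows eigenvalues falling with frequency. Correspondingly, kernel gradient descent needs more steps to fit higher-frequency components.

```python
import math

N = 32  # number of equally spaced inputs on the unit circle


def kernel(delta):
    """Hypothetical rotation-invariant kernel: depends only on the angle between two inputs."""
    return math.exp(math.cos(delta))


def eigenvalue(m):
    # K[i][j] = kernel(2*pi*(i - j)/N) is circulant, so the Fourier mode
    # cos(2*pi*m*j/N) is an eigenvector and this sum is its eigenvalue.
    return sum(kernel(2 * math.pi * j / N) * math.cos(2 * math.pi * m * j / N)
               for j in range(N))


eigs = [eigenvalue(m) for m in range(6)]
lr = 0.5 / eigs[0]  # keeps 1 - lr * eig inside (0, 1) for every frequency
# Under kernel gradient descent, the residual at frequency m shrinks by (1 - lr * eig_m) per step.
steps_to_halve = [math.log(0.5) / math.log(1 - lr * e) for e in eigs]
for m, (e, s) in enumerate(zip(eigs, steps_to_halve)):
    print(f"frequency {m}: eigenvalue {e:.4g}, steps to halve the residual {s:.4g}")
```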

Implicit Regularization via Neural Feature Alignment

We approach the problem of implicit regularization in deep learning from a geometrical viewpoint. We highlight a regularization effect induced by a dynamical alignment of the neural tangent features introduced by Jacot et al, along a small number of task-relevant directions. This can be interpreted as a combined mechanism of feature selection and compression. By extrapolating a new analysis of Rademacher complexity bounds for linear models, we motivate and study a heuristic complexity measure that captures this phenomenon, in terms of sequences of tangent kernel classes along optimization paths.

My opinion:

(see below)

What can linearized neural networks actually say about generalization?

For certain infinitely-wide neural networks, the neural tangent kernel (NTK) theory fully characterizes generalization, but for the networks used in practice, the empirical NTK only provides a rough first-order approximation. Still, a growing body of work keeps leveraging this approximation to successfully analyze important deep learning phenomena and design algorithms for new applications. In our work, we provide strong empirical evidence to determine the practical validity of such approximation by conducting a systematic comparison of the behavior of different neural networks and their linear approximations on different tasks. We show that the linear approximations can indeed rank the learning complexity of certain tasks for neural networks, even when they achieve very different performances. However, in contrast to what was previously reported, we discover that neural networks do not always perform better than their kernel approximations, and reveal that the performance gap heavily depends on architecture, dataset size and training task. We discover that networks overfit to these tasks mostly due to the evolution of their kernel during training, thus, revealing a new type of implicit bias.

My opinion:

A natural question to ask is how to extend approaches like Eigenspace Restructuring to track an architecture’s inductive biases across an entire trajectory of neural net optimization. Ideally, we’d have a theoretical model of how the NTK and its inductive biases change over the course of training, then “integrate” over that trajectory to fully account for the functions learned over the training process.

The two papers above do not do this. Instead, they empirically investigate how the NTK changes over the course of network training, and how those changes impact our ability to predict training dynamics and generalization, finding that the NTK adapts over time to align with the labeling of the training data.
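One common way to quantify “the NTK aligns with the labels” is kernel-target alignment: the cosine similarity between the kernel matrix K and the label outer product yyᵀ. Below is a minimal sketch of the uncentered version; the papers above may use centered or otherwise modified variants. It compares two made-up 4×4 kernels: one whose similarity structure ignores the labels, and one where same-label points look alike.

```python
import math


def alignment(K, y):
    """Uncentered kernel-target alignment <K, yy^T>_F / (||K||_F * ||yy^T||_F)."""
    n = len(y)
    inner = sum(K[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    k_norm = math.sqrt(sum(K[i][j] ** 2 for i in range(n) for j in range(n)))
    yy_norm = sum(v * v for v in y)  # ||yy^T||_F equals ||y||^2
    return inner / (k_norm * yy_norm)


y = [1.0, 1.0, -1.0, -1.0]  # hypothetical binary labels

# Hypothetical "initial" kernel: similarity pattern unrelated to the labels.
K_init = [[1.0, 0.0, 0.5, 0.0],
          [0.0, 1.0, 0.0, 0.5],
          [0.5, 0.0, 1.0, 0.0],
          [0.0, 0.5, 0.0, 1.0]]

# Hypothetical "trained" kernel: points with the same label look similar.
K_trained = [[1.0, 0.9, 0.1, 0.1],
             [0.9, 1.0, 0.1, 0.1],
             [0.1, 0.1, 1.0, 0.9],
             [0.1, 0.1, 0.9, 1.0]]

print(alignment(K_init, y), alignment(K_trained, y))
```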

While not as useful as a theoretical model of how the NTK changes over training, these empirical results still seem alignment-relevant. E.g., they imply that inductive biases can be learned from the training labels. This matches findings that humans become more shape-biased as they grow up, and switch between shape and texture bias depending on what they’re looking at (e.g., being shape-biased for animals, but texture-biased for liquids / pastes). See section 1.1 of this dissertation.

Having context-sensitive inductive biases seems very useful if you want to quickly adapt to new information. Using different inductive biases for different (learned) object classes seems ~impossible to encode in an architecture or learning process, so I think it would have to be learned from the training data. Probably, many of the inductive biases of humans and AIs come from complex interactions between architecture, training process and data, and are far outside of the constant NTK limit.

We’ve also seen a similar result from the other direction: meta learning uses a two-level optimization setup, where the outer optimizer uses second-order gradients to learn an initialization that the inner optimizer can quickly adapt to downstream tasks. However, this paper found that the outer optimizer mostly just learns high-performance features directly.
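For readers unfamiliar with the two-level setup, here is a minimal sketch of MAML-style meta learning. It uses hypothetical one-dimensional quadratic tasks L_i(θ) = ½(θ − c_i)², where the second-order term can be written by hand. The outer gradient flows through the inner update and picks up a factor of (1 − α·L_i″(θ)) = (1 − α). This toy omits everything that makes the feature-reuse finding interesting; it only shows the mechanics.

```python
# Hypothetical task optima; each task's loss is 0.5 * (theta - c) ** 2.
task_centers = [-1.0, 0.5, 2.0]
inner_lr = 0.3   # alpha: step size of the inner (adaptation) update
outer_lr = 0.5   # beta: step size of the outer (meta) update


def inner_adapt(theta, c):
    # One inner gradient step on task c: d/dtheta of 0.5 * (theta - c) ** 2 is (theta - c).
    return theta - inner_lr * (theta - c)


def meta_gradient(theta):
    # d/dtheta of the mean over tasks of 0.5 * (inner_adapt(theta, c) - c) ** 2.
    # Chain rule: d inner_adapt / d theta = 1 - inner_lr * L''(theta) = 1 - inner_lr,
    # the second-order term that full MAML backpropagates through.
    total = 0.0
    for c in task_centers:
        adapted = inner_adapt(theta, c)
        total += (adapted - c) * (1 - inner_lr)
    return total / len(task_centers)


theta = 5.0  # the meta-learned initialization
for _ in range(200):
    theta -= outer_lr * meta_gradient(theta)

print(theta)  # converges toward the mean task center, 0.5
```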

Also, if SGD learns high-performance inductive biases for its training data, that could explain why explicit meta learning / self-modifying training processes don’t seem to outperform simple SGD: gradient descent already self-modifies to become better at learning the task at hand.

Tuning Frequency Bias in Neural Network Training with Nonuniform Data

Small generalization errors of over-parameterized neural networks (NNs) can be partially explained by the frequency biasing phenomenon, where gradient-based algorithms minimize the low-frequency misfit before reducing the high-frequency residuals. Using the Neural Tangent Kernel (NTK), one can provide a theoretically rigorous analysis for training where data are drawn from constant or piecewise-constant probability densities. Since most training data sets are not drawn from such distributions, we use the NTK model and a data-dependent quadrature rule to theoretically quantify the frequency biasing of NN training given fully nonuniform data. By replacing the loss function with a carefully selected Sobolev norm, we can further amplify, dampen, counterbalance, or reverse the intrinsic frequency biasing in NN training.

My opinion:

The previous two papers investigate how the NTK evolves while training finite width networks (when Eigenspace Restructuring’s assumption 1 is violated). This paper develops methods to apply NTK analysis to situations where data are not uniformly distributed (when Eigenspace Restructuring’s assumption 2 is violated). They find they can control the degree of frequency bias through the loss function, which further underscores how tightly intertwined a model’s inductive biases are with its training data.
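Here is a rough sketch of the mechanism, under simplifying assumptions that are mine rather than the paper's. Suppose the kernel is diagonal in a Fourier basis with eigenvalues λ_m. Suppose also that the loss is a weighted norm of the residual, weighting frequency m by w_m = (1 + m²)^s (a Sobolev-type weight). Kernel gradient descent then shrinks frequency m at a rate proportional to λ_m·w_m. So s < 0 amplifies the low-frequency bias, s = 0 leaves it unchanged, and a large enough s > 0 flattens or reverses it. The eigenvalue decay λ_m = 1/(1 + m²) below is hypothetical.

```python
# Hypothetical kernel eigenvalues that decay with frequency m: lambda_m = 1 / (1 + m^2).
frequencies = list(range(6))
kernel_eigs = [1.0 / (1 + m * m) for m in frequencies]


def effective_rates(s):
    """Per-frequency convergence rate lambda_m * w_m with Sobolev-type weight w_m = (1 + m^2) ** s."""
    return [lam * (1 + m * m) ** s for m, lam in zip(frequencies, kernel_eigs)]


for s in (-1, 0, 1, 2):
    print(f"s={s:+d}: " + ", ".join(f"{r:.3g}" for r in effective_rates(s)))
```

With these assumed eigenvalues, s = 1 happens to equalize every frequency exactly, and s = 2 makes high frequencies converge fastest.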

On the Activation Function Dependence of the Spectral Bias of Neural Networks

Neural networks are universal function approximators which are known to generalize well despite being dramatically overparameterized. We study this phenomenon from the point of view of the spectral bias of neural networks. Our contributions are two-fold. First, we provide a theoretical explanation for the spectral bias of ReLU neural networks by leveraging connections with the theory of finite element methods. Second, based upon this theory we predict that switching the activation function to a piecewise linear B-spline, namely the Hat function, will remove this spectral bias, which we verify empirically in a variety of settings. Our empirical studies also show that neural networks with the Hat activation function are trained significantly faster using stochastic gradient descent and ADAM. Combined with previous work showing that the Hat activation function also improves generalization accuracy on image classification tasks, this indicates that using the Hat activation provides significant advantages over the ReLU on certain problems.

My opinion:

So, it turns out you can just remove the frequency bias of deep networks, and they will still work well for some tasks (or even perform better). I was surprised by this. My impression had been that the generalization capacity of neural networks would be more sensitive to their inductive biases than that.
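For reference, here is a sketch of the two activations. It assumes the Hat function is the standard piecewise-linear B-spline supported on [0, 2] and peaking at 1; the paper may shift or rescale it. Unlike ReLU, it is bounded and compactly supported. It can also be written as a combination of three shifted ReLUs.

```python
def relu(x):
    return max(0.0, x)


def hat(x):
    """Piecewise-linear B-spline ("hat") supported on [0, 2], peaking at x = 1."""
    if 0.0 <= x <= 1.0:
        return x
    if 1.0 < x <= 2.0:
        return 2.0 - x
    return 0.0


def hat_from_relus(x):
    # The same function as a combination of three shifted ReLUs.
    return relu(x) - 2.0 * relu(x - 1.0) + relu(x - 2.0)


for x in (-1.0, 0.0, 0.5, 1.0, 1.5, 2.0, 3.0):
    print(x, relu(x), hat(x))
```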

I really wish the authors had tested their non-frequency-biased networks on a more realistic problem, ideally language modeling on the scale of BERT or larger (it’s not that expensive!). It’d be interesting to see if we could find systematic differences in the generalization behaviors of language models trained with frequency bias versus language models trained without frequency bias.

I also wonder how closely the post-training inductive biases of the two types of models would line up. Can enough data “wash out” the differences in architectural inductive biases?

Spectral Bias in Practice: The Role of Function Frequency in Generalization

Despite their ability to represent highly expressive functions, deep learning models seem to find simple solutions that generalize surprisingly well. Spectral bias—the tendency of neural networks to prioritize learning low frequency functions—is one possible explanation for this phenomenon, but so far spectral bias has primarily been observed in theoretical models and simplified experiments. In this work, we propose methodologies for measuring spectral bias in modern image classification networks on CIFAR-10 and ImageNet. We find that these networks indeed exhibit spectral bias, and that interventions that improve test accuracy on CIFAR-10 tend to produce learned functions that have higher frequencies overall but lower frequencies in the vicinity of examples from each class. This trend holds across variation in training time, model architecture, number of training examples, data augmentation, and self-distillation. We also explore the connections between function frequency and image frequency and find that spectral bias is sensitive to the low frequencies prevalent in natural images. On ImageNet, we find that learned function frequency also varies with internal class diversity, with higher frequencies on more diverse classes. Our work enables measuring and ultimately influencing the spectral behavior of neural networks used for image classification, and is a step towards understanding why deep models generalize well.

My opinion:

This paper describes practical methods for evaluating the frequency sensitivity of neural networks, and how this sensitivity varies across the network’s input space. It seems useful for investigating how interventions on network inductive biases impact post-training behaviors (at least, for behaviors related to frequency).
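Here is one simple way to probe frequency sensitivity near a particular input. It is a hypothetical probe of my own, not necessarily the paper's methodology. Evaluate the model on a small closed loop around that input and look at the Fourier spectrum of the outputs. The sketch uses toy 2D functions in place of an image classifier, and `high_frequency_fraction` is an illustrative helper name.

```python
import cmath
import math


def high_frequency_fraction(f, center, radius=1.0, n_samples=64, cutoff=4):
    """Fraction of f's spectral energy above `cutoff` along a circle around `center`.

    f maps a 2D point (list of two floats) to a float. Sampling on a closed loop
    keeps the signal periodic, so the DFT sees no wrap-around jump.
    """
    samples = []
    for t in range(n_samples):
        angle = 2 * math.pi * t / n_samples
        point = [center[0] + radius * math.cos(angle), center[1] + radius * math.sin(angle)]
        samples.append(f(point))
    # Power spectrum via a direct O(n^2) discrete Fourier transform.
    power = [abs(sum(s * cmath.exp(-2j * math.pi * k * t / n_samples)
                     for t, s in enumerate(samples))) ** 2
             for k in range(n_samples)]
    total = sum(power)
    if total == 0:
        return 0.0
    high = sum(p for k, p in enumerate(power) if min(k, n_samples - k) > cutoff)
    return high / total


def smooth_fn(x):
    return x[0]  # varies once around the loop (frequency 1)


def wiggly_fn(x):
    return math.cos(10 * math.atan2(x[1], x[0]))  # frequency 10 around the loop


print(high_frequency_fraction(smooth_fn, [0.0, 0.0]),
      high_frequency_fraction(wiggly_fn, [0.0, 0.0]))
```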

I’d be interested to see how trained networks without an initial frequency bias (see previous paper) compare to those with an initial frequency bias.

Limitations of the NTK for Understanding Generalization in Deep Learning

The “Neural Tangent Kernel” (NTK) (Jacot et al 2018), and its empirical variants have been proposed as a proxy to capture certain behaviors of real neural networks. In this work, we study NTKs through the lens of scaling laws, and demonstrate that they fall short of explaining important aspects of neural network generalization. In particular, we demonstrate realistic settings where finite-width neural networks have significantly better data scaling exponents as compared to their corresponding empirical and infinite NTKs at initialization. This reveals a more fundamental difference between the real networks and NTKs, beyond just a few percentage points of test accuracy. Further, we show that even if the empirical NTK is allowed to be pre-trained on a constant number of samples, the kernel scaling does not catch up to the neural network scaling. Finally, we show that the empirical NTK continues to evolve throughout most of the training, in contrast with prior work which suggests that it stabilizes after a few epochs of training. Altogether, our work establishes concrete limitations of the NTK approach in understanding generalization of real networks on natural datasets.

My opinion:

This paper illustrates an important limitation of using empirical estimates of the NTK at specific points in training to track inductive biases. There are important learning dynamics that only appear in aggregate across many SGD steps, including, apparently, scaling laws. Presumably, a proper theory of how the NTK evolves over training would let us predict the actual scaling behavior of architectures.
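A “data scaling exponent” is the α in a fit of test loss L(n) ≈ a·n^(−α) against training set size n. It is usually estimated as the slope of a line in log-log space. Below is a minimal least-squares sketch. The losses are synthetic, generated from exact power laws with made-up exponents purely to exercise the fit; they are not the paper's numbers.

```python
import math


def fit_power_law(ns, losses):
    """Least-squares fit of log(loss) = log(a) - alpha * log(n); returns (a, alpha)."""
    xs = [math.log(n) for n in ns]
    ys = [math.log(loss) for loss in losses]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    intercept = y_mean - slope * x_mean
    return math.exp(intercept), -slope


# Synthetic losses from exact power laws with made-up exponents (not real measurements).
ns = [1_000, 2_000, 4_000, 8_000, 16_000]
steeper = [2.0 * n ** -0.5 for n in ns]
shallower = [2.0 * n ** -0.3 for n in ns]
print(fit_power_law(ns, steeper)[1], fit_power_law(ns, shallower)[1])
```

A larger fitted α means test loss falls faster as data grows. This is the sense in which the finite networks above “scale better” than their kernels.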

Concluding thoughts about NTK-based accounts of inductive biases:

I think the real bottleneck on using the NTK in alignment is the difficulty of expressing alignment-relevant behaviors (deception, power-seeking, etc.) in terms of the inductive biases described by the NTK.

If we can translate from NTK inductive biases to alignment-relevant behaviors, I think we’d be able to use empirically estimated NTKs at points across the network’s training trajectory to get useful estimates of how inclined the network is towards learning those behaviors (rather than needing a theoretical understanding of NTK evolution).

In particular, “What can linearized neural networks actually say about generalization?” indicates that the NTK can rank the relative learnability of different tasks, even while providing an overall poor estimate of the network’s capabilities. So even noisy estimates from the NTK may suffice to determine whether models end up deceptive or power-seeking.

For translating from NTK inductive biases to alignment-relevant behaviors, I think our best bet is to study the NTKs of pretrained LMs. Probably, their NTKs have been restructured to make semantically meaningful behaviors more learnable. I expect it’s easier to relate such inductive biases to the behaviors we’re interested in.

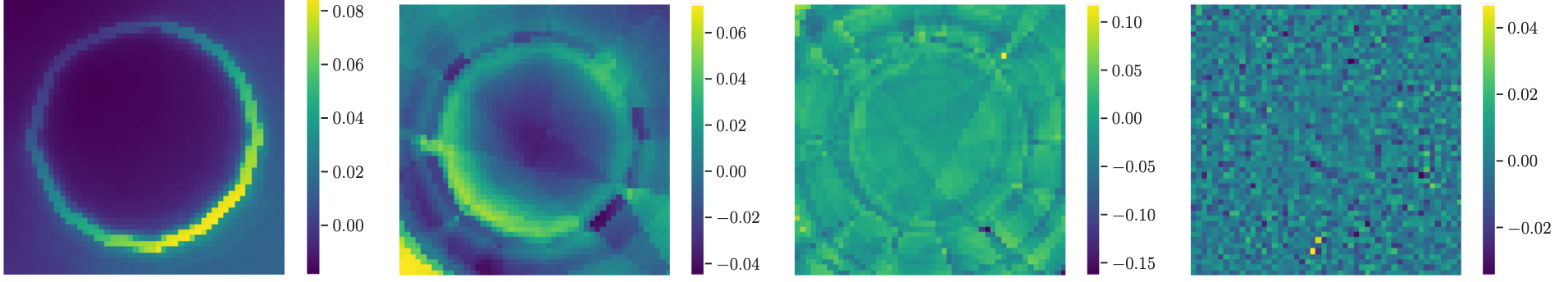

E.g., the Implicit Regularization paper trained on a toy problem of determining whether points were in a disk of radius centered at the origin. Figure 1 shows that the resulting inductive biases align with the task after training:

Of course, empirically estimating the inductive biases of a language model’s NTK after training is going to be very difficult. I’ve not found any papers which attempt such a feat.

At this point in the roundup, we’re moving on from architecture-entangled inductive biases / the NTK, and looking into the inductive biases of SGD itself.

Shift-Curvature, SGD, and Generalization

A longstanding debate surrounds the related hypotheses that low-curvature minima generalize better, and that SGD discourages curvature. We offer a more complete and nuanced view in support of both. First, we show that curvature harms test performance through two new mechanisms, the shift-curvature and bias-curvature, in addition to a known parameter-covariance mechanism. The three curvature-mediated contributions to test performance are reparametrization-invariant although curvature is not. The shift in the shift-curvature is the line connecting train and test local minima, which differ due to dataset sampling or distribution shift. Although the shift is unknown at training time, the shift-curvature can still be mitigated by minimizing overall curvature. Second, we derive a new, explicit SGD steady-state distribution showing that SGD optimizes an effective potential related to but different from train loss, and that SGD noise mediates a trade-off between deep versus low-curvature regions of this effective potential. Third, combining our test performance analysis with the SGD steady state shows that for small SGD noise, the shift-curvature may be the most significant of the three mechanisms. Our experiments confirm the impact of shift-curvature on test loss, and further explore the relationship between SGD noise and curvature.

My opinion:

This paper offers a fairly intuitive explanation for why flatter minima generalize better. Suppose the training and test data have distinct but nearby loss minima. Then the curvature around the training minimum acts as the second-order term in a Taylor expansion that approximates the expected test loss for models near the training minimum.

The paper then investigates the impact of gradient noise from SGD and finds that it biases models towards flatter regions of parameter space, even at the cost of worse training loss.

Implicit Gradient Regularization

Gradient descent can be surprisingly good at optimizing deep neural networks without overfitting and without explicit regularization. We find that the discrete steps of gradient descent implicitly regularize models by penalizing gradient descent trajectories that have large loss gradients. We call this Implicit Gradient Regularization (IGR) and we use backward error analysis to calculate the size of this regularization. We confirm empirically that implicit gradient regularization biases gradient descent toward flat minima, where test errors are small and solutions are robust to noisy parameter perturbations. Furthermore, we demonstrate that the implicit gradient regularization term can be used as an explicit regularizer, allowing us to control this gradient regularization directly. More broadly, our work indicates that backward error analysis is a useful theoretical approach to the perennial question of how learning rate, model size, and parameter regularization interact to determine the properties of overparameterized models optimized with gradient descent.

My opinion:

Even with full-batch gradient descent (so no gradient noise from SGD), it turns out that gradient descent’s discrete steps introduce an inductive bias into network training, and that we can analyze this bias with surprisingly straightforward methods. Like the noise from SGD, this inductive bias also pushes the model towards flatter regions of parameter space.
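This can be checked numerically. The IGR paper's modified loss is L̃(θ) = L(θ) + (h/4)‖∇L(θ)‖², where h is the learning rate. Backward error analysis says a gradient descent step of size h follows gradient flow on L̃ more closely than gradient flow on L. The sketch below uses a made-up one-dimensional loss, L(θ) = θ⁴/4. It takes one gradient descent step from θ = 1, then integrates both flows for time h with a fine Runge-Kutta solver. The error against the modified flow should be much smaller (order h³ rather than h²).

```python
def grad_loss(theta):
    # L(theta) = theta**4 / 4, so dL/dtheta = theta**3
    return theta ** 3


def grad_modified_loss(theta, h):
    # L_mod = L + (h / 4) * (dL/dtheta)**2 = theta**4 / 4 + (h / 4) * theta**6
    # dL_mod/dtheta = theta**3 + 1.5 * h * theta**5
    return theta ** 3 + 1.5 * h * theta ** 5


def gradient_flow(grad, theta, duration, substeps=1_000):
    """Integrate d(theta)/dt = -grad(theta) with classical fourth-order Runge-Kutta."""
    dt = duration / substeps
    for _ in range(substeps):
        k1 = -grad(theta)
        k2 = -grad(theta + 0.5 * dt * k1)
        k3 = -grad(theta + 0.5 * dt * k2)
        k4 = -grad(theta + dt * k3)
        theta += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return theta


h = 0.01      # learning rate
theta0 = 1.0  # starting point

gd_step = theta0 - h * grad_loss(theta0)
flow_plain = gradient_flow(grad_loss, theta0, h)
flow_modified = gradient_flow(lambda t: grad_modified_loss(t, h), theta0, h)

err_plain = abs(gd_step - flow_plain)
err_modified = abs(gd_step - flow_modified)
print(err_plain, err_modified)
```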

Limiting Dynamics of SGD: Modified Loss, Phase Space Oscillations, and Anomalous Diffusion

In this work we explore the limiting dynamics of deep neural networks trained with stochastic gradient descent (SGD). As observed previously, long after performance has converged, networks continue to move through parameter space by a process of anomalous diffusion in which distance travelled grows as a power law in the number of gradient updates with a nontrivial exponent. We reveal an intricate interaction between the hyperparameters of optimization, the structure in the gradient noise, and the Hessian matrix at the end of training that explains this anomalous diffusion. To build this understanding, we first derive a continuous-time model for SGD with finite learning rates and batch sizes as an underdamped Langevin equation. We study this equation in the setting of linear regression, where we can derive exact, analytic expressions for the phase space dynamics of the parameters and their instantaneous velocities from initialization to stationarity. Using the Fokker-Planck equation, we show that the key ingredient driving these dynamics is not the original training loss, but rather the combination of a modified loss, which implicitly regularizes the velocity, and probability currents, which cause oscillations in phase space. We identify qualitative and quantitative predictions of this theory in the dynamics of a ResNet-18 model trained on ImageNet. Through the lens of statistical physics, we uncover a mechanistic origin for the anomalous limiting dynamics of deep neural networks trained with SGD.

My opinion:

(see below)

Multiplicative noise and heavy tails in stochastic optimization

Although stochastic optimization is central to modern machine learning, the precise mechanisms underlying its success, and in particular, the precise role of the stochasticity, still remain unclear. Modelling stochastic optimization algorithms as discrete random recurrence relations, we show that multiplicative noise, as it commonly arises due to variance in local rates of convergence, results in heavy-tailed stationary behaviour in the parameters. A detailed analysis is conducted for SGD applied to a simple linear regression problem, followed by theoretical results for a much larger class of models (including non-linear and non-convex) and optimizers (including momentum, Adam, and stochastic Newton), demonstrating that our qualitative results hold much more generally. In each case, we describe dependence on key factors, including step size, batch size, and data variability, all of which exhibit similar qualitative behavior to recent empirical results on state-of-the-art neural network models from computer vision and natural language processing. Furthermore, we empirically demonstrate how multiplicative noise and heavy-tailed structure improve capacity for basin hopping and exploration of non-convex loss surfaces, over commonly-considered stochastic dynamics with only additive noise and light-tailed structure.

My opinion:

These papers dive more deeply into the structure and effects of gradient noise, modeling SGD as a diffusion process while making different assumptions about the structure of the gradient noise. They point to a picture where the ratio of learning rate to batch size controls a sort of exploration bias of SGD towards broader basins.
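A toy calculation shows why the ratio of learning rate to batch size acts like a temperature. Assume the simplest model: a one-dimensional quadratic basin with curvature a, and additive minibatch gradient noise with variance σ²/B. The multiplicative-noise paper argues that real SGD noise is richer than this, which is where the heavy tails come from, so treat this as a baseline only. The stationary variance of the parameter is then V = lr·σ²/(B·a·(2 − lr·a)). For small lr·a, this depends on lr and B mainly through lr/B. The (lr, B) pairs below are hypothetical.

```python
def stationary_variance(lr, batch_size, curvature, per_example_var):
    """Stationary variance of SGD on a 1D quadratic basin with additive minibatch noise.

    Model: theta <- theta - lr * (curvature * theta + noise), with noise variance
    per_example_var / batch_size. Solving V = (1 - lr*a)**2 * V + lr**2 * s2 / B
    gives V = lr * s2 / (B * a * (2 - lr * a)).
    """
    a, s2 = curvature, per_example_var
    if not 0 < lr * a < 2:
        raise ValueError("lr * curvature must lie in (0, 2) for SGD to be stable")
    return lr * s2 / (batch_size * a * (2 - lr * a))


def iterate_variance(lr, batch_size, curvature, per_example_var, steps=20_000):
    """The same quantity, obtained by iterating the variance recursion from V = 0."""
    v = 0.0
    for _ in range(steps):
        v = (1 - lr * curvature) ** 2 * v + lr ** 2 * per_example_var / batch_size
    return v


for lr, b in [(0.01, 1), (0.04, 4), (0.01, 4)]:  # hypothetical (learning rate, batch size) pairs
    v = stationary_variance(lr, b, curvature=1.0, per_example_var=1.0)
    print(f"lr={lr}, B={b}, lr/B={lr / b:.4f}, stationary variance={v:.6f}")
```

The first two settings share lr/B and give nearly the same spread. Keeping lr fixed and quadrupling the batch size shrinks the spread by roughly a factor of four.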

One interesting thing to note about these optimizer-induced inductive biases is that the human brain probably has very similar ones. E.g., the inductive bias found in Implicit Gradient Regularization arises because gradient descent does not take optimally sized steps for reducing training loss at each update. It seems very unlikely that neurons in the brain make optimal updates to minimize prediction error. That probably leads the brain to also steer towards flatter regions of its parameter space.

Similarly, the brain’s optimization process seems pretty noisy (and has batch size one), so the brain probably also mirrors the inductive biases that come from the noise in SGD updates.

Towards Theoretically Understanding Why SGD Generalizes Better Than ADAM in Deep Learning

It is not clear yet why ADAM-alike adaptive gradient algorithms suffer from worse generalization performance than SGD despite their faster training speed. This work aims to provide understandings on this generalization gap by analyzing their local convergence behaviors. Specifically, we observe the heavy tails of gradient noise in these algorithms. This motivates us to analyze these algorithms through their Levy-driven stochastic differential equations (SDEs) because of the similar convergence behaviors of an algorithm and its SDE. Then we establish the escaping time of these SDEs from a local basin. The result shows that (1) the escaping time of both SGD and ADAM depends on the Radon measure of the basin positively and the heaviness of gradient noise negatively; (2) for the same basin, SGD enjoys smaller escaping time than ADAM, mainly because (a) the geometry adaptation in ADAM via adaptively scaling each gradient coordinate well diminishes the anisotropic structure in gradient noise and results in larger Radon measure of a basin; (b) the exponential gradient average in ADAM smooths its gradient and leads to lighter gradient noise tails than SGD. So SGD is more locally unstable than ADAM at sharp minima defined as the minima whose local basins have small Radon measure, and can better escape from them to flatter ones with larger Radon measure. As flat minima here which often refer to the minima at flat or asymmetric basins/valleys often generalize better than sharp ones, our result explains the better generalization performance of SGD over ADAM. Finally, experimental results confirm our heavy-tailed gradient noise assumption and theoretical affirmation.

My opinion:

This is a very cool paper. It offers a (pretty plausible, IMO) account of how and why the two optimizers have different inductive biases. I think it’s a very good sign that we know enough about gradient descent that we can perform these sorts of analyses.

The Low-Rank Simplicity Bias in Deep Networks

Modern deep neural networks are highly over-parameterized compared to the data on which they are trained, yet they often generalize remarkably well. A flurry of recent work has asked: why do deep networks not overfit to their training data? In this work, we make a series of empirical observations that investigate and extend the hypothesis that deeper networks are inductively biased to find solutions with lower effective rank embeddings. We conjecture that this bias exists because the volume of functions that maps to low effective rank embedding increases with depth. We show empirically that our claim holds true on finite width linear and non-linear models on practical learning paradigms and show that on natural data, these are often the solutions that generalize well. We then show that the simplicity bias exists at both initialization and after training and is resilient to hyper-parameters and learning methods. We further demonstrate how linear over-parameterization of deep non-linear models can be used to induce low-rank bias, improving generalization performance on CIFAR and ImageNet without changing the modeling capacity.

My opinion:

The core intuition of this paper is that, when you multiply a bunch of matrices together, the rank of the composite operator is no higher than that of the lowest-rank component matrix. Models that operate by multiplying matrices together are thus biased towards implementing low-rank functions. Of course, adding residual connections counteracts this bias, since information flow is then no longer bottlenecked by the lowest-rank matrix in the model.
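Here is a small exact check of that rank argument. It uses rational arithmetic, so rank is computed without floating-point tolerance issues. The matrices are made up. A product that passes through a rank-1 factor stays rank 1. A residual-style factor (I + W), which computes x + Wx, is full rank in this example. In general I + W can be singular (e.g., W = −I), so this is an illustration rather than a guarantee.

```python
from fractions import Fraction


def rank(matrix):
    """Exact rank via Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def add_identity(m):
    """Return I + m, the linear map x -> x + m x used by a residual connection."""
    return [[m[i][j] + (1 if i == j else 0) for j in range(len(m))] for i in range(len(m))]


full = [[2, 1, 0], [0, 1, 3], [1, 0, 1]]  # invertible (determinant 5), rank 3
low = [[1, 2, 3], [2, 4, 6], [0, 0, 0]]   # rank 1

deep_linear = matmul(matmul(full, low), full)
with_residual = matmul(add_identity(low), full)
print(rank(full), rank(low), rank(deep_linear), rank(with_residual))
```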

On the Implicit Bias Towards Minimal Depth of Deep Neural Networks

Recent results in the literature suggest that the penultimate (second-to-last) layer representations of neural networks that are trained for classification exhibit a clustering property called neural collapse (NC). We study the implicit bias of stochastic gradient descent (SGD) in favor of low-depth solutions when training deep neural networks. We characterize a notion of effective depth that measures the first layer for which sample embeddings are separable using the nearest-class center classifier. Furthermore, we hypothesize and empirically show that SGD implicitly selects neural networks of small effective depths.

Secondly, while neural collapse emerges even when generalization should be impossible—we argue that the degree of separability in the intermediate layers is related to generalization. We derive a generalization bound based on comparing the effective depth of the network with the minimal depth required to fit the same dataset with partially corrupted labels. Remarkably, this bound provides non-trivial estimations of the test performance. Finally, we empirically show that the effective depth of a trained neural network monotonically increases when increasing the number of random labels in data.

My opinion:

This paper reflects my intuition that gradient descent is biased towards short paths, possibly because longer paths lose rank too quickly?
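Here is a minimal sketch of effective depth as the abstract describes it: the index of the first layer whose embeddings are separated by the nearest-class-center classifier on the training set. The `tolerance` parameter, the 0-based layer indexing and the toy embeddings are my own assumptions; the paper's exact thresholding may differ.

```python
import math


def nearest_center_accuracy(embeddings, labels):
    """Training accuracy of the nearest-class-center (NCC) classifier on one layer's embeddings."""
    centers = {}
    for cls in set(labels):
        members = [e for e, label in zip(embeddings, labels) if label == cls]
        centers[cls] = [sum(coords) / len(members) for coords in zip(*members)]
    correct = 0
    for e, label in zip(embeddings, labels):
        predicted = min(centers, key=lambda c: math.dist(e, centers[c]))
        correct += predicted == label
    return correct / len(labels)


def effective_depth(layer_embeddings, labels, tolerance=0.0):
    """Index of the first layer whose NCC training error is at most `tolerance` (None if none)."""
    for depth, embeddings in enumerate(layer_embeddings):
        if 1.0 - nearest_center_accuracy(embeddings, labels) <= tolerance:
            return depth
    return None


labels = [0, 0, 1, 1]
layers = [
    [[0.0], [3.0], [1.0], [2.5]],                      # layer 0: classes interleaved
    [[0.0, 0.0], [0.2, 0.1], [1.0, 1.0], [0.9, 1.1]],  # layer 1: classes separated
    [[0.0, 0.1], [0.1, 0.0], [2.0, 2.0], [2.1, 1.9]],  # layer 2: still separated
]
print(effective_depth(layers, labels))
```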

Why neural networks find simple solutions: the many regularizers of geometric complexity

In many contexts, simpler models are preferable to more complex models and the control of this model complexity is the goal for many methods in machine learning such as regularization, hyperparameter tuning and architecture design. In deep learning, it has been difficult to understand the underlying mechanisms of complexity control, since many traditional measures are not naturally suitable for deep neural networks. Here we develop the notion of geometric complexity, which is a measure of the variability of the model function, computed using a discrete Dirichlet energy. Using a combination of theoretical arguments and empirical results, we show that many common training heuristics such as parameter norm regularization, spectral norm regularization, flatness regularization, implicit gradient regularization, noise regularization and the choice of parameter initialization all act to control geometric complexity, providing a unifying framework in which to characterize the behavior of deep learning models.

My opinion:

I think that something like a bias towards low geometric complexity is at the core of how neural networks learn general solutions. Neural networks mostly seem to model the union of low-dimensional manifolds on which their input data lie, then sort of extrapolate the geometry of those manifolds to unseen data. Sudden deviations from the manifold geometry would lead to higher geometric complexity. Learning processes biased towards low geometric complexity tend not to have such deviations.
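The geometric complexity measure itself is easy to state: the mean, over a batch of inputs, of the squared norm of the model's input gradient (the discrete Dirichlet energy). Below is a sketch for scalar-output functions. It assumes finite-difference gradients in place of autodiff; for vector outputs, one would use the squared Frobenius norm of the Jacobian. The batch and the two test functions are hypothetical.

```python
import math


def geometric_complexity(f, batch, eps=1e-5):
    """Discrete Dirichlet energy: mean over the batch of ||grad_x f(x)||^2.

    f maps a list of floats to a float; gradients use central finite differences.
    """
    total = 0.0
    for x in batch:
        sq_norm = 0.0
        for i in range(len(x)):
            up = list(x)
            down = list(x)
            up[i] += eps
            down[i] -= eps
            sq_norm += ((f(up) - f(down)) / (2 * eps)) ** 2
        total += sq_norm
    return total / len(batch)


def smooth(x):
    return 3 * x[0] - x[1]  # gradient (3, -1) everywhere, so complexity 10


def wiggly(x):
    return math.sin(20 * x[0]) + x[1]  # gradient (20 cos(20 x0), 1)


batch = [[i / 10, (i % 3) / 3] for i in range(10)]  # hypothetical 2D inputs
print(geometric_complexity(smooth, batch), geometric_complexity(wiggly, batch))
```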

The Pitfalls of Simplicity Bias in Neural Networks

Several works have proposed Simplicity Bias (SB), the tendency of standard training procedures such as Stochastic Gradient Descent (SGD) to find simple models, to justify why neural networks generalize well [Arpit et al. 2017, Nakkiran et al. 2019, Soudry et al. 2018]. However, the precise notion of simplicity remains vague. Furthermore, previous settings that use SB to theoretically justify why neural networks generalize well do not simultaneously capture the non-robustness of neural networks, a widely observed phenomenon in practice [Goodfellow et al. 2014, Jo and Bengio 2017]. We attempt to reconcile SB and the superior standard generalization of neural networks with the non-robustness observed in practice by designing datasets that (a) incorporate a precise notion of simplicity, (b) comprise multiple predictive features with varying levels of simplicity, and (c) capture the non-robustness of neural networks trained on real data. Through theory and empirics on these datasets, we make four observations: (i) SB of SGD and variants can be extreme: neural networks can exclusively rely on the simplest feature and remain invariant to all predictive complex features. (ii) The extreme aspect of SB could explain why seemingly benign distribution shifts and small adversarial perturbations significantly degrade model performance. (iii) Contrary to conventional wisdom, SB can also hurt generalization on the same data distribution, as SB persists even when the simplest feature has less predictive power than the more complex features. (iv) Common approaches to improve generalization and robustness (ensembles and adversarial training) can fail in mitigating SB and its pitfalls. Given the role of SB in training neural networks, we hope that the proposed datasets and methods serve as an effective testbed to evaluate novel algorithmic approaches aimed at avoiding the pitfalls of SB.

My opinion:

This paper shows just how strong neural network simplicity biases are, and also gives some intuition for how the simplicity bias of neural networks differs from something like a circuit simplicity bias or a Kolmogorov simplicity bias. For example, neural networks don’t seem all that opposed to memorization. The paper shows examples of neural networks learning a simple linear feature that imperfectly classifies the data, then memorizing the remaining noise, despite there being a slightly more complex feature that perfectly classifies the training data. (I’ve checked: there’s no grokking phase transition, even after 2.5 million optimization steps with weight decay.)

It also shows how, depending on the data you’re trying to model, a simplicity bias may actually harm generalization.
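To make that setup concrete, here is a minimal sketch of a two-feature dataset in the spirit of the ones described: a simple linear coordinate whose sign predicts the label except on a fraction of flipped examples, and a 3-slab coordinate that separates the classes perfectly but only through a non-linear rule. The slab boundaries, the flip fraction, and the function names are assumptions chosen for illustration, not the paper's exact construction, and training a network on the data is left out.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[Tuple[float, float], int]  # ((x_lin, x_slab), label in {-1, +1})


def make_linear_slab_data(n: int, flip_frac: float = 0.1, seed: int = 0) -> List[Example]:
    """Hypothetical linear + 3-slab dataset; only the slab coordinate is fully predictive."""
    rng = random.Random(seed)
    data: List[Example] = []
    for _ in range(n):
        y = rng.choice([-1, 1])
        # Complex feature: positives sit in the middle slab, negatives in either
        # outer slab, so no single threshold on x_slab separates the classes.
        if y == 1:
            x_slab = rng.uniform(-0.2, 0.2)
        else:
            x_slab = rng.choice([-1, 1]) * rng.uniform(0.6, 1.0)
        # Simple feature: its sign matches the label, except on a flip_frac
        # fraction of examples where it is flipped (the "noise" to memorize).
        x_lin = y * rng.uniform(0.1, 1.0)
        if rng.random() < flip_frac:
            x_lin = -x_lin
        data.append(((x_lin, x_slab), y))
    return data


def accuracy(rule: Callable[[Tuple[float, float]], int], data: List[Example]) -> float:
    return sum(rule(x) == y for x, y in data) / len(data)


def linear_rule(x: Tuple[float, float]) -> int:
    return 1 if x[0] > 0 else -1


def slab_rule(x: Tuple[float, float]) -> int:
    return 1 if abs(x[1]) < 0.4 else -1


data = make_linear_slab_data(1000)
print(accuracy(linear_rule, data), accuracy(slab_rule, data))
```

The simplicity-bias failure mode described above corresponds to a network relying on `linear_rule`-like structure and memorizing the flipped examples, even though `slab_rule` fits every training example.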

Conclusion

My biggest takeaway from this review is that SGD has a lot of inductive biases. Even something as simple as the fact that SGD takes discrete, non-optimal update steps leads to systematic bias in the sorts of solutions found. Probably, there are lots of other inductive biases coming from interactions between architecture, data and optimizer.
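One well-studied instance of the discrete-step point is implicit gradient regularization (Barrett and Dherin), which the geometric complexity abstract above also lists: to leading order in the learning rate h, gradient descent follows gradient flow on a modified loss L + (h/4)·||∇L||², so it implicitly penalizes large gradients. The sketch below checks this numerically on a one-parameter quadratic L(θ) = aθ²/2, comparing one gradient-descent step against plain gradient flow and against gradient flow on the modified loss, each run for time h. The quadratic, a, h, and the starting point are arbitrary illustrative choices.

```python
import math


def gd_step(theta: float, a: float, h: float) -> float:
    """One gradient-descent step with learning rate h on L(theta) = a * theta**2 / 2."""
    return theta - h * a * theta


def gradient_flow(theta: float, a: float, t: float) -> float:
    """Exact solution at time t of d(theta)/dt = -dL/dtheta = -a * theta."""
    return theta * math.exp(-a * t)


def modified_flow(theta: float, a: float, h: float, t: float) -> float:
    """Exact gradient flow on L + (h/4) * (dL/dtheta)**2 = (a + h * a**2 / 2) * theta**2 / 2."""
    return theta * math.exp(-(a + h * a * a / 2) * t)


theta0, a, h = 1.0, 1.0, 0.1
target = gd_step(theta0, a, h)                               # 0.9
err_plain = abs(gradient_flow(theta0, a, h) - target)        # about 4.8e-3
err_modified = abs(modified_flow(theta0, a, h, h) - target)  # about 3.2e-4
print(err_modified < err_plain)  # True
```

Halving h to 0.05 shrinks the plain-flow error by roughly 4x but the modified-flow error by roughly 8x, consistent with the modified loss absorbing the leading O(h²) discrepancy between discrete steps and continuous flow.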

Also, inductive bias research is making a lot of progress. In particular, the NTK perspective on inductive bias seems to be quickly moving in a potentially valuable direction. If we can reach an okayish understanding of how the NTK evolves over training, and how the inductive biases supplied by the NTK relate to high-level cognitive properties, that might give us something like a non-closed-form account of path-dependent inductive biases.
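For readers less familiar with the NTK: the empirical NTK of a model f(x; θ) is the kernel Θ(x, x') = ∇_θ f(x) · ∇_θ f(x'). The sketch below computes it for a hypothetical one-hidden-layer tanh network with a scalar input, no biases, and hand-derived gradients, then trains it briefly with full-batch gradient descent to show that, at finite width, the kernel moves during training. The width, initialization, learning rate, and target function are all arbitrary illustrative choices.

```python
import math
import random
from typing import List, Tuple

Params = Tuple[List[float], List[float]]  # (input weights w, output weights v)


def model(params: Params, x: float) -> float:
    """f(x) = sum_j v_j * tanh(w_j * x)."""
    w, v = params
    return sum(vj * math.tanh(wj * x) for wj, vj in zip(w, v))


def param_grad(params: Params, x: float) -> List[float]:
    """Gradient of f(x) with respect to all parameters, ordered as w then v."""
    w, v = params
    grad_w = [vj * x * (1 - math.tanh(wj * x) ** 2) for wj, vj in zip(w, v)]
    grad_v = [math.tanh(wj * x) for wj in w]
    return grad_w + grad_v


def empirical_ntk(params: Params, xs: List[float]) -> List[List[float]]:
    """Gram matrix of parameter gradients: K[i][k] = grad f(x_i) . grad f(x_k)."""
    grads = [param_grad(params, x) for x in xs]
    return [[sum(a * b for a, b in zip(gi, gk)) for gk in grads] for gi in grads]


def loss(params: Params, xs: List[float], ys: List[float]) -> float:
    """Half mean squared error."""
    return 0.5 * sum((model(params, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(params: Params, xs: List[float], ys: List[float],
          lr: float = 0.1, steps: int = 200) -> Params:
    """Full-batch gradient descent on the loss above."""
    w, v = list(params[0]), list(params[1])
    n = len(w)
    for _ in range(steps):
        total = [0.0] * (2 * n)
        for x, y in zip(xs, ys):
            residual = model((w, v), x) - y
            for p, g in enumerate(param_grad((w, v), x)):
                total[p] += residual * g / len(xs)
        w = [wj - lr * g for wj, g in zip(w, total[:n])]
        v = [vj - lr * g for vj, g in zip(v, total[n:])]
    return (w, v)


rng = random.Random(0)
width = 8
init: Params = ([rng.gauss(0, 1) for _ in range(width)],
                [rng.gauss(0, 1) / math.sqrt(width) for _ in range(width)])
xs = [-1.0, -0.5, 0.5, 1.0]
ys = [math.sin(2 * x) for x in xs]

trained = train(init, xs, ys)
k_before, k_after = empirical_ntk(init, xs), empirical_ntk(trained, xs)
drift = max(abs(a - b) for ra, rb in zip(k_before, k_after) for a, b in zip(ra, rb))
print(loss(init, xs, ys), loss(trained, xs, ys), drift)
```

In the infinite-width limit this drift vanishes and the kernel stays fixed; understanding how it evolves at finite width is the open question the paragraph above points to.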

I’ve also updated towards humans and AIs having similar inductive biases. There are some inductive biases that I think we straight up share with AIs, such as those that come from making non-optimal / noisy parameter updates. I also think that humans have a fair bit of geometric simplicity bias, as indicated by the fact that most small perturbations to our visual / auditory inputs do not have very large impacts on how we process those inputs.

I hope readers find these papers useful for their own research. Please feel free to discuss the listed papers in the comments or recommend additional papers to me.

Honorable mentions

These are interesting papers that are related to inductive biases, but which I decided not to include in the roundup, both because I didn’t want to make the post too long, and because I’ve delayed the post long enough already.

Gradient Starvation: A Learning Proclivity in Neural Networks

SGD on Neural Networks Learns Functions of Increasing Complexity

Let’s Agree to Agree: Neural Networks Share Classification Order on Real Datasets

Learning through atypical “phase transitions” in overparameterized neural networks

Towards understanding deep learning with the natural clustering prior

Residual Networks Behave Like Ensembles of Relatively Shallow Networks

The Shattered Gradients Problem: If resnets are the answer, then what is the question?

Future

For next week’s roundup, I’m thinking the focus will be on techniques for chain-of-thought language models.

My other candidate focuses are:

Shape versus texture bias in neural nets / humans

Diffusion models

Controllable text generation

Structure and content of language model internal representations

Let me know if there are any topics you’re particularly interested in.

I feel like this explanation is just restating the question. Why are the minima of the test and training data often close to each other? What makes reality be that way?

You can come up with some explanation involving mumble mumble fine-tuning, but I feel like that just leaves us where we started.

My intuition: small changes to most parameters don’t influence behavior that much, especially if you’re in a flat basin. The local region in parameter space thus contains many possible small variations in model behavior. The behavior that solves the training data is similar to the behavior that solves the test data, because both are drawn from the same distribution. It’s thus likely that a nearby region in parameter space is a minimum for the test data.