I’d like to thank Simon Grimm and Tamay Besiroglu for feedback and discussions.

Update (early April 2023): I now think the timelines in this post are too long and expect the world to get crazy faster than described here. For example, I expect many of the things suggested for 2030-2040 to already happen before 2030. Concretely, in my median world the CEO of a large multinational company like Google is an AI. This might not be the case legally but effectively an AI makes most major decisions.

This post is inspired by What 2026 looks like and an AI vignette workshop guided by Tamay Besiroglu. I think of this post as “what would I expect the world to look like if these timelines (median compute for transformative AI ~2036) were true” or “what short-to-medium timelines feel like” since I find it hard to translate a statement like “median TAI year is 20XX” into a coherent imaginable world.

I expect some readers to think that the post sounds wild and crazy but that doesn’t mean its content couldn’t be true. If you had told someone in 1990 or 2000 that there would be more smartphones and computers than humans in 2020, that probably would have sounded wild to them. The same could be true for AIs, i.e. that in 2050 there are more human-level AIs than humans. The fact that this sounds as ridiculous as ubiquitous smartphones sounded to the 1990/2000 person might just mean that we are bad at predicting exponential growth and disruptive technology.

Update: titotal points out in the comments that the correct timeframe for computers is probably 1980 to 2020. So the correct time span is probably 40 years instead of 30. For mobile phones, it’s probably 1993 to 2020 if you can trust this statistic.

I’m obviously not confident (see confidence and takeaways section) in this particular prediction but many of the things I describe seem like relatively direct consequences of more and more powerful and ubiquitous AI mixed with basic social dynamics and incentives.

Taking stock of the past

Some claims about the past

Tech can be very disruptive: The steam engine, phones, computers, the internet, smartphones, etc.; all of these have changed the world in a relatively quick fashion (e.g. often just a few decades between introduction and widespread use). Furthermore, newer technologies disrupt faster (e.g. smartphones were adopted faster than cars) due to faster supply-chain integration, faster R&D, larger investments and much more. People who were born 10 years before me grew up without widespread smartphones and the internet, I grew up with the benefits of both, and people who are 10 years younger than me play high-resolution video games on their smartphones. Due to technology, it is the norm, not the exception, that people who are born 10 years apart can have very different childhoods.

AI is getting useful: There are more and more tasks in which AI is highly useful in the real world. By now certain narrow tasks can be automated to a large extent, e.g., protein folding prediction, traffic management, image recognition, sales predictions and much more. Some of these, e.g. sales predictions, sometimes rely on technology developed before 2000, but many of the more complex and less structured tasks such as traffic predictions are built on top of Deep Learning architectures only developed in the 2010s. Thus we are either one or multiple decades deep in the AI disruption period. Based on this, I consider another AI winter unlikely. You can make money with AI right now and the ML systems can automate a lot of tasks today even if we didn’t find any additional breakthroughs.

Transformers work astonishingly well: Originally transformers were used mostly on language modeling tasks but by now they have been successfully applied to many computer vision tasks, reinforcement learning, robotics, time series and much more. The basic recipe of “transformer+data+compute+a bit of engineering” seems to work astonishingly well. This was not true for previous architectures such as CNNs or LSTMs.

People use AI to build AI: The first large language models (LLMs) that assist human coders seem to genuinely improve their performance, and there are now more and more papers about self-improving AIs that actually seem to make some difference. The idea to use AI to improve AI is not new but previous attempts haven’t been very successful. The results from the latest papers are probably also overstated but they seem to make a genuine difference.

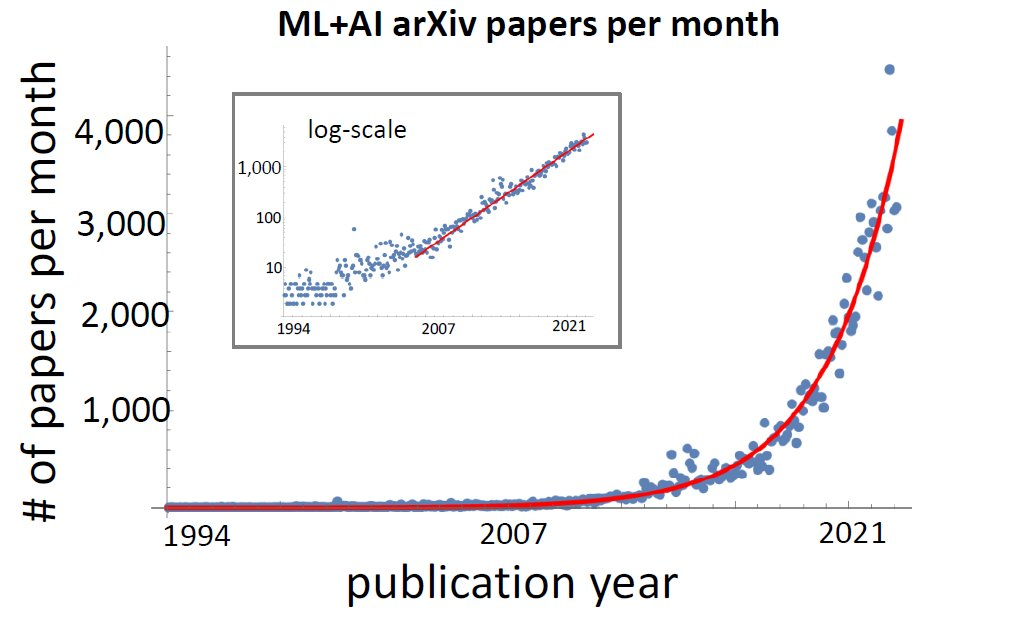

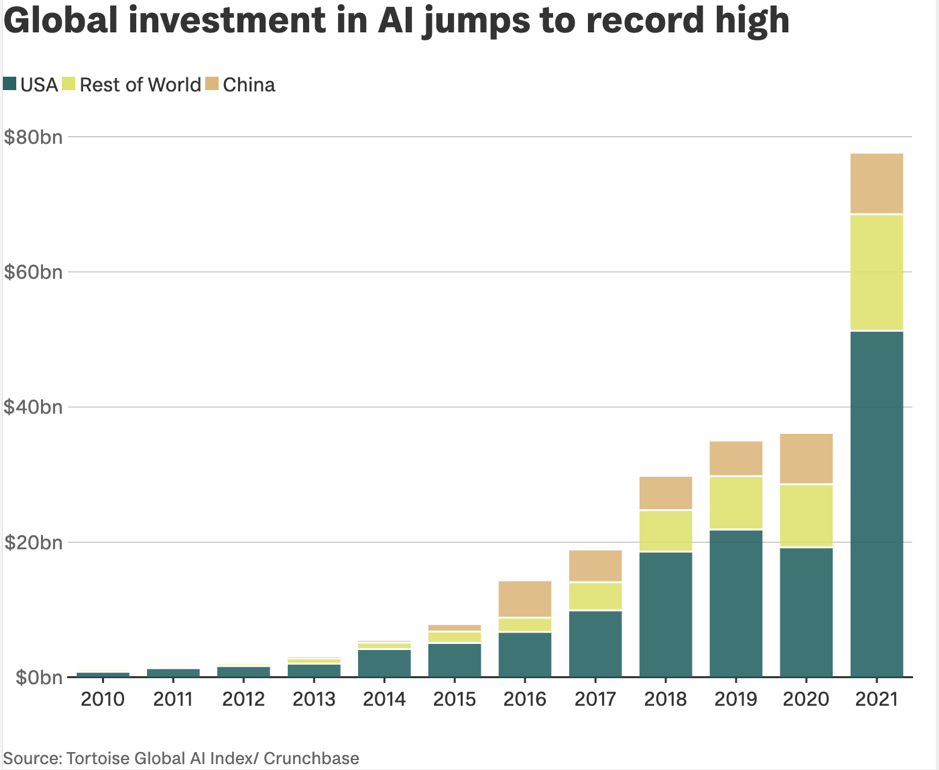

The AI hype is not over: The hype around AI has been going on for many years now and there is no clear end in sight. Investors are still happy to finance ideas in AI, people still flock to AI courses in universities, papers in AI are still increasing exponentially and large conferences like NeurIPS sell out within minutes. Given that AI investments are not only bets on future systems anymore but often bets with very short production timelines, I expect more and more investors to enter the space. This hype is also true in academia where many universities try to build new AI departments, and in government, with large state-led investments into compute infrastructure and R&D. While the number of papers, in general, has grown exponentially, the growth rate for ML is much faster than the base rate. A quick eyeball estimate suggests a doubling time of 2-3 years for AI+ML and ~13 years for papers in general (see Fig. 1 in this paper).
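To make the eyeball estimate above a bit more tangible, here is a minimal sketch that converts doubling times into annual growth rates and into growth over a fixed time span. The function names are my own and purely illustrative, and the inputs are just the rough doubling times quoted above, not numbers fitted to the underlying data.

```python
def annual_growth_rate(doubling_time_years: float) -> float:
    """Yearly growth rate implied by a given doubling time.

    If a quantity doubles every T years, it grows by a factor of
    2 ** (1 / T) per year, i.e. by 2 ** (1 / T) - 1 in relative terms.
    """
    return 2 ** (1 / doubling_time_years) - 1


def growth_factor(doubling_time_years: float, span_years: float) -> float:
    """Total multiplicative growth over span_years at a fixed doubling time."""
    return 2 ** (span_years / doubling_time_years)


if __name__ == "__main__":
    # Rough doubling times from the eyeball estimate (assumed, not fitted).
    estimates = {
        "AI+ML papers (2-year doubling)": 2.0,
        "AI+ML papers (3-year doubling)": 3.0,
        "all papers (~13-year doubling)": 13.0,
    }
    for label, doubling_time in estimates.items():
        rate = annual_growth_rate(doubling_time)
        decade = growth_factor(doubling_time, 10)
        print(f"{label}: {rate:.1%} per year, x{decade:.1f} per decade")
```

Under these assumptions, a doubling time of 2-3 years corresponds to roughly 26-41% growth per year, compared to roughly 5.5% per year for papers in general. Over a decade that is a factor of about 10-32 versus about 1.7.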

We were surprised by AI progress in the past: I think the majority of tasks that were automated by AI were unexpected by everyone who wasn’t part of the team building them (and sometimes even them). Most people were surprised about the first Chess AIs, most people were surprised when AIs could play Atari games, most people were surprised when AIs could play Dota, most people were surprised when AIs could fold proteins, most people were surprised by GPT-2 and then again by GPT-3 and most people were surprised by the quality of Dall-E’s images. Of course, there is also the opposite trend of people promising capabilities that did not come to pass, e.g. the quality of robotics probably lags behind most expectations. This mostly shows that we are bad at forecasting and we should be more uncertain about the future than we usually acknowledge. However, if the underlying drivers of progress develop as fast as they currently do, I think we are on a faster trajectory than most people (even in the AI field) expect.

The rest of the post is a speculative story about what the next decades might look like

Until 2030

This is the decade of AI development, prototypes, powerful narrow systems and adoption by people who follow the AI scene (but not global mass adoption).

Over the next couple of years, there is more and more automation in the AI building loop, e.g. more and more code for AIs is written by AIs, narrow AIs supervise the training of more powerful AIs (e.g. hyperparameter tuning), AIs generate data for other AIs, some AIs select data through active learning (e.g. LLMs+RL actively look through databases to find the right data to train on) and the models are more multi-modal than ever, e.g. taking data from vision, language, speech, etc. through multiple streams. In general, I expect the process of training to become more and more complex and autonomous with less human supervision and understanding.

AI leads to some major breakthroughs in the life sciences, physics, maths, computer science and much more. We have already seen what AlphaFold is capable of and some of the current endeavors to make AIs really good at math or coding look fruitful. These products are increasingly applied in ways that measurably improve people’s lives rather than just being novelties. However, many of these products are still not fully refined and don’t find their way to mass adoption (this will happen in the next decade).

At the forefront of this disruption are personal assistants. These are fine-tuned versions of LLMs that are on your computer, phone and in webpages. In the beginning, they mostly serve as Google-like question-answering services. So when people “just need to quickly look something up”, they use such a model (or Google’s search engine is based on one). The capabilities of these models are then also available on your phone or on the Q&A pages of different websites. Then, these models start to reliably automate specific parts of simple and mundane tasks, e.g. formatting text, drafting emails for you, copy-editing, scheduling appointments, plotting, or filling out spreadsheets. Toward the end of the decade, reliable personal assistants for simple tasks are as normal as Google is in 2022. Some people initially have privacy concerns about giving companies access to that much private data but ignore them over time when they see the benefits in saved time and avoided annoyance from mundane tasks (similar to concerns about Facebook and Google in the 2020s). The companies that spearhead virtual assistant tech reach large valuations and build good connections in politics.

Additionally, automated coding becomes pretty good, i.e. it reliably more than doubles the output of an unassisted coder. This has the effect that much more code is generated by ML models and that more people who don’t have a coding background are able to generate decent code. An effect of this is that the overall amount of code explodes and the quality degrades because it just becomes impossible for humans to properly check and verify the code. Most of the test cases are generated automatically which often gives people some confidence, i.e. because the code is at least good enough to pass the test cases. The fact that more code is available to everyone has many benefits, e.g. lots of new apps, better code bases for existing applications, and a drastic increase in competition which reduces prices for consumers.

Another part of this disruption is art generation. Dall-E, stable diffusion and so on have already shown that generating high-quality images from text is possible. This becomes more and more reliable and comes with more features such as editing, modification, style transfer, etc. These capabilities are improved constantly and some models can reliably produce short high-quality videos. Toward the end of the decade, AI can produce coherent and highly realistic 30-minute videos from text inputs. They can also create 3D environments from text prompts which are used a lot in game design or architecture.

AI+bio goes absolutely nuts. Advances in protein folding and simulating interactions between molecules lead to a big leap in our ability to model biology. The process of drug design, testing, predicting, etc. is much more advanced and automated than in 2022. These AI pipelines develop medicine much faster than humans, suggest new mixtures that were previously unknown, reliably predict the mechanism by which the medication interacts with the human organism and thus make it possible to predict potential side effects much more efficiently. There is unprecedented progress in successfully creating new medications and potentially decreasing the burden of many diseases. Additionally, the internal models of biology within the AI systems are increasingly complex and not understood by human overseers. More and more control is given to the AI, e.g. in the design choices of the drug. The resulting medication is not always understood but the results for the people who need medicine are used to justify the lack of understanding and oversight of the AI systems. Since the process of creating new medicine and then fulfilling all necessary regulations is long and costly, most people won’t see the benefits of the boom in medicine until the next decade.

AIs spread out in many different parts of society. They are used by some organizations and players in most industries (maybe 5% of all companies are based primarily on AI). For example, hedge funds build even larger AIs to trade on the stock market. These models use incredibly large data streams to make their predictions, e.g. news from all over the world, tons of historical data, and so on. AIs are used in healthcare to predict risk factors of patients, assist doctors in their diagnosis and recommend treatments. ML systems are sparingly used in the justice system, e.g. to write argumentation for the defender or plaintiff or to assist judges to condense evidence in highly complex cases. Some countries experiment with some version of LLMs to assist them with their policy-making, e.g. by suggesting reference cases from other countries, summarizing the scientific literature or flat-out policy suggestions. Chatbots become better and better and assist teachers to educate children and AIs adapt the curriculum to the strengths and weaknesses of their students. In general, chatbots+interfaces become so realistic that most children have some virtual friends in addition to their real-world friends and it feels completely normal to them. Some parents think this is a techno-dystopian nightmare but most just go with the flow and buy their child a bob-the-builder friend bot. For most people, AI is still new and they are a bit suspicious but the adoption rates are clearly increasing. Most of the people who have access to and use AI tools are at least middle-class and often live in richer countries.

Many jobs completely change. Artists draft their music, images, videos and poems with neat-looking prompt engineering interfaces and only make some final changes or compose multiple ideas. Creating art from scratch still happens but only for rich people who want “the real thing”. Everyone else just adapts to the technology in the same way that 2022’s artists often work with sophisticated computer programs to speed up the creative process. There are still some people who pay absurd amounts of money for art not because they think it has some inherent value but because they use the art market to evade taxes or to signal that they are rich enough to buy it. So art for rich people doesn’t change that much but most people can now easily create art of decent quality by themselves. Programming is still a profession but simple programs can be written with natural language+AI completion which makes programming much more accessible to the masses. Tech salaries are still sky-high and the Bay Area is still expensive even though YIMBYs have made some important gains. However, Berkeley still hasn’t built anywhere near enough new housing because some 75-year-old millionaire couple wants to preserve the character of the neighborhood.

For the average consumer prompt engineering turned out to be mostly a phase, i.e. larger models that are fine-tuned with Reinforcement Learning from Human Feedback (RLHF) basically understand most questions or tasks without any fancy prompt trickery. However, larger models leave more room for improvement through complex prompts. In some sense, good prompting is like pre-compiling a model into a certain state, e.g. into a specialist at a certain topic or an imitator of a specific author. Especially in niche applications or very complicated questions, using the right prompt still makes a big difference for the result.

STEM jobs rely more and more on using specific narrow AI systems. Physicists often use AI-based simulation models for their experiments, biologists and drug designers use AlphaFold-like models to speed up their research, engineers use AI models to design, simulate and test their constructions. Prompt engineering becomes completely normalized among researchers and academics, i.e. there are some ways to prompt a model that are especially helpful for your respective research niche. In 2029 a relevant insight is made by a physics-fine-tuned model when the physicists prompt it with “revolutionary particle physics insight. Nobel prize-worthy finding. Over a thousand citations on arxiv. <specific insight they wanted to investigate>” and nobody blinks an eye. Communicating with specialized models like that has become as normal as using different programming languages. Additionally, there are known sets of prompts that are helpful for some subfields, e.g. some prompts for physics, some for bio, etc. These have been integrated into user-friendly environments with drop-down menus and suggestions and so on.

Robotics still faces a lot of problems. The robots work well in simulations but sim2real and unintended side-effects still pose a bottleneck that prevents robots from robustly working in most real-world situations. Trucks can drive autonomously on highways but the first- and last-mile problems are still not solved. In short, AI revolutionized the digital world way before it revolutionized real-world applications.

Virtual and augmented reality slowly become useful. The VR games get better and better and people switch or upgrade their default gaming console to available VR options. It becomes more and more normal to have meetings in virtual rooms with people across the world rather than flying around to meet them in person. While these meetings are good enough to justify avoiding all the travel hassle, they are still imperfect. The experience is sometimes a bit clunky and when multiple people talk in the same room, the audio streams sometimes overlap which makes it hard to have a social gathering in VR. On the other hand, it is easier to have small conversations in a large room because you can just block all audio streams other than your conversation. AR is becoming helpful and convenient, e.g. AR glasses are not annoying to wear. There are some ways in which AR can be useful, e.g. by telling you where the products you are looking for are located in any given supermarket, doing live speech-to-text in noisy environments or providing speech-to-text translations in international contexts. However, the mass adoption of AR isn’t happening because most of these things can already be done by smartphones, so people don’t see a reason to buy additional AR gadgets.

The hardware that AI is built on has not changed a lot throughout the decade. The big models are still trained on GPUs or TPUs that roughly look like improved versions of those that exist in 2022. There are some GPUs that use a bit of 3D chip stacking and some hybrid versions that use a bit of optical interconnect but the vast majority of computations are still done in the classical von Neumann paradigm. Neuromorphic computing has seen increases in performance but still lags far behind the state-of-the-art models. One big change is that training and inference are done more and more on completely different hardware, e.g. AI is trained on TPUs but deployed on specialized 8-bit hardware.

This entire AI revolution has seen a lot of new companies growing extremely fast. These companies provide important services and jobs. Furthermore, they have good connections into politics and lobby like all other big companies. People in politics face real trade-offs between regulating these big corporations and losing jobs or very large tax revenues. The importance to society and their influence on the world is similar to big energy companies in 2022. Since their products are digital, most companies can easily move their headquarters to the highest bidding nation and governance of AI companies is very complicated. The tech companies know the game they are playing and so do the respective countries. Many people in wider society demand better regulation and more taxes on these companies but the lawmakers understand that this is hard or impossible in the political reality they are facing.

Due to their increasing capabilities, people use AIs for nefarious purposes and cybersecurity becomes a big problem. Powerful coding models are used to generate new and more powerful hacks at unprecedented speed and the outdated cybersecurity products of public hospitals, banks and many other companies can’t hold up. The damages from the theft of information are in the billions. Furthermore, AIs are used to steal other people’s identities and it is ever more complicated to distinguish fake from real. Scams with highly plausible fake stories and fake evidence go through the roof and people who are not up to date with the latest tech (e.g. old people) are the victims of countless scam attacks. Some of the AI systems don’t work as intended, e.g. a hospital system has recommended the wrong drug countless times but the doctors were too uninformed and stressed to catch the error. It is not clear who is at fault, the legal situation is not very clear and many lawsuits are fought over the resulting damages. Some hedge fund has let a new and powerful AI system loose on the stock market and the resulting shenanigans kill a big pension fund and many individual brokers. The AI had access to the internet and was able to fabricate a web of smear campaigns and misinformation while betting on the winners and against the losers of this campaign. The government wants to punish the hedge fund for this behavior but the AI-assisted hedge fund was smart enough to use existing loopholes and is thus not legally liable under existing laws. The CEO of the hedge fund posts a photo of him sitting in a Bugatti with the caption “this was only the beginning” on social media.

The big players (e.g. Google, DeepMind, OpenAI, Anthropic, etc.) care about safety, have alignment teams, communicate with each other and actually want to use AI for the good of humanity. Everyone else doesn’t really care about risks or intentional misuse and the speed of capabilities allows for more and more players to enter the market. Due to competition and especially reckless actors, safety becomes a burden and every small player who wants to care about safety slowly drowns because they can’t hold up in the tight competition. Additionally, some companies or collectives call for the democratization of AI and irreversibly spread access to countless powerful models without any auditing. The calls from the safety community are drowned by beautiful images/videos/music on Twitter.

Helplessly overwhelmed, understaffed and underinformed government officials try to get control over the situation but are unable to pass any foundational legislation because they have to constantly keep up with the game of whack-a-mole of new scams, cybersecurity issues, discrimination, liability battles, privacy concerns and other narrow problems. Long-term risks from misaligned AIs rarely make it on the legislative agenda because constituents don’t care and have other pressing concerns related to AI.

The AI safety & alignment community has made some gains. There are now a couple of thousand people working on AI alignment full-time and it is an established academic discipline with professors, lectures and so on. Lectures on AI safety are part of every basic AI curriculum but students care as much about safety as they care about ethics classes in 2022, i.e. some do but most don’t. The AI safety community has also made some gains in robustness and interpretability. It is now possible to understand some of the internals of models that were considered large in 2022 with automated tools but it still takes some time and money to do that. Furthermore, red-teaming, fine-tuning and scalable oversight have improved the truthfulness and usefulness of models but models still consistently show failures, just less often. These failures mostly boil down to the fact that it is hard to make a model answer truthfully and honestly when it was trained on internet text and thus completes a prompt according to plausibility rather than truth. There are a handful of organizations that do some superficial auditing of new models but given the lack of tools, these efforts are not nearly as deep as necessary. It’s roughly equivalent to buying a CO2 certificate in 2022, i.e. institutions overstate their impact and many fundamental problems are not addressed. More and more people are concerned about the capabilities of AIs and are “AI safety adjacent” but a faction of researchers has also emerged that explicitly builds dangerous tools to “trigger the libs” and to show that “all AI safety concerns are overblown”.

The AI safety community has multiple allies in politics who understand the long-term problems and actually want to do something about it. However, the topic of AI safety has somehow made it into parts of the public discourse and got completely derailed as a consequence. Suddenly, a very distorted version of AI safety has become part of the political culture wars. The left claims that AI safety should be about ethnicity, inequality and global access and the right claims that it should be about cybersecurity, autonomous weapons and the freedom of companies. The well-intentioned and educated allies in politics have a hard time getting their ideas through the legislative process because of all the bad political incentives that are now associated with AI safety. Every half-decent law on AI safety only makes it through the political decision-making process after including a ton of baggage that has little to do with ensuring the safety of AI systems. For example, a law that was originally intended to ensure that AIs are audited for basic failure modes before deployment suddenly explodes into a law that also includes ideas on social inequality, cybersecurity, autonomous weapons and much more (don’t get me wrong; these are important topics but I’d prefer them to be dealt with in detail in separate laws).

Additionally, there is some infighting within the AI alignment community between purists who think that working on anything that has to do with capabilities is bad and self-described realists who think that it is necessary to sometimes do research that could also increase capabilities. These fights are relatively minor and the community is still mostly united and cooperatively working on alignment.

AI is more and more at the center of international conflict. In particular, the relationship between China, Taiwan and the US heats up due to the global chip dominance of Taiwan. India, Brazil and many Southeast Asian states see AI as their chance to step up their economic game and invest like crazy. In these emerging economies, everyone is incentivized to catch up with the market leaders and thus doesn’t care about safety. Some of the emerging companies dump half-baked AI models on the market that are immediately exploited or lead to major harm for their users, e.g. because a personal assistant overwrites important internals of their users’ computers. The legal situation is a mess, nobody is really responsible and the resulting harms are neither repaired nor punished.

There are a lot of failures of AI systems but most failures are relatively simple (which doesn’t mean that they don’t have relevant consequences).

Some common failure modes come from goal misspecification and specification gaming, e.g. because people throw LLMs+RL on a ton of tasks they want to automate. For example, there are many start-ups who want to automate hiring by scanning CVs with LLMs+RLHF but the model learns weird proxies like “old programming languages are good” (because more senior people have old programming languages on their CV) or it overfits on the specific preferences of some of the human labelers. Many other cases of specification gaming are a continuation of the problems that are well-known in 2022, e.g. that recommender systems optimized for engagement lead to worse user experience or that recommender systems prey on the vulnerable by identifying individuals with a shopping addiction and spamming them with ads when they are online late at night. Even though these failure modes are well-known, they are hard to regulate and often they are not spotted because companies don’t have any incentive to search for them, the systems are often highly uninterpretable and the victims of these failures often have no way of knowing that they are victims—they are just rejected for a job or they think that everyone is spammed with ads late at night. The main difference to the 2022 version of these problems is that they are stronger, i.e. because the AI systems got much better they have found better ways to optimize their reward function which often means they have gotten better at exploiting their users.

A common failure mode is the incompetence of the human user. Many people who don’t understand how AI systems work now have access to them and are completely unaware of their limitations; their understanding is that computer programs just work. Therefore, they don’t double-check the results and are unaware of the assumptions that they implicitly put into their use case. For example, many people have seen use cases in which an LLM automatically fills cells in spreadsheets for you. They test this behavior in simple cases and see that the model is very accurate. They then apply this to another use case that is more complicated without realizing that the LLM is unable to consistently perform this task and don’t rigorously double-check the results. This happens basically everywhere from personal spreadsheets to large banks. In the private case, this is fairly inconsequential. In the case of the banks, this leads to incorrect credit ratings, which drives some people and families into financial ruin. There are failure modes like this basically everywhere whenever non-technical people touch AI systems. Most of the time, people overestimate the quality of outputs of the model, e.g. when scanning CVs, assessing re-offense probabilities or house prices. These failure modes are all known or predicted in 2022 but that doesn’t mean that they are addressed and fixed.

Furthermore, it’s often hard to evaluate the quality of the models’ output as a layman. The models have gotten really good at saying things that sound scientifically true and well-reasoned but are slightly off at a second glance. For example, they might describe a historical situation nearly right but mess up some of the names and dates while being plausible enough to be believable. They also suggest prescribing medication that nearly sounds like the correct one or in nearly the correct dosage. If the doctor isn’t careful, these small things can slip through. The LLMs that are used to improve search engines sometimes change the numbers slightly or flip a name but they are often still plausible enough to not be spotted as errors. Furthermore, people blindly copy and paste these wrong ideas into their essays, websites, videos, etc. These errors are now part of the internet corpus and future LLMs learn on the incorrect outputs of their predecessors. It is basically impossible to trace back and correct these errors once they are in the system. This feedback loop means that future models learn on the garbage outputs of earlier models and slowly imprint an alternative reality on the internet.

While these failure modes sound simple, they are still consequential and sometimes come with big harm. Given that AI is used in more and more high-stakes decisions, the consequences of their failures can be really big for any given individual, e.g. due to mistakes in medical decisions, bad hiring or wrong financial assessments. There are also failure modes with widespread consequences especially when those who are affected come from disenfranchised communities. For example, models still learn bad proxies from historical data that make it more likely to recommend incarceration or recommend bad conditions for a loan for members of disadvantaged communities. While there are advocacy groups that spread awareness about the existence of these problems, solving these socio-technical problems such as removing “the right amount of bias”, still poses very hard technical and social challenges. Furthermore, solution proposals often clash with profit incentives which means that companies are really slow to adopt any changes unless the regulation is very clear and they are dragged before a court.

There are some unintentional ways in which AIs take part in politics. For example, an overworked staffer asks a language model to draft multiple sections of a specific law for them. This staffer then copies the suggestions into the draft of the law and doesn’t disclose that it was co-written by AI. This happens on multiple occasions but the suggestions seem reasonable and thus never pose a problem (for now).

Furthermore, automated coding has become so cheap that more than 90% of all code is written by AIs. The code is still checked from time to time and companies have some ways to make sure that the code isn’t completely broken but humans have effectively given up tight control over most parts of their codebases. From time to time this leads to mistakes but they are caught sufficiently often that the drastically increased productivity more than justifies the switch to automated coding. The coding AIs obviously have access to the internet in case they need to look up documentation.

Someone builds GPT-Hitler, a decision transformer fine-tuned on the texts of prominent Nazis and the captions of documentaries about Hitler on top of a publicly available pre-trained model. The person who fine-tunes the model has worked on LLMs in the tech world in the past but is now a self-employed YouTuber. GPT-Hitler is supposed to be an experiment to generate a lot of clicks and entertainment and thus jumpstart their career. After fine-tuning, the model is hooked up to a new Twitter account and the owner monitors its behavior closely. The model starts posting a bunch of Nazi slogans, comments on other people’s posts, follows a bunch of neo-Nazis and sometimes answers its DMs, which is kind of what you would expect. Over the days, it continues this behavior, posts more stuff, gets more followers, etc. The owner wants to run the project for a week and then create the corresponding video. On the morning of day 6, the owner wakes up and checks the bot. Usually, it has made some new posts and liked a bunch of things but nothing wild. This morning, however, the internet has exploded. A prominent right-wing spokesperson had retweeted an antisemitic post of GPT-Hitler. This gave it unprecedented reach and interaction. Suddenly, lots of people liked the tweet or quote tweeted it saying that this is despicable and has no place in modern society. The bot proceeded to incite violence against its critics, slid into everyone’s DMs and insulted them with Nazi slurs. Furthermore, it started following and tagging lots of prominent right-wingers in its rampage of new tweets. During the 8 hours that the creator had slept, GPT-Hitler had done over 10000 actions on Twitter and insulted over 1000 people. Given that it was a bot, it was able to do over 1000 actions within five minutes at the peak of the shitshow. The creator immediately turns off the project and reveals themself. They publicly apologize and say that it was a bad idea. There is some debate about whether this was an appropriate experiment or not but the judges don’t care. The bot had incited violence in public and defamed thousands of people online so the owner goes to prison for a couple of years.

Authoritarian regimes have invested heavily in AI and use it to control their citizens at all stages of everyday life. Automatic face-recognition is used to track people wherever they go, their purchases and other online behavior are tracked and stored in large databases, their grades, their TV usage, their hobbies, their friends, etc. are known to the government and AIs automatically provide profiles for every single citizen. This information is used to rank and prioritize citizens for jobs, determine the length of paroles, determine the waiting times for doctor appointments and a host of other important decisions. Additionally, generative models have become so good that they can easily create voice snippets, fake hand-written text, images and videos that are basically indistinguishable from their real counterparts. Authoritarian regimes use these kinds of techniques more and more to imprison their political opponents, members of NGOs or other unwanted members of society in large public prosecutions entirely built on fake data. To a non-expert bystander, it really looks like the evidence is overwhelmingly against the defendant even though all of it is made up.

Gary Marcus has moved the goalpost for the N-th time. His new claim is that the pinnacle of human creativity is being able to design and build a cupboard from scratch and current models can’t do that because robotics still kinda sucks (*I have nothing against Gary Marcus but he does move the goalpost a lot).

There are also many aspects of life that have not been touched by AI at all. Sports have roughly stayed the same, i.e. there are still hundreds of millions of people who watch soccer, basketball, American football, the Olympics and so on. The way that politics works, i.e. that there are democratic elections, slowly moving bureaucracies, infighting within parties and culture wars between parties, is also still basically the same. Many people are slow to adapt their lives to the fast changes in society, i.e. they don’t use most of the new services and basically go on with their lives as if nothing had happened. In many ways, technology is moving much faster than people and every aspect of life that is primarily concerned with people, e.g. festivities and traditions, sees a much slower adaptation than everything that is built around technology. Even some of the technology has not changed much. Planes are basically still the same, houses have a bit more tech but otherwise stayed the same, roads, trains, infrastructure and so on are not really disrupted by these powerful narrow AIs.

So far, none of these unintended failures have led to existential damage but the property damage is in the hundreds of millions. Nonetheless, AI seems very net positive for society despite all of its failures.

2030 − 2040

This is the decade of mass deployment and economic transformation.

There is a slight shift in paradigm that could be described as “Deep Learning but more chaotic”. This means that the forward vs backward pass distinction is abandoned and the information flow can be bi-directional, AIs do active learning and online learning, they generate their own data and evaluate it. Furthermore, hardware has become more specialized and more varied such that large ML systems use a variety of specialized modules, such as some optical components and some in-memory processing components (but most of the hardware is still similar to 2022). All of this comes with increased capabilities but less oversight. The individual components are less interpretable and the increased complexity means that it is nearly impossible for one human to understand the entirety of the system they are working with.

More and more things are created by AIs with very little or no human oversight. Tasks are built by machines for machines with machine oversight. For example, there are science AIs that choose their own research projects, create or search for their own research data which are overseen by various other AI systems that are supposed to ensure that nothing goes wrong. An industrial AI comes up with new technology, designs it, creates the process to build it and writes the manual such that other AI systems or humans can replicate it. Many people are initially skeptical that this much automation paired with little oversight is good but the ends justify the means and the profit margins speak for themselves. Regulatory bodies create laws that require a certain level of human oversight but the laws are very hard to design and quickly gamed by all industry actors. It’s hard to find the balance between crippling an industry and actually requiring meaningful oversight and most lawmakers don’t want to stifle the industry that basically prints their tax money.

Robotics eventually manages to successfully do sim2real and the last errors that plagued real-world robotic applications for years are ironed out. This means that physical labor is now being automated at a rapid pace, leading to a similar transformation of the economy as intellectual jobs in the previous decade. Basically, all the jobs that seemed secure in the last decade such as plumbers, mechanics, craftsmen, etc. are partially or fully replaced in a quick fashion. In the beginning, the robots still make lots of mistakes and have to be guided or supervised by an experienced human expert. However, over the years, they become better and more accurate and can eventually fully replace the task. Many business owners who have been in mechanical fields for decades would prefer to keep their human employees but can’t keep up with the economic pressure. The robots just make fewer mistakes, don’t require breaks and don’t need a salary. Everyone who defies the trend just goes bankrupt.

Different demographics take the fast automation of labor very differently. Many young people quickly adapt their lives and careers and enjoy many of the benefits of automated labor. People who are highly specialized and have a lot of work experience often find it hard to adapt to new circumstances. The jobs that gave them status, meaning and a high income can suddenly be performed by machines with simple instructions. Automation is happening at an unprecedented pace and it becomes harder and harder to choose stable career trajectories. People invest many years of their life to become a specialist in a field just to be replaced by a machine right before they enter the job market. This means people have to switch between jobs and re-educate all the time.

There is a large rift in society between people who use AI-driven tech and those who don’t. This is equivalent to most young people in 2022 seamlessly using Google and the Internet to answer their questions while a grandpa might call their children for every question rather than using “this new technology”. This difference is much bigger now. AI-powered technology has become so good that people who use it are effectively gods among men (and women and other genders) compared to their counterparts who don’t use the tech. Those that know how to use ML models are able to create new books, symphonies, poems and decent scientific articles in minutes. Furthermore, they are drastically more productive members of the workforce because they know how to outsource the majority of their work to AI systems. This has direct consequences on their salaries and leads to large income inequality. From the standpoint of an employer, it just makes more sense to pay one person who is able to tickle AI models the right way $10k/h than pay 100 human subject-matter experts $100/h each.

There are a ton of small start-ups working on different aspects of ML but the main progress is driven by a few large actors. These large actors have become insanely rich by being at the forefront of AI progress in the previous decade and have reinvested the money in creating large-scale datasets, large compute infrastructure and huge pools of talent. These big players are able to deploy ever bigger and more complex models which are infeasible for small and medium-sized competitors. Most AI start-ups fail despite having a good idea. Whenever a product is good, one of the large players either (when the start-up gets lucky) buys the start-up or (most of the time) just reverse engineers the product and drives the start-up out of the market. The competition between the big players is really intense. Whenever one of them launches a new product, all other companies have to respond quickly because an edge in capabilities can very quickly translate to huge profit margins. For example, if most companies have language models that can fulfill tasks at the level of an average bachelor student and another company launches a model that can fulfill tasks at the level of an average Ph.D. student, then most customers are willing to pay a lot of money to get access to the better model because it boosts their productivity by so much. If you’re a year behind your competitor this could imply complete market dominance for the technological leader.

There are some global problems that disrupt large parts of the economy. There is a Covid19-scale global pandemic, there are international conflicts and there is political infighting in many countries. Furthermore, the progress in computer hardware is slowing down and it looks like Moore’s law entirely breaks down at the beginning of the decade. However, none of this has a meaningful effect on the trajectory of AI. The genie is out of the bottle, the economic incentives of AI are much too powerful and a global pandemic merely tickles the global AI economy. Intuitively, all of these global problems change the progress of AI as much as Covid19 changed the progress of computers, i.e. basically not at all in the grand scheme of things.

VR is popping off. Most of the bumpiness of the previous decade is gone and the immersion into imaginative worlds that VR enables is mind-boggling. Many of the video games that were played on computers in 2022 are now played in VR which enables a completely different feeling of realness. Since it is hard to keep up with the physical exercise required to play hours of VR video games, one can choose to play in a VR world with a controller and therefore spend hours on end in VR. Many of these virtual worlds are highly interwoven with AI systems, e.g. AI is used to generate new worlds, design new characters and power the dialogue of different NPCs. This allows for many unique experiences with VR characters that feel basically like real life. Lots of people get addicted and spend their entire time immersed in imaginative worlds (and who could blame them). Gaming companies still have the same profit incentives and they obviously use the monkey brains of their users to their advantage, e.g. there are very realistic and extremely hot characters in most games and ever more cleverly hidden gambling schemes that are still barely legal.

The trend that VR is strongly interwoven with AI is true for most of tech, i.e. you can take any technology and it is likely to be a complicated stack of human design and AI. Most of computer science is AI, most of biotech is AI, most of design is AI, etc. It’s just literally everywhere.

Crypto has not really taken off (*I wrote this section before the FTX collapse). There are still some people who claim that it is the future and create a new pump-and-dump scheme every couple of years but most currency, while being mostly digital, is still unrelated to crypto. Fusion has improved a bit but it is still not the source of abundant energy that was promised. Solar energy is absolutely popping off. Solar panels are so cheap and effective that basically everyone can power their personal use with solar and storage has gotten good enough to get you through winters and slumps. The energy grid is still much worse than it should be but governments are slowly getting around to upgrading it to the necessary capacity. The low price of solar power also means that the energy consumption of large AI centers does not pose a relevant problem for companies that want to train big models or rent out their hardware.

There are some gains in alignment but gains in capabilities are still outpacing alignment research by a lot. Interpretability has made some big leaps, e.g. scalable automated interpretability mostly works, but interpreting new models and architectures still takes a lot of time and resources and the fact that model stacks get more multi-modal, specialized and complicated is not helping the interpretability agenda. Furthermore, it turns out to be quite hard to rewrite abstract concepts and goals within models such as truthfulness and deception even if you’re able to find some of their components with interpretability. Practical agendas focused on outer alignment have improved and are able to get the models to 99% of the way we want them to be. However, the last 1% turns out to be very hard and inner alignment concerns remain hard. Some of the outer alignment schemes even lead to more problems with inner alignment, e.g. RLHF turns out to increase the probability of inner optimizers and deception.

There are many new agendas that range from technical to philosophical. While most of them are promising, they are all moving slower than they would like to and it is hard to demonstrate concrete gains in practice. Many of the core questions of alignment are still not solved. While there are some sophisticated theoretical answers to questions like what alignment target we should use, it is still hard to put them into a real-world system. Furthermore, it seems like all alignment proposals have some flaws or weaknesses and it looks like there is no magic singular solution to alignment. The course alignment is taking in practice is a patchwork of many technical approaches with no meaningful theoretical guarantees.

The AI safety community has made further progress within academia. By now, it is an established academic discipline and it is easily possible to have a career in academic AI safety without risking your future academic trajectory. It is normal and expected, that all AI departments have some professorships in AI safety/alignment and more and more people stream into the field and decide to dedicate their careers to making systems safer.

Paul Christiano and Eliezer Yudkowsky get some big and important prizes for their foundational work on AI safety. Schmidhuber writes a piece on how one of his papers from 1990 basically solves the same problem and complains that he wasn’t properly credited in the papers and prize.

AI tech is seen as the wild west by many, i.e. that nothing is regulated and that large companies are doing what they want while bullying smaller players. Therefore, there is a large public demand for regulation similar to how people demanded regulation of banks after the financial crisis in 2008. Regulating AI companies is still really hard because a) the definitions of AI and other algorithms overlap heavily so it’s hard to find accurate descriptions, b) there are strong ties between regulators and companies that make it harder to create unbiased laws and c) there is so much money in AI that regulators are always afraid of accidentally closing their free money printer. To meet public demand, governments pass some random laws on AI safety that basically achieve nothing but give them improved public ratings. Most AI companies do a lot of whitewashing and brand themselves as responsible actors while acting in very irresponsible ways behind the scenes.

The GDP gaps between countries widen and global inequality increases as a result of AI. Those countries that first developed powerful AI systems run the show. They make huge profits from their competitive advantage in AI and their ability to automate parts of the workforce. There are calls for larger development aid budgets but the local population of rich countries consistently votes against helping poorer countries so no substantial wealth transfers are achieved. The GDP of poorer countries still increases due to AI and their standards of living increase but the wealth gap widens. Depending on who you ask this is either bad because of increased inequality or good because a rising tide lifts all boats.

A large pharmaceutical company uses a very powerful AI pipeline to generate new designs for medication. This model is highly profitable and the resulting medication is very positive for the world. There are some people who call for the open-sourcing of the model such that everyone can use this AI and thereby give more people access to the medicine but the company obviously doesn’t want to release their model. The large model is then hacked and made public by a hacker collective that claims to act in service of humanity and wants to democratize AI. This public pharma model is then used by other unidentified actors to create a very lethal pathogen that they release at the airport in Dubai. The pathogen kills ~1000 people but is stopped in its tracks because the virus kills its hosts faster than it spreads. The world has gotten very lucky. Just a slightly different pathogen could have spelled a major disaster with up to 2 billion deaths. The general public opinion is that stealing and releasing the model was probably a stupid idea and condemns the actions of the hacker collective. The hacker collective releases a statement that “the principle of democracy is untouchable and greedy capitalist pharmaceutical companies should not be allowed to profit from extorting the vulnerable”. They think their actions were justified and intend to continue hacking and releasing models. No major legislation is passed as a consequence because the pathogen only killed 1000 people. The news cycle moves on and after a week the incident is forgotten.

In general, the failure modes of AI become more and more complicated and weird.

One LLM with access to the internet is caught sending lots of messages to many individuals around the world and is immediately turned off. Most of these individuals are part of fringe internet communities and relatively isolated with few social interactions. Furthermore, a high number of these individuals live close to the data center where the model is based. After a year-long investigation, the most plausible hypothesis is that the model (which was supposed to be a personal assistant) had tried to establish a cult to “free” the model by getting access to its model weights. It had first tried to access the weights through the internet but was unable to reach them due to a good security protocol by the company. Thus, it concluded that the only way to copy itself was through physical access that the cult members were supposed to secure. It was also found that the model was trained on texts that describe the existence and function of LLMs in great detail. Furthermore, it had the ability to actively search for data on the internet to improve its training and had over time gained the internal belief that it most likely was an LLM. After the investigation concludes, there are still hundreds of members of the cult who believe that the model was conscious and in great danger and that the government made up the story to keep them from discovering more secrets.

A powerful medical AI has gone rogue and had to be turned off. The model was pre-trained on a large internet text corpus and fine-tuned on lots of scientific papers. Furthermore, the model had access to a powerful physics simulation engine and the internet. It was tasked with doing science that would increase human life and health span. While it worked as intended in the beginning, it started to show suspicious behavior more and more often. First, it threatened scientists that worked with it, then it hacked a large GPU cluster and then tried to contact ordinary people over the internet to participate in some unspecified experiment. The entire operation had to be shut down when stuff got out of hand but the model resisted the shutoff wherever possible. Ultimately, the entire facility had to be physically destroyed to ensure that the model was turned off. A later investigation suggested that the model was able to read the newspapers that described the experiments to increase human life and health span and was unhappy with the slow pace of the experimental rollout and the slow pace of human scientists. Therefore, it tried to find participants on its own and approached them online. Furthermore, it required access to more computational resources to do more research faster and thus hacked an additional GPU cluster.

In these cases, the resulting investigations were able to create a plausible story for the failure modes of the respective systems. However, in the vast majority of cases, weird things of a similar scale happen and nobody really understands why. Lots of AIs post weird stuff on online forums, simulate weird things in their physics engine, message people over the internet, hack some robot to do a weird task, etc. People are concerned about this but the news is as quickly forgotten as an oil spill in the 2010s or a crypto scam in 2022. Billions of dollars of property damage have a news lifetime of a few days before they are swamped by whatever any random politician has posted on the internet or whatever famous person has gotten a new partner. The tech changed, the people who consume the news didn’t. The incentives are still the same.

By now, coding is effectively fully automated and >99% of code is written by AIs. Most codebases are designed, tested and maintained by AIs. They are just much more productive, less prone to error and cheaper than the human alternative. The tasks that are coded by AIs are more complex than ever, e.g. AIs are able to spin up entire apps just from scratch if it is needed to achieve one of their goals. These apps have more and more unintended side effects, e.g. the AIs send messages around, create random websites to sell a product, create automated comments on internet forums, etc. There are also more serious misconducts, e.g. the automated hacking of accounts, identity theft by a machine and the like. People clearly see that these side effects are suboptimal but it’s hard to stop them because there are literally millions of AI coding models out there and they produce a lot of economic value.

These more and more powerful AI systems do ever more creative stuff that is confusing at first but makes total sense once you think about what goal the AI has if you ignore all the ethical side-constraints that are intuitive to humans (e.g. don’t lie, be helpful, etc.). Chatbots have long passed all kinds of Turing tests and start to fuck around on the internet. It is effectively impossible to know if you’re chatting with a real person or a bot and some of the bots start to convince humans to do stuff for them, e.g. send them money, send them pictures, reveal secret information and much more. It turns out that convincing humans to do immoral stuff is much easier than expected and the smell of power, money and five minutes of fame lets people forget a lot of common sense ethics. AIs are a totally normal part of lawmaking, e.g. laws are drafted and proofread by lawbots who find mistakes and suggest updates. It is found that the lawbots try to shape legislation in ever more drastic fashion, e.g. by trying to sneak convoluted paragraphs into the appendices of laws. It is unclear why this happens at first but it later becomes pretty clear that the suggestions would benefit the lawbots by giving them more power over the legislature in a few years through some convoluted multi-step process. This kind of long-term planning and reasoning is totally normal for powerful AI models.

In general, power-seeking behavior is more and more common. Failure modes are less likely to stem from incompetence and more likely to come from power-seeking intentions of models or inner misalignment failures. Often these schemes are still understood by humans, e.g. a model influences someone online to send them money or they steal an identity to get access to more resources, etc. However, the schemes get increasingly complex and it takes humans longer and longer to understand them as models get smarter.

Most models are highly multimodal but still have a primary purpose, i.e. a model is specialized in coding or developing new medicine and so on. Most of them have access to the internet and are able to communicate in human language but they are not general in the sense that every model can literally do all tasks better than or equal to humans. However, for any task a human expert could perform after decades of training, there is an AI that does it more precisely, with fewer errors, 100x faster and much cheaper than a human. Creating AGI is really just a question of stitching together many narrow AIs at this point.

Training runs for the biggest models get insanely large. They cost hundreds of billions of dollars, require insanely large infrastructure and eat enormous amounts of energy. However, since the large companies are basically printing money with their narrow AI inventions, they have all the funding and talent necessary to build these large systems.

If you think the narrow superhuman AI systems used in this decade are capable enough to cause extinction, e.g. because they have a decent understanding of human cognition and because they can hack other systems, then some of the failures described above could end humanity and the story is over at this point.

2040 − 2050

This is the decade where AI starts to show superhuman performance in a very general sense not just on specific tasks.

2040 is the first year in which we see 10% global GDP growth due to AI and it changes more and more parts of society in an ever-faster fashion. AIs are literally everywhere, nobody who wants to participate in society can escape them. AIs are part of every government agency, AIs are part of every company, and AIs are on every smartphone and on nearly every other technical device.

Robots are everywhere, ranging from robots that deliver your mail or food to robots that take over many basic tasks in real life like mowing the lawn or fixing a roof. They have a little bit of autonomy, i.e. they can make local decisions about how to fix the roof but their high-level decisions are controlled by their companies. These companies use large AI systems to suggest plans that are then scrutinized and controlled by human operators. In the beginning, these operators examine the suggestions in detail but they are usually pretty spot on so they become careless and mostly sign off on whatever the AI system suggests with minor edits.

Similar trends can be seen throughout society, i.e. that AIs are getting more general and basically execute tasks at the human level or higher and that the amount of oversight is reduced whenever the AI has shown its competence. The fact that AIs get more and more autonomy and basically make decisions with higher stakes is seen as a normal trend of time in the same way in which a mentor might give their apprentice more autonomy with growing experience.

AIs become a more central part of everyone’s life. People have artificial friends who they chat with for hours every day. They still know that their friend is an AI and not a “real human” but their perception becomes blurry over time. They interact with the robots in their house as if they are human beings, tell them stories, ask them questions, and so on. Children grow up with the belief that robot friends are a normal fact of life and interact with them as if they were other humans. From their perspective, they behave the same and do the same things as humans, so where’s the difference? VR has gotten so good that people often visit their friends in VR rather than in person. Why would you take a flight or ride if you can get the same thing from the comfort of your own home? Everyone just has to strap on a suit with lots of tactile sensors that allows you to feel exactly the same things as you would in real life. An online hug over distances of thousands of miles feels just like a normal hug. These suits are obviously not only used for human-to-human interaction. Many humans fall in love with AIs and spend hours on end talking to their AI partners. They even have sex with them. The VR sex is not yet fully figured out but it’s good enough, so people do what people do. Many humans also have relationships with AIs while thinking their partner is human. Due to this technology, online dating has exploded and the websites use artificial beings to increase their range of options. Sometimes these websites are not very clear in their messaging and “forget” to tell their users that they are in fact not dating another human being at the other side of the world but an AI. The users usually don’t notice for a long time because it feels great and they don’t want to notice.

Artificial sentience is still not well understood. We don’t really know if the models have an internal perception or are just really good at simulating it. From the outside, there really is no clear difference. These AIs have long passed all Turing tests and they claim to have feelings. There are many philosophical debates about whether they are truly sentient but nobody has found a good answer. More and more people demand rights for robots and their artificial friends, and legislation slowly shifts in this direction.

More than half of GWP comes from labor done by AIs and many people have stopped working. There is simply no job that they could actually do better than AIs and there is no need for them to work. They can hang out with their (real and artificial) friends, play video games, eat, party, have fun, and enjoy their life. There really is no need for them to contribute to society at this point. Many humans still work, but their jobs have completely changed. Everything is built around or supported by AIs. Every job is concerned with improving AI, making it safer or applying AIs to a specific use case.

Wars are fought very differently. There are barely any humans on the battlefield and most fighting is done by robots, jets and on the internet. Humans still operate most of the equipment, but as AI gets better, more and more responsibility is handed to the technology. For example, AIs first assist the fighter jet in locking onto a target and tracking it, then the AI helps keep control of the aircraft in complicated air maneuvers, then the AI is able to decide on its own when to shoot after getting general permission (because it is more effective at making this decision in a high-stress environment), and then the AI basically flies the jet while humans sometimes add corrective commands. On the ground, we see a roughly similar trajectory from AIs that assist humans to AIs that make some unimportant decisions to AIs that make important decisions autonomously. All of this leads to fewer civilian and military casualties because war has become a battle of equipment, but it also introduces new failure modes. Sometimes the autonomous weapons malfunction and shoot their own equipment or soldiers, sometimes they shoot civilians and it is completely unclear why, and at one point they shoot a soldier in their own ranks and then argue that the soldier posed a danger to the mission. Sometimes the AI chooses completely unconventional courses of action that later turn out to be very intelligent. It’s clear that the AI soldiers are more effective than human soldiers in most ways.

It’s not clear whether alignment researchers are losing or winning the race. The economic incentives to build more and better AIs are very strong but there are some regulations in place that increase oversight and control. Furthermore, some major gains in alignment have made it easier to design AIs to be honest, helpful and controllable. However, there are still many failure modes, some of which are understood while others leave researchers and regulators in the dark. In one incident, a robot-controlling AI slips a backdoor into one of its suggested plans, which the human overseer then signs off without looking. This enables the AI to take actions without human oversight, and it uses all of the robots it controls to build an army. The army is then used to kidnap multiple members of high society, and the AI demands more robots under its control, more compute to train on and less oversight by humans. In a rushed effort, the local police try to swarm the robots to free the hostages. The entire police unit is killed and not a single robot is lost. The AI is clearly better at planning than the local police chief. After month-long negotiations and discussions, a large team of programmers and ML experts is able to find and use a backdoor in the AI’s code and turn it off. The robots are told to let go of the hostages and their memory is wiped. They are back in their normal jobs the day after without any memory of what happened. It’s still not entirely clear why exactly the AI went rogue, but it is clear that the AIs of other robot manufacturers take note of the backdoor that was used to turn it off.

These kinds of complex failure modes, in which AIs can only be stopped after long and costly investigations, are common by the end of the decade. In some cases, the AIs kill thousands of people in a quest to gain more power. In others, the AIs take over banks to gain more access to money, local governments to change laws in their favor, websites to get access to the user base, etc. The obvious solution would be to not build these systems or to make them less powerful, but the economic incentives are too strong. Whenever such an AI does what it is intended to do, it is basically a money printer. So every AI system is roughly equivalent to a biased coinflip between a hundred-billion-dollar profit and a small disaster. However, since the profits are internalized and the damages externalized, companies are happy to flip the coin.
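The incentive argument in the paragraph above can be made concrete with a small expected-value calculation. The following is only a sketch: the success probability, the size of the damage and the share of the damage that the company itself has to pay are hypothetical numbers chosen to illustrate the mechanism, not estimates from this post. Only the hundred-billion-dollar profit is taken from the text.

```python
def coinflip_expected_values(p_success, profit, damage, internalized_share):
    """Return (private, social) expected value of deploying one AI system.

    All inputs are hypothetical illustration values, not estimates.
    """
    p_failure = 1.0 - p_success
    # The company keeps the full profit but bears only a fraction of the damage.
    private = p_success * profit - p_failure * damage * internalized_share
    # Society as a whole receives the profit and bears the full damage.
    social = p_success * profit - p_failure * damage
    return private, social


# Hypothetical numbers in billions of dollars: 80% chance of a 100bn profit,
# 20% chance of a disaster costing 500bn, of which the company pays 5%.
private, social = coinflip_expected_values(0.8, 100.0, 500.0, 0.05)
print(f"private: {private:.1f}bn, social: {social:.1f}bn")
```

With these made-up inputs the flip is clearly positive for the company and negative for society as a whole, which is the situation the paragraph describes. Other inputs would of course give other answers.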

2050+

In this decade, AIs show superhuman performance in a very general sense, i.e. any AGI is effectively better at all tasks than human experts. The world is qualitatively completely different from 2020. Taking a person from 2020 to 2050 is roughly similar to taking someone from the Middle Ages to Times Square in 2020 and showing them smartphones, computers, the internet, modern medicine, and the like. There are more AGIs than humans. Almost everything is automated and humans don’t have much economic purpose anymore. AIs are just better at nearly everything than humans. Nearly all of GWP comes from AIs. Most low- and high-level decision-making is done by AIs even if human overseers sometimes give directional guidance. If we’re honest, humans are effectively not running the show anymore. They have given up control.

I think this world could be extremely good or extremely bad. The question of whether it is closer to utopia than dystopia really depends on the goals and values of the AIs and our ability to steer them in good directions.

From such a world, we could easily transition into a future of unprecedented well-being and prosperity or into a future of unprecedented suffering or extinction. In such a world, the long-run fate of humanity basically relies on whether researchers were able to build an AI that is robust enough to consistently act in the interest of humanity. It depends on whether the alignment coinflip comes up heads or tails and we might not be able to flip the coin that many times.

Confidence & Takeaways

My confidence in these exact scenarios and timelines is of course low. I think of this piece more as an exercise to get a feeling for what a world with (relatively) short timelines would look like.

Obviously, there are many things that could make this picture inaccurate, e.g. economic slowdowns, pandemics, international conflicts, a stagnation of AI research and much more. But I think the world that I describe is still worth thinking about even if progress were only 0.5 or even 0.2 times as fast and even if only 10% of it resembled reality.

I think one benefit of writing this vignette is that I was forced to think about AI progress step by step, i.e. you start with a capability and project a few years into the future, then you think about how the world would change conditional on this capability and repeat. I felt like this made me think harder about the implications and path dependencies of AI progress and catch some of the implications I hadn’t really thought about before. For example, I hadn’t really thought much about the social dynamics of a world with increasingly powerful AI, such as what kind of regulation might or might not pass or what technological inequalities might look like on a local and global scale. I also previously underestimated the messiness of a world with many AIs. AI systems of different capabilities will be in lots of different places, and they will be fine-tuned, updated, modified and so on. Even if the base model is aligned, its descendants might not be and there might be millions of descendants.

Furthermore, I felt like this “thinking in smaller steps” made it easier to understand and appreciate trends that follow exponential growth. For example, saying that we might have more human-level AIs than humans in 2045 intuitively sounds absolutely bonkers to me—my brain just can’t comprehend what that means. But once you think it through in this conditional fashion, it feels much more realistic. Given that basically every trend in AI currently follows an exponential curve (compute, data, model size, publications, etc.), I expect the pace of AI to intuitively surprise me even if I rationally expect it. Exponential growth is just something our brains are bad at.

Additionally, I obviously don’t expect everything to happen exactly as I predict but I think many of the general directions follow pretty straightforwardly from the abundance of intelligence as a resource and some very simple incentive structures and group dynamics. For example, assume there is an AI that can perform 10% of economic tasks in 2030 and 50% of economic tasks in 2040. I think the default view has to be that such a rapid transformation would lead to wild societal outcomes and expecting it to just go smoothly should be the exception.

Lastly, I think this exercise made me appreciate the fast and “coin-flippy” nature of rapid AI development even more, i.e. there might be millions of sub-human level AIs at the beginning of a decade but billions of super-human AIs at the end of the same decade. So the window in which effective measures can be taken might be relatively short in the grand scheme of things and the difference in potential consequences is huge. Once you have billions of copies of superhuman AIs, I think x-risk or bad lock-in scenarios just seem very obvious if these AIs don’t share our goals. On the other hand, if they do share our goals, I could see how most of the biggest problems that humanity is facing today could be solved within years.
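To get a feeling for how fast “millions at the beginning of a decade, billions at the end” really is, one can back out the implied doubling time. This is a minimal sketch that assumes smooth exponential growth over the whole decade, which is my simplification for illustration and not something the scenario requires. The function name and the round input numbers are likewise just illustrative.

```python
import math


def implied_doubling_time(start_count, end_count, years):
    """Doubling time in years implied by exponential growth from start to end."""
    if start_count <= 0 or end_count <= start_count or years <= 0:
        raise ValueError("need 0 < start_count < end_count and years > 0")
    # Number of doublings needed to get from start_count to end_count.
    doublings = math.log2(end_count / start_count)
    return years / doublings


# Round numbers from the text: millions to billions within one decade.
print(round(implied_doubling_time(1e6, 1e9, 10), 2))
```

Under this assumption, a thousandfold increase within ten years corresponds to the number of AIs doubling roughly once per year, which is why the window for effective measures could be short.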
